2022-2023 Undergraduate Catalog

Freshman Admission: General Statement

Kean offers a smarter approach to education that focuses on giving you the skills and real-world experience to launch the career of your dreams. Applicants for admission to Kean University are considered in terms of prior achievement and future promise. The Office of Admissions uses alternate indicators to evaluate college preparedness, including but not limited to courses taken, grade point average, class rank, and earned college credits.

Apply, Visit, or Request Information today.

Important Dates and Deadlines:

- December 1: Preferred Application Deadline (Spring semester)

- January 1: Early Action Deadline

- March 1: Preferred FAFSA (Free Application for Federal Student Aid) Deadline

- April 30: Preferred Application Deadline (Fall semester); rolling basis thereafter

- May 1: National Decision Day - Preferred Tuition Deposit Deadline

- May 1: Educational Opportunity Fund (EOF) Program Application Deadline (Fall semester admission only)

Freshman Application Instructions

International Application Instructions

Kean University

Transfer Application Instructions

Thank you for your interest in Kean University. Below you will find application instructions for transfer students. Please note that freshmen with advanced standing who have earned college credits after high school graduation are required to apply as transfer students.

If you are an international applicant, please visit our International Application Instructions page.

Applicants: Important Dates and Deadlines

Fall Priority Deadline: June 1

Fall Deadline: August 1 (Michael Graves College: Graphic Design, June 30)

Spring Priority Deadline: November 1

Spring Deadline: December 1

Applicants with 30 credits or more are required to submit:

- Official transcripts from every college and post-secondary school attended.

Transfer applicants with fewer than 30 credits are required to submit:

- Transcripts from every college and post-secondary school attended, and

- Official high school transcripts.
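The 30-credit rule above can be sketched as a small lookup. This is only an illustration: the function name `required_transcripts` and the document labels are hypothetical and are not part of any Kean system.

```python
def required_transcripts(credits_earned: int) -> list[str]:
    """Return the transcripts a transfer applicant must submit.

    Hypothetical helper: it encodes only the 30-credit rule stated above.
    """
    if credits_earned < 0:
        raise ValueError("credits_earned cannot be negative")

    # Every transfer applicant sends college and post-secondary transcripts.
    documents = ["transcripts from every college and post-secondary school attended"]

    # Applicants with fewer than 30 credits also send high school transcripts.
    if credits_earned < 30:
        documents.append("official high school transcripts")

    return documents


if __name__ == "__main__":
    for credits in (12, 30, 45):
        print(credits, required_transcripts(credits))
```

An applicant with exactly 30 credits falls under the "30 credits or more" rule, so the high school transcript is not added in that case.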

A complete application consists of the following items*:

- A submitted online application.

- Official transcripts as referenced above from every college and post-secondary school attended must be sent electronically to the attention of the Office of Transfer Admissions at [email protected] . If electronic copies of transcripts are unavailable, official (sealed envelope) transcripts can be sent by mail to the Office of Transfer Admissions located in Kean Hall at 1000 Morris Avenue, Union, New Jersey 07083.

- A non-refundable $75 application fee. Application fees may be paid through the online application by credit card or debit card. Alternatively, the fee may be paid by check or money order made payable to Kean University and sent to the address below.

- International students (i.e. those who require an I-20 Form, including those on F-1 Visas to study in the U.S.) must also complete the International Student Application Supplement.

* Please review the additional application requirements for consideration into the following programs:

- Architecture

- Art Education

Further, please note the following:

- The current deadline for programs in the Michael Graves College (Architecture and Design programs) is June 30.

- Incomplete applications and submitted applications received without transcripts will not be processed for decision.

- Transfer credit will only be awarded from institutions listed on the application at the point of admission. Any institutions not listed will nullify the potential transfer of those credits.

- Deliberate omission of an institution attended to conceal poor performance will result in withdrawal of an admission offer.

Mailing Instructions:

Coming Back to Kean? (Readmission)

Once a Cougar, always a Cougar! We invite you to finish what you started! If you were admitted and enrolled at Kean but never completed your degree requirements, apply for Readmission. Get started by connecting with one of our admissions counselors.

- For detailed information on readmission, please visit Kean.edu/offices/admissions/readmission-kean.

Applicants for Second Baccalaureate Degree

Transfer applicants who already hold a baccalaureate degree from an accredited four-year U.S. college/university, or the foreign equivalent, must follow the same Transfer Application Instructions listed above. Please also carefully read the information below.

Second Baccalaureate Degree Requirements

- You must have a Baccalaureate degree from an accredited four-year U.S. college/university or the equivalent from a foreign university. The equivalency of the foreign degree must be verified by an NACES accredited evaluation agency (see above in Application Instructions).

- Your GPA from your baccalaureate degree-granting institution must meet the minimum GPA requirement for the specific major desired. Minimum required GPAs range from 2.0 to 3. depending on the major.

- You will also be subject to any other requirements or special procedures in effect for admission to the major program you select (e.g. portfolios for design majors).

Please Note:

- Not all major programs offer second degrees. For example, second degrees in Education majors are not offered. Students interested in those majors should change their application type from Transfer to Post-Baccalaureate Teaching Certification in the Academic Intent section of the online application.

- You cannot work on your first and second baccalaureate degrees simultaneously.

Second Baccalaureate Degree Completion Requirements

- You must complete all academic major requirements and all "cognate" or "additional required courses" that support the major and any lower-level prerequisites for those courses, if applicable.

- You will be granted Advanced Standing Credits for all Free Electives and General Education (GE) requirements - except for GE courses that are prerequisites, if applicable.

- Transfer courses may be awarded toward the Second Degree major; however, you must complete a minimum of 32 credits, including at least half of the major requirements, at Kean after admission to the Second Degree program.

- Once accepted to the Second Degree program, all coursework must be completed at Kean University.

Additional Resources

- Transfer Credit Evaluation

- Transfer- Planning to Become a Teacher

Admissions Appeal Request

Requests for reconsideration of the Kean University Undergraduate Admissions Application must be based on new academic information that was not available at the time of the original decision and that shows a significant improvement over the credentials previously submitted.

If you would like to appeal your admissions decision, please email [email protected] .

Kean University

Academic Programs

- Accounting*

- Art & Design

- Communication

- Criminal Justice*

- Engineering

- Finance/Accounting

- Government/Political Science

- Health Science

- Liberal Arts

- Performing Arts

- Psychology*

- R.N. to BSN*

- Social Science

- Visual Arts

Student experience

- Co-op/Internship Opportunities

- Disability Services

- Hispanic Serving Institution (HSI)

- Intramural/Club Sports

- LGBTQIA Services

- Military/Veteran Services

- Night Class Offerings

- On-Campus Housing

- Online Programs

- PTK Transfer Friendly University

- Professional Equity Theatre

- ROTC Program

- Study Abroad

- Undergraduate Research

- Veteran Fee Waiver

- Virtual Learning

Application information

Find out about requirements, fees, and deadlines

Kean University offers a smarter approach to education that focuses on rewarding careers, fulfilling lives, and lifelong learning. Ready to apply as a freshman? Learn more about application instructions and important deadlines at kean.edu/admissions.

Kean University is nationally recognized for the seamless way we welcome transfer students who are ready to pursue four-year degrees. Ready to apply as a transfer student? Learn more about application instructions and important deadlines at kean.edu/admissions.

Additional Information

Admissions office

1000 Morris Avenue, Union, NJ 07083, United States of America

Phone number

(908) 737-7100

For first-year students

Admissions website

www.kean.edu/offices/admissions

Financial aid website

www.kean.edu/offices/financial-aid

For transfer students

Undocumented or DACA students

www.kean.edu/offices/registrars-office/new-jersey-dream-act

Kean University Requirements for Admission

What are Kean University's admission requirements? While there are a lot of pieces that go into a college application, you should focus on only a few critical things:

- GPA requirements

- Testing requirements, including SAT and ACT requirements

- Application requirements

In this guide we'll cover what you need to get into Kean University and build a strong application.

School location: Union, NJ

Admissions Rate: 82.6%

If you want to get in, the first thing to look at is the acceptance rate. This tells you how competitive the school is and how serious their requirements are.

The acceptance rate at Kean University is 82.6%. For every 100 applicants, 83 are admitted.

This means the school is lightly selective. The school will have its expected requirements for GPA and SAT/ACT scores. If you meet those requirements, you're almost certain to get an offer of admission. But if you don't meet Kean University's requirements, you'll be one of the unlucky few people who get rejected.

Kean University GPA Requirements

Many schools specify a minimum GPA requirement, but this is often just the bare minimum to submit an application without immediately getting rejected.

The GPA requirement that really matters is the GPA you need for a real chance of getting in. For this, we look at the school's average GPA for its current students.

Average GPA: 3.2

The average GPA at Kean University is 3.2.

(Most schools use a weighted GPA out of 4.0, though some report an unweighted GPA.)

With a GPA of 3.2, Kean University accepts below-average students. It's OK to be a B-average student, with some A's mixed in. It'd be best to avoid C's and D's, since application readers might doubt whether you can handle the stress of college academics.

SAT and ACT Requirements

Each school has different requirements for standardized testing. Only a few schools require the SAT or ACT, but many consider your scores if you choose to submit them.

Kean University hasn't explicitly named a policy on SAT/ACT requirements, but because it's published average SAT or ACT scores (we'll cover this next), it's likely test flexible. Typically, these schools say, "if you feel your SAT or ACT score represents you well as a student, submit them. Otherwise, don't."

Despite this policy, the truth is that most students still take the SAT or ACT, and most applicants to Kean University will submit their scores. If you don't submit scores, you'll have one fewer dimension to show that you're worthy of being admitted, compared to other students. We therefore recommend that you consider taking the SAT or ACT, and doing well.

Kean University SAT Requirements

Many schools say they have no SAT score cutoff, but the truth is that there is a hidden SAT requirement. This is based on the school's average score.

Average SAT: 990

The average SAT score composite at Kean University is a 990 on the 1600 SAT scale.

This score makes Kean University Lightly Competitive for SAT test scores.

Kean University SAT Score Analysis (New 1600 SAT)

The 25th percentile SAT score is 910, and the 75th percentile SAT score is 1150. In other words, a 910 on the SAT places you below average, while an 1150 will move you up to above average.

Here's the breakdown of SAT scores by section:

SAT Score Choice Policy

The Score Choice policy at your school is an important part of your testing strategy.

Kean University ACT Requirements

Just like for the SAT, Kean University likely doesn't have a hard ACT cutoff, but if you score too low, your application will get tossed in the trash.

Average ACT: 20

The average ACT score at Kean University is 20. This score makes Kean University Moderately Competitive for ACT scores.

The 25th percentile ACT score is 16, and the 75th percentile ACT score is 25.

ACT Score Sending Policy

If you're taking the ACT as opposed to the SAT, you have a huge advantage in how you send scores, and this dramatically affects your testing strategy.

Here it is: when you send ACT scores to colleges, you have absolute control over which tests you send. You could take 10 tests, and only send your highest one. This is unlike the SAT, where many schools require you to send all your tests ever taken.

This means that you have more chances than you think to improve your ACT score. To reach the school's ACT target of 16 or above, take the ACT as many times as you can. Once you have a final score that you're happy with, you can send only that score to all your schools.

ACT Superscore Policy

By and large, most colleges do not superscore the ACT. (Superscore means that the school takes your best section scores from all the test dates you submit, and then combines them into the best possible composite score). Thus, most schools will just take your highest ACT score from a single sitting.

We weren't able to find the school's exact ACT policy, which most likely means that it does not Superscore. Regardless, you can choose your single best ACT score to send in to Kean University, so you should prep until you reach our recommended target ACT score of 16.

SAT/ACT Writing Section Requirements

Currently, only the ACT has an optional essay section that all students can take. The SAT used to also have an optional Essay section, but since June 2021, this has been discontinued unless you are taking the test as part of school-day testing in a few states. Because of this, no school requires the SAT Essay or ACT Writing section, but some schools do recommend certain students submit their results if they have them.

Kean University considers the SAT Essay/ACT Writing section optional and may not include it as part of their admissions consideration. You don't need to worry too much about Writing for this school, but other schools you're applying to may require it.

Final Admissions Verdict

Because this school is lightly selective, you have a great shot at getting in, as long as you don't fall well below average. Aim for a 910 SAT or a 16 ACT or higher, and you'll almost certainly get an offer of admission. As long as you meet the rest of the application requirements below, you'll be a shoo-in.

But if you score below our recommended target score, you may be one of the very few unlucky people to get rejected.

Admissions Calculator

Here's our custom admissions calculator. Plug in your numbers to see what your chances of getting in are. The result falls into one of these bands:

- 80-100%: Safety school: Strong chance of getting in

- 50-80%: More likely than not getting in

- 20-50%: Lower but still good chance of getting in

- 5-20%: Reach school: Unlikely to get in, but still have a shot

- 0-5%: Hard reach school: Very difficult to get in
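As a rough sketch, the bands above can be expressed as a lookup from an estimated admission chance to a label. The list does not say which band a boundary value such as exactly 80% belongs to, so this example assumes each boundary counts toward the higher band; the names `BANDS` and `chance_band` are hypothetical and do not come from the calculator itself.

```python
# (lower bound in percent, label), ordered from the highest band to the lowest.
# Assumption: a value exactly on a boundary (e.g. 80) belongs to the higher band.
BANDS = [
    (80, "Safety school: Strong chance of getting in"),
    (50, "More likely than not getting in"),
    (20, "Lower but still good chance of getting in"),
    (5, "Reach school: Unlikely to get in, but still have a shot"),
    (0, "Hard reach school: Very difficult to get in"),
]


def chance_band(percent: float) -> str:
    """Map an estimated admission chance (0-100) to its band label."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    for lower_bound, label in BANDS:
        if percent >= lower_bound:
            return label
    # Not reachable for valid input because the last band starts at 0.
    raise AssertionError("no band matched")


if __name__ == "__main__":
    for value in (92, 80, 35, 4.9):
        print(value, "->", chance_band(value))
```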

Application Requirements

Every school requires an application with the bare essentials - high school transcript and GPA, application form, and other core information. Many schools, as explained above, also require SAT and ACT scores, as well as letters of recommendation, application essays, and interviews. We'll cover the exact requirements of Kean University here.

Application Requirements Overview

- Common Application: Accepted

- Electronic Application: Available

- Essay or Personal Statement: Required for all freshmen

- Letters of Recommendation: 2

- Interview: Not required

- Application Fee: $75

- Fee Waiver: Available

- Other Notes

Testing Requirements

- SAT or ACT: Considered if submitted

- SAT Essay or ACT Writing: Optional

- SAT Subject Tests

- Scores Due in Office: August 15

Coursework Requirements

- Subject Required Years

- Foreign Language

- Social Studies

- Electives 5

Deadlines and Early Admissions

- Offered: Yes; Deadline: August 15; Notification: November 1

- Offered: Yes; Deadline: December 1; Notification: January 1

Admissions Office Information

- Address: 1000 Morris Avenue, Union, NJ 07083

- Phone: (908) 737-KEAN

- Fax: (908) 737-3415

- Email: [email protected]

Other Schools For You

If you're interested in Kean University, you'll probably be interested in these schools as well. We've divided them into 3 categories depending on how hard they are to get into, relative to Kean University.

Reach Schools: Harder to Get Into

These schools have higher average SAT scores than Kean University. If you improve your SAT score, you'll be competitive for these schools.

Same Level: Equally Hard to Get Into

If you're competitive for Kean University, these schools will offer you a similar chance of admission.

Safety Schools: Easier to Get Into

If you're currently competitive for Kean University, you should have no problem getting into these schools. If Kean University is currently out of your reach, you might already be competitive for these schools.

Data on this page is sourced from Peterson's Databases © 2023 (Peterson's LLC. All rights reserved.) as well as additional publicly available sources.

Kean University: Direct admissions details

Dec 26, 2023

Common App Direct Admissions is a program that offers college admission to qualified students. Participating colleges set a minimum, qualifying GPA for students in their home state. Common App then identifies students who meet those requirements using their Common App responses.

Some colleges may remove certain application requirements for Common App Direct Admissions recipients. If a college has any exceptions in their direct admissions offer, we will list them in this FAQ.

To accept your Common App Direct Admissions offer, all you need to do is submit your application to Kean University for free.

Direct Admissions requirements

Personal essay, teacher evaluations.

0 Required, 10 Optional

Program or major exceptions

While this offer will guarantee your admission to this college, it does not guarantee you will be admitted to the following programs:

- Advertising (B.F.A.)

- Architectural Studies (B.A.)

- Biology - Teacher Education (B.A.)

- Biology - STEM Teacher Education - 5yr. (B.S./M.A.)

- Biomedicine - STEM 4yr. (B.S.)

- Biotechnology/Molecular Biology - STEM 5yr. (B.S./M.S.)

- Chemistry - STEM Teacher Education - 5yr. (B.S./M.A.)

- Computational Science & Engineering - STEM 5yr. (B.S./M.S.)

- Fine Arts - Art Education (B.A.)

- Graphic Design (B.F.A.)

- Industrial Design (B.I.D.)

- Interior Design (B.F.A.)

- Mathematics - STEM Teacher Education - 5yr. (B.S./M.A.)

- Music (B.A.)

- Music Education (B.M.)

- Music Performance (B.M.)

- Studio Art (B.F.A.)

- Theatre (B.A.)

- Theatre Design & Technology (B.F.A.)

- Theatre Performance (B.F.A.)

- Theatre Performance - Musical Theatre Option (B.F.A.)

College-specific exceptions

Enrollment is contingent upon conviction and disciplinary history inquiry.

Fee waiver instructions

To ensure your application to this college is free, please indicate that you’d like to use a school-specific fee waiver.

- In the college’s application questions, find the “Do you intend to use one of these school-specific fee waivers?” question.

- Respond to this question with the “Common App Direct Admissions recipient” option.

The college will verify your eligibility status after you submit your application.

Additional resources

- Explore colleges profile – Learn more about what this college has to offer

- Financial aid – More information about this college’s financial aid offerings

- Direct Admissions FAQ – More information about the Common App Direct Admissions program

Kean University

- Overall Grade: B

- Rating: 3.75 out of 5 (1,614 reviews)

How to Apply to Kean University

Application Requirements

- High School GPA: Required

- High School Rank: Considered but not required

- High School Transcript: Required

- College Prep Courses: Required

- SAT/ACT: Considered but not required

- Recommendations: Considered but not required

Start Here Go Anywhere

Wenzhou-Kean University now offers 18 undergraduate programs and Kean University offers more than 50 undergraduate programs and more than 60 graduate options for study.

Kean University is a public university founded in 1855 in New Jersey. It enjoys a high reputation in the United States. Kean is a world-class, vibrant and diverse university.

100% of professional courses are introduced from Kean University, using original American and international textbooks.

Faculty come from 35 countries and regions around the world, and most have international teaching backgrounds and doctoral degrees.

Accounting (B.S.), Finance (B.S.), Economics (B.S.), Global Business (B.S.), Management (Business Analytics Option) (B.S.), Management (Supply Chain and Information Management Option) (B.S.), Marketing (B.S.), Accounting (M.S.), Global Management (M.B.A.)

English (B.A.), Communication (B.A.), Psychology (B.A.), Psychology (M.A.)

Chemistry (B.S.), Environmental Science (B.S.), Biology (Cell and Molecular Biology Option) (B.S.), Mathematical Sciences (Data Analytics Option) (B.A.), Computer Science (B.S.), Computer Information Systems (M.S.), Biotechnology Science (M.S.)

Architectural Studies (B.A.), Industrial Design (B.I.D.), Interior Design (B.F.A.), Architecture (M.Arch.)

Instruction and Curriculum (M.A.), Educational Administration (M.A.), Educational Leadership (Ed.D.)

Career prospects

88 Daxue Road, Ouhai District, Wenzhou, Zhejiang Province, China

Postal code: 325060

+86 577 5587 0000

Applying to Kean University

Acceptance Rate

Kean University's acceptance rate is 82.60% for 2023 admission. A total of 10,485 students applied and 8,661 were admitted to the school. Judging by the acceptance rate, it is relatively easy (higher than the national average) to get into Kean.

Kean's acceptance rate ranks 16th out of 52 New Jersey colleges with competitive admission.

The median SAT score is 1,020 and the median ACT score is 18 at Kean. Kean's SAT score ranks 21st out of 22 New Jersey colleges that consider SAT scores for admission.

To apply to Kean, a personal statement (or essay) is required; recommendations are not required but are considered. SAT and ACT scores are not required but are considered for admission. An English proficiency test score is also required.

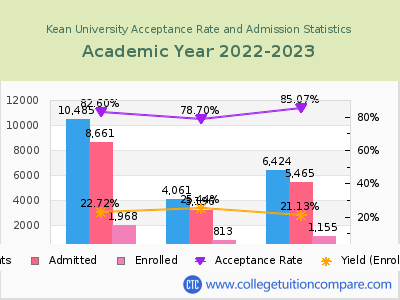

Admission Statistics

For the academic year 2022-23, the acceptance rate of Kean University is 82.60% and the yield (also known as enrollment rate) is 22.72%. 4,061 men and 6,424 women applied to Kean and 3,196 men and 5,465 women students were accepted.

Among them, 813 men and 1,155 women were enrolled in the school (Fall 2022). The following table and chart show the admission statistics including the number of applicants, acceptance rate, and yield at Kean.

Data source: IPEDS (Integrated Postsecondary Education Data System) (Last update: December 11, 2023)
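The acceptance rate and yield quoted above can be reproduced from the applicant counts in the text. The sketch below is only a worked check of that arithmetic; the variable and function names are illustrative and are not taken from IPEDS.

```python
# Counts quoted in the text for the 2022-23 academic year.
applied = {"men": 4061, "women": 6424}
admitted = {"men": 3196, "women": 5465}
enrolled = {"men": 813, "women": 1155}


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100 * part / whole, 2)


total_applied = sum(applied.values())    # 10,485 applicants
total_admitted = sum(admitted.values())  # 8,661 admitted
total_enrolled = sum(enrolled.values())  # 1,968 enrolled

# Acceptance rate: admitted students as a share of applicants.
acceptance_rate = percent(total_admitted, total_applied)

# Yield (enrollment rate): enrolled students as a share of admitted students.
yield_rate = percent(total_enrolled, total_admitted)

if __name__ == "__main__":
    print(f"Acceptance rate: {acceptance_rate}%")
    print(f"Yield: {yield_rate}%")
```

Note that yield is measured against admitted students, not against all applicants, which is why it is much lower than the acceptance rate.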

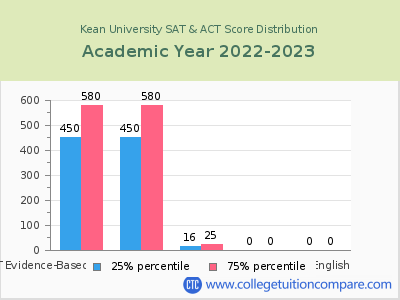

SAT and ACT Score Distribution

In 2023, 252 students (13% of enrolled) submitted SAT scores and 28 students (1%) submitted ACT scores when seeking degrees at Kean.

The median SAT score is 1,020, with median scores of 510 for SAT EBRW and 510 for SAT Math. The 75th percentile SAT EBRW (Evidence-Based Reading / Writing) score is 580 and the 25th percentile is 450. The SAT Math 75th percentile score is 580 and the 25th percentile is 450.

The median ACT composite score at Kean is 18, with a 75th percentile score of 25 and a 25th percentile score of 16.

The SAT and ACT scores at Kean University are close to the averages for similar colleges (SAT: 1,065, ACT: 21 - public doctoral / research university).

First-year Enrollment by Gender and Enrolled Type

For the academic year 2022-23, a total of 1,968 first-year students enrolled at Kean. Full-time students make up 96.75% and part-time students 3.25%.

By gender, men make up 41.31% of first-year students and women 58.69%.

The following table shows the first-year students by gender and enrolled type at Kean.

Application Requirements

Kean requires applicants to submit a high school GPA, a high school record (or transcript), completion of a college preparatory program, an English proficiency test, and a personal statement or essay.

SAT and ACT test scores are not required but are considered in the admission process at Kean. The English proficiency test is required.

The next table summarizes the application requirements for applying to Kean University.

For more admission information such as minimum GPA and deadlines, see its online application page.

General Admissions Information

The application fee required to apply to Kean University is $75 for both undergraduate and graduate programs. The following table provides general admission information for applying to Kean.

Kean University Admission Requirements 2022

Acceptance Rate

Kean University Admissions

Kean University has an acceptance rate of around 69%, meaning that about 69 of every 100 applicants are admitted. The SAT scores of students lie in the range of 920-1100, the ACT scores of admitted students lie in the range of 17-23, and average GPAs hover around 3. Applicants should keep in mind that the application deadline for Kean University is August 15, 2020. Kean University follows a simple application and admission process with four steps:

Step 1: Submitting the application

A candidate must first apply to the university. Interested candidates can apply online or call the university at (908) 737-5326. They can also visit the university's administration in person at 1000 Morris Avenue, Union, New Jersey 07083, or contact the admissions office directly by phone.

Step 2: Acceptance by KU

Once candidates have submitted their form and deposited the enrollment fee, they must submit certain documents to receive an admission decision. For Kean University, candidates have to submit the following documents:

- Transcripts: As an important part of the evaluation process, candidates have to submit the necessary high school and university transcripts through Parchment (or similar platforms).

- Essay: Also called the personal statement, it offers applicants a unique way to showcase their achievements and personality. Candidates are required to include personal experiences and anecdotes.

- Official Test Scores: Candidates can make a stronger claim for a seat at Kean University by submitting their official SAT or ACT scores. It is not a mandatory requirement, and not all students submit their scores.

The university may request additional documents or information from applicants once the review process is over.

Step 3: Confirm Your Attendance

In the third step, candidates confirm their attendance at KU. Candidates are required to complete the Financial Check-In process and select their housing. This includes reviewing and verifying the Summary of Accounts and Financial Aid (scholarships, fees, and tuition costs), choosing a lodging option, selecting a payment plan, and making the first payment. Candidates must complete the Math and English assessments before proceeding to register for classes.

Step 4: Registration for classes

Registration for courses is the next step once all the processes mentioned above are completed.

SAT Scores you need to get in

SAT Range The composite score range which the Institution consistently takes, below which admission should be considered a reach.

Applicants Submitting SAT Percentage of Students submitting SAT Scores.

SAT Reading 25th

SAT Math 25th

SAT Composite 25th

SAT Reading 75th

SAT Math 75th

SAT Composite 75th

Average SAT Score

When making admission decisions, Kean University also takes applicants' SAT scores into consideration. Candidates should note that SAT scores are compulsory to be considered for admission to the university. The average SAT score of students who get into the university is 1010. However, applicants with SAT scores of 920 or below may still be accepted in some cases. As many as 88% of applicants submit their SAT scores for admission consideration.

ACT Scores you need to get in

ACT Range The composite score range which the Institution consistently takes, below which admission should be considered a reach.

Applicants Submitting ACT Percentage of Students submitting ACT Scores.

ACT Math 25th

ACT English 25th

ACT Composite 25th

ACT Math 75th

ACT English 75th

ACT Composite 75th

Average ACT Score

According to the latest data, students accepted to Kean University have an average ACT score of 20, and most have composite ACT scores in the range of 17-23. A minimum ACT composite score of 17 is necessary to be considered for admission by the institution, and ACT scores are also compulsory for admission consideration. About 8% of applicants submit their ACT scores for admission to the university, as per the latest information.

Estimated GPA Requirements & Average GPA

Grade Points Average (GPA) The average high school GPA of the admitted students

A high GPA is required for a candidate to be accepted at Kean University. Generally, having a good GPA helps the applicant stand out from the crowd and also improves their chances to secure admission into any college/university of their choice. The candidates must also have average high school grades with a GPA score of at least 2.50. To note, the average high school GPA of the applicants who get into KU is 3. Candidates applying to Kean University should also have a good idea about the requirements for admission, including the necessary documents, and all the information that is considered while making admission decisions. The students must also submit several important documents to the university such as the Secondary school GPA, Secondary school record, and Admission test scores (SAT/ACT). Furthermore, the submission of other documents and details, including their Completion of college-preparatory program, is also recommended for admission.

Admission Requirements

What Really Matters When Applying

High School GPA

High School Rank

Neither required nor recommended

High School Transcript

College Prep Courses

Recommended

Recommendations

Kean University has a plethora of online and on-campus programs tailor-made for students to make their dream come true, be it in any kind of field. The requirements tend to differ as per the type of applicant, that is undergraduate (freshmen, international, transfer), graduate, and online programs.

Apart from important transcripts, the students may get asked to submit ACT/SAT scores, academic placement test scores, and other documents like essays, letters of recommendation, etc.

The application fee for any program is $75 (non-refundable).

Undergraduate Admission Requirements

Applicants need to submit the following, depending on their major and type of program.

For Freshmen students

Admission to most of the programs for freshmen is SAT-optional for students who have excellent academic performance to show through their transcripts. This condition stands irrespective of their standardized test scores. You need to include Kean's College Entrance Examination Board (CEEB) number 2517 while submitting your scores.

The following are required for eligibility:

It is mandatory to submit your official high school transcript.

You need to submit your recent score of ACT/SAT. The university will consider the highest-level score of the Evidence-Based Reading and Writing and Math sections on your SAT and the highest composite score of the ACT.

Recommended, but not compulsory:

Two (2) letters of recommendation from either a teacher, counselor, employer, and/or coach, etc.

A personal essay stating your educational and professional career goals.

List of your high school activities and/or work experiences, indicating any leadership positions held.

SAT-optional requirements : If you have a record of a high level of academic success in school, you may apply to the university through this window. Applicants are required to take the reading, writing, and math placement tests.

Applicants with a high school GPA of 3.0 or higher and a minimum of 16 college preparatory courses get the option of not submitting their SAT scores during the enrollment process. However, the following applicants need to submit their test scores:

NJCSTM majors (honors program)

Home-school students

International students

Merit Scholarship applicants

GED applicants

SAT test-optional applicants must mandatorily submit the following:

Admission requirements for Architecture, Design, Theatre, Music, and Fine Arts programs require additional documents. For more details, please visit the official link .

International students must fulfill additional requirements along with the standard ones. The benefit of applying from the SAT-optional window is not applicable to international students. Some of the additional requirements for International students are mentioned below:

Official high school transcripts evaluated and verified by the National Association of Credit Evaluation Services (NACES).

Standardized Testing Scores from the SAT or ACT. The university will consider the highest-level score of the Evidence-Based Reading and Writing and Math sections on your SAT and the highest composite score of the ACT.

English Language Proficiency Test scores from TOEFL (minimum: Internet-based: 79; Paper-based: 550) or IELTS (minimum: 6.0)

The applicants can get an exemption from the English Proficiency test by submitting documentation as evidence of completion of secondary schooling from an institution where English is the language of instruction.

Optional: Personal Statement, as specified by the program. It should include their academic and professional goals and how and why Kean University fits into their aspirations. Also, 2-3 Letters of Recommendation, as specified by the program.

Graduate Admission Requirements

The graduate programs by Kean University embrace dozens of disciplines from Criminal Justice, MBA, Educational Administration, Fine Arts, Speech-Language Pathology, Architecture, and many more. Each program has certain program-specific requirements, for which you need to visit the official university website.

The common requirements for Graduate Admission are as follows:

Filled application form.

Bachelor's degree (or foreign equivalent) from an accredited college or university

Cumulative GPA of 3.0 or higher (candidates with under a 3.0 will be considered based on the strength of the overall application).

Official copies of transcripts of previous institutions attended (this includes transcripts that reflect transfer credit). Transcripts must include proof of all courses, grades, and degrees. You can also include summer coursework, study abroad, or transfer coursework (if any).

Professional Resume or CV

Program-specific requirements

These include:

Personal Statement, as specified by the program. It should include their academic and professional goals and how and why Kean University fits into their aspirations.

2-3 Letters of Recommendation, as specified by the program.

Documented observational service hours.

Copy of license/certificates.

Standardized Test Scores.

For international students, test scores of TOEFL or IELTS.

A completed supplemental application providing additional information.

The following programs will require both a Centralized Application Service (CAS) application along with Kean University CAS Supplemental application :

Occupational Therapy (OTD) and Occupational Therapy (M.S)

Physical Therapy (DPT)

School and Clinical Psychology (Psy.D)

Speech-Language Pathology (M.A.)

Applicants are advised to take care to use the correct test codes when submitting test scores.

An English proficiency examination is required of all students who have a bachelor’s degree from an institution outside the U.S. in a country where English is not the principal language.

The minimum score required for TOEFL - Internet-based: 79; Paper-based: 550 and minimum test score for IELTS - 6.5.

The applicants can get an exemption from the English Proficiency test by submitting documentation as evidence of completion of a bachelor’s or master’s degree from an accredited U.S. college or university where English is the language of instruction.

Admission Deadlines

Application Deadline Deadline for application submissions. Please contact the school for more details.

Application Fee Application fees may vary by program and may be waived for certain students. Please check with the school.

Early Decision Deadline

Early Action Deadline

Offer Action Deadline

Offers Early Decision

Application Website

apply.kean.edu

Accepts Common App

Accepts Coalition App

Apart from submitting the documents and other required information, candidates should also pay an application fee of $75. The application deadline of the university depends on several factors. Fall applications, the most popular among students, usually open in September and may run until April. The September deadline usually pertains to early decision, while the final deadline is in April. Notably, to be eligible for scholarships, students might have to apply before the early deadline. This is why it is always recommended to apply early.

Acceptance Rate and Admission Statistics

Admission statistics.

Percent of Admitted Who Enrolled (Admission Yield)

Credits Accepted

Dual Credit

Credit for Life Experiences

Most universities in the US incentivize students by letting them earn university credit through certain courses and exams taken in high school. Several such programs are at students' disposal. Well-known ones include AP Credit, CLEP (College-Level Examination Program), and Dual Enrollment (also known as Dual Credit). However, there is no common policy; each college follows its own policy regarding these credits. These programs offer multiple benefits, such as a higher GPA, a shorter time to finish a degree, and increased chances of completing a degree. To pursue AP and CLEP credits, students are instructed to visit collegeboard.org and request that their scores be sent to the university of their choice.




- Open access

- Published: 03 June 2024

Applying large language models for automated essay scoring for non-native Japanese

- Wenchao Li 1 &

- Haitao Liu 2

Humanities and Social Sciences Communications volume 11, Article number: 723 (2024)


- Language and linguistics

Recent advancements in artificial intelligence (AI) have led to an increased use of large language models (LLMs) for language assessment tasks such as automated essay scoring (AES), automated listening tests, and automated oral proficiency assessments. The application of LLMs for AES in the context of non-native Japanese, however, remains limited. This study explores the potential of LLM-based AES by comparing the efficiency of different models, i.e. two conventional machine training technology-based methods (Jess and JWriter), two LLMs (GPT and BERT), and one Japanese local LLM (Open-Calm large model). To conduct the evaluation, a dataset consisting of 1400 story-writing scripts authored by learners with 12 different first languages was used. Statistical analysis revealed that GPT-4 outperforms Jess and JWriter, BERT, and the Japanese language-specific trained Open-Calm large model in terms of annotation accuracy and predicting learning levels. Furthermore, by comparing 18 different models that utilize various prompts, the study emphasized the significance of prompts in achieving accurate and reliable evaluations using LLMs.


Conventional machine learning technology in AES

AES has experienced significant growth with the advancement of machine learning technologies in recent decades. In the earlier stages of AES development, conventional machine learning-based approaches were commonly used. These approaches involved the following procedures: (a) A dataset of essays is fed to the machine learning system. The dataset serves as the basis for training the model and establishing patterns and correlations between linguistic features and human ratings. (b) The machine learning model is trained using linguistic features that best represent human ratings and can effectively discriminate learners’ writing proficiency. These features include lexical richness (Lu, 2012; Kyle and Crossley, 2015; Kyle et al. 2021), syntactic complexity (Lu, 2010; Liu, 2008), and text cohesion (Crossley and McNamara, 2016), among others. Conventional machine learning approaches in AES require human intervention, such as manual correction and annotation of essays. This human involvement is necessary to create a labeled dataset for training the model. Several AES systems have been developed using conventional machine learning technologies. These include the Intelligent Essay Assessor (Landauer et al. 2003), the e-rater engine by Educational Testing Service (Attali and Burstein, 2006; Burstein, 2003), MyAccess with the IntelliMetric scoring engine by Vantage Learning (Elliot, 2003), and the Bayesian Essay Test Scoring system (Rudner and Liang, 2002). These systems have played a significant role in automating the essay scoring process and providing quick and consistent feedback to learners. However, conventional machine learning approaches rely on predetermined linguistic features and often require manual intervention, making them less flexible and potentially limiting their generalizability to different contexts.

In the context of the Japanese language, conventional machine learning-incorporated AES tools include Jess (Ishioka and Kameda, 2006) and JWriter (Lee and Hasebe, 2017). Jess assesses essays by deducting points from the perfect score, utilizing the Mainichi Daily News newspaper as a database. The evaluation criteria employed by Jess encompass various aspects, such as rhetorical elements (e.g., reading comprehension, vocabulary diversity, percentage of complex words, and percentage of passive sentences), organizational structures (e.g., forward and reverse connection structures), and content analysis (e.g., latent semantic indexing). JWriter employs linear regression analysis to assign weights to various measurement indices, such as average sentence length and total number of characters. These weighted indices are then combined to derive the overall score. A pilot study involving the Jess model was conducted on 1320 essays at different proficiency levels, including primary, intermediate, and advanced. However, the results indicated that the Jess model failed to significantly distinguish between these essay levels. Out of the 16 measures used, four measures, namely median sentence length, median clause length, median number of phrases, and maximum number of phrases, did not show statistically significant differences between the levels. Additionally, two measures exhibited between-level differences but lacked linear progression: the number of attributive declined words and the Kanji/kana ratio. On the other hand, the remaining measures, including maximum sentence length, maximum clause length, number of attributive conjugated words, maximum number of consecutive infinitive forms, maximum number of conjunctive-particle clauses, k characteristic value, percentage of big words, and percentage of passive sentences, demonstrated statistically significant between-level differences and displayed linear progression.
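The weighted-index idea behind JWriter can be sketched in a few lines. This is only an illustration of combining indices linearly: the two indices are the ones named above, but the weights, the intercept, and the function names are hypothetical placeholders, not JWriter's fitted coefficients.

```python
# Hypothetical coefficients for illustration only; JWriter's actual
# regression weights are not given in the text.
WEIGHTS = {"avg_sentence_length": 0.05, "total_chars": 0.01}
INTERCEPT = 1.0


def extract_indices(essay: str) -> dict:
    """Compute two simple measurement indices from a Japanese essay."""
    # Split on the Japanese full stop and drop empty pieces.
    sentences = [s for s in essay.split("。") if s.strip()]
    total_chars = sum(len(s) for s in sentences)
    avg_len = total_chars / len(sentences) if sentences else 0.0
    return {"avg_sentence_length": avg_len, "total_chars": float(total_chars)}


def linear_score(indices: dict, weights=WEIGHTS, intercept=INTERCEPT) -> float:
    """Combine the indices into one score as a weighted sum plus intercept."""
    return intercept + sum(weights[name] * indices[name] for name in weights)


if __name__ == "__main__":
    sample = "私は本を読む。彼は走る。"
    print(linear_score(extract_indices(sample)))
```

Because the score is a fixed linear function of surface indices, a learner who knows the weights can inflate the score by, for example, writing longer sentences, which is the manipulation risk discussed in the text.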

Both Jess and JWriter exhibit notable limitations, including the manual selection of feature parameters and weights, which can introduce biases into the scoring process. The reliance on human annotators to label non-native language essays also introduces potential noise and variability in the scoring. Furthermore, an important concern is the possibility of system manipulation and cheating by learners who are aware of the regression equation utilized by the models (Hirao et al. 2020 ). These limitations emphasize the need for further advancements in AES systems to address these challenges.

Deep learning technology in AES

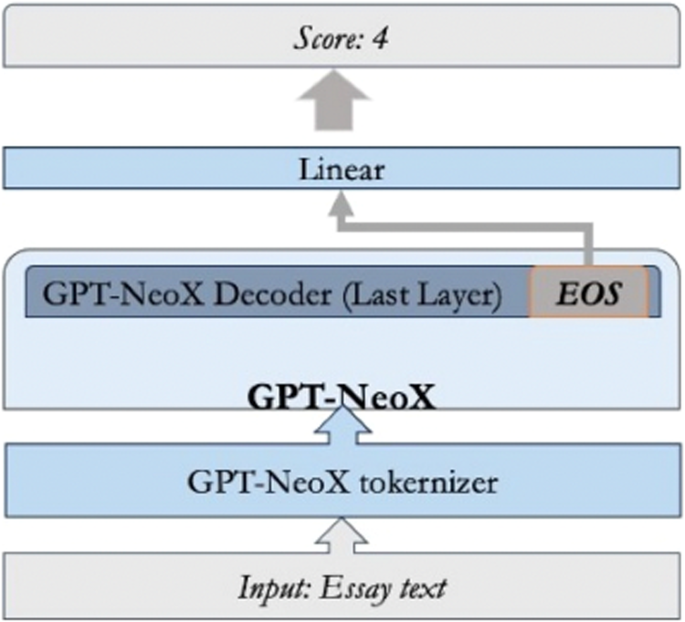

Deep learning has emerged as one of the approaches for improving the accuracy and effectiveness of AES. Deep learning-based AES methods utilize artificial neural networks that mimic the human brain’s functioning through layered algorithms and computational units. Unlike conventional machine learning, deep learning autonomously learns from the environment and past errors without human intervention. This enables deep learning models to establish nonlinear correlations, resulting in higher accuracy. Recent advancements in deep learning have led to the development of transformers, which are particularly effective in learning text representations. Noteworthy examples include bidirectional encoder representations from transformers (BERT) (Devlin et al. 2019 ) and the generative pretrained transformer (GPT) (OpenAI).

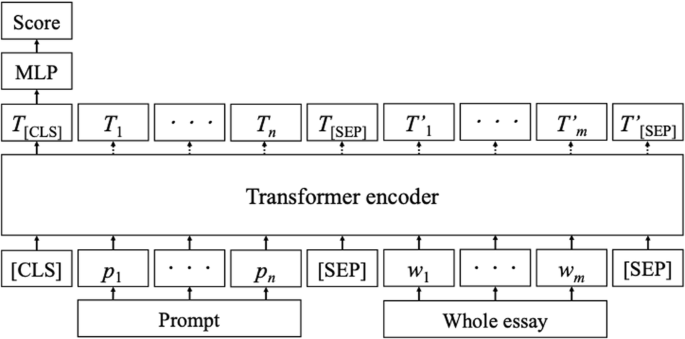

BERT is a language representation model that utilizes a transformer architecture and is trained on two tasks: masked language modeling and next-sentence prediction (Hirao et al. 2020; Vaswani et al. 2017). In the context of AES, BERT follows specific procedures, as illustrated in Fig. 1: (a) the tokenized prompts and essays are taken as input; (b) special tokens, such as [CLS] and [SEP], are added to mark the beginning and separation of prompts and essays; (c) the transformer encoder processes the prompt and essay sequences, resulting in hidden layer sequences; (d) the hidden layers corresponding to the [CLS] tokens (T[CLS]) represent distributed representations of the prompts and essays; and (e) a multilayer perceptron uses these distributed representations as input to obtain the final score (Hirao et al. 2020).

Fig. 1: AES system with BERT (Hirao et al. 2020).
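Steps (a) and (b) of this pipeline can be sketched without any model code. The layout below follows the usual BERT sentence-pair convention ([CLS] prompt [SEP] essay [SEP]); whether Hirao et al. use exactly this layout is an assumption, as are the function name, the 512-token limit, and the choice to truncate the essay rather than the prompt.

```python
def build_bert_input(prompt_tokens, essay_tokens, max_len=512):
    """Assemble a [CLS] prompt [SEP] essay [SEP] sequence with segment ids.

    max_len=512 is an assumed limit; the essay is truncated to fit.
    """
    # Three positions are reserved for [CLS] and the two [SEP] tokens.
    budget = max_len - 3 - len(prompt_tokens)
    if budget < 0:
        raise ValueError("prompt alone exceeds the maximum length")
    essay = list(essay_tokens)[:budget]
    tokens = ["[CLS]"] + list(prompt_tokens) + ["[SEP]"] + essay + ["[SEP]"]
    # Segment 0 covers [CLS], the prompt and its [SEP]; segment 1 covers the rest.
    segment_ids = [0] * (len(prompt_tokens) + 2) + [1] * (len(essay) + 1)
    return tokens, segment_ids


if __name__ == "__main__":
    toks, segs = build_bert_input(["物語", "を", "書く"], ["ピクニック", "に", "行った"])
    print(toks)
    print(segs)
```

In a full system, the encoder's hidden state at the [CLS] position would then be passed to a multilayer perceptron to produce the score, as in steps (c) to (e).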

The training of BERT using a substantial amount of sentence data through the Masked Language Model (MLM) allows it to capture contextual information within the hidden layers. Consequently, BERT is expected to be capable of identifying artificial essays as invalid and assigning them lower scores (Mizumoto and Eguchi, 2023). In the context of AES for nonnative Japanese learners, Hirao et al. (2020) combined the long short-term memory (LSTM) model proposed by Hochreiter and Schmidhuber (1997) with BERT to develop a tailored automated essay scoring system. The findings of their study revealed that the BERT model outperformed both the conventional machine learning approach utilizing character-type features such as “kanji” and “hiragana”, as well as the standalone LSTM model. Takeuchi et al. (2021) presented an approach to Japanese AES that eliminates the requirement for pre-scored essays by relying solely on reference texts or a model answer for the essay task. They investigated multiple similarity evaluation methods, including frequency of morphemes, idf values calculated on Wikipedia, LSI, LDA, word-embedding vectors, and document vectors produced by BERT. The experimental findings revealed that the method utilizing the frequency of morphemes with idf values exhibited the strongest correlation with human-annotated scores across different essay tasks. The utilization of BERT in AES encounters several limitations. First, essays often exceed the model’s maximum length limit. Second, only score labels are available for training, which restricts access to additional information.
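The morpheme-frequency-with-idf similarity mentioned above can be illustrated with a small, self-contained sketch. It is not the implementation of Takeuchi et al.: the smoothed idf formula, the cosine measure, the function names, and the toy background corpus (standing in for Wikipedia) are all assumptions, and the essays are assumed to be already segmented into morphemes.

```python
import math
from collections import Counter


def build_idf(background_docs):
    """Return (idf table, default idf for unseen morphemes), add-one smoothed."""
    n_docs = len(background_docs)
    doc_freq = Counter()
    for doc in background_docs:
        doc_freq.update(set(doc))  # count each morpheme once per document
    idf = {m: math.log((1 + n_docs) / (1 + df)) + 1.0 for m, df in doc_freq.items()}
    default_idf = math.log(1 + n_docs) + 1.0
    return idf, default_idf


def weighted_vector(morphemes, idf, default_idf):
    """Map each morpheme to its frequency multiplied by its idf weight."""
    counts = Counter(morphemes)
    return {m: c * idf.get(m, default_idf) for m, c in counts.items()}


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(m, 0.0) for m, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def similarity_to_reference(essay, reference, background_docs):
    """Score an essay by its idf-weighted similarity to a model answer."""
    idf, default_idf = build_idf(background_docs)
    return cosine(weighted_vector(essay, idf, default_idf),
                  weighted_vector(reference, idf, default_idf))


if __name__ == "__main__":
    background = [["犬", "が", "走る"], ["猫", "が", "寝る"]]
    print(similarity_to_reference(["猫", "が", "走る"], ["犬", "が", "走る"], background))
```

The appeal of this family of methods is that only a reference text is needed, so no pre-scored essays are required for training.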

Mizumoto and Eguchi ( 2023 ) were pioneers in employing the GPT model for AES in non-native English writing. Their study focused on evaluating the accuracy and reliability of AES using the GPT-3 text-davinci-003 model, analyzing a dataset of 12,100 essays from the corpus of nonnative written English (TOEFL11). The findings indicated that AES utilizing the GPT-3 model exhibited a certain degree of accuracy and reliability. They suggest that GPT-3-based AES systems hold the potential to provide support for human ratings. However, applying GPT model to AES presents a unique natural language processing (NLP) task that involves considerations such as nonnative language proficiency, the influence of the learner’s first language on the output in the target language, and identifying linguistic features that best indicate writing quality in a specific language. These linguistic features may differ morphologically or syntactically from those present in the learners’ first language, as observed in (1)–(3).

(1) Isolating

我-送了-他-一本-书

Wǒ-sòngle-tā-yī běn-shū

1sg-give.past-him-one.cl-book

“I gave him a book.”

(2) Agglutinative

彼-に-本-を-あげ-まし-た

Kare-ni-hon-o-age-mashi-ta

3sg-dat-book-acc-give.honorification.past

(3) Inflectional

give, give-s, gave, given, giving

Additionally, the morphological agglutination and subject-object-verb (SOV) order in Japanese, along with its idiomatic expressions, pose additional challenges for applying language models in AES tasks (4).

(4) 足-が 棒-に なり-ました

Ashi-ga bō-ni nari-mashita

leg-nom stick-dat become-past

“My leg became like a stick (I am extremely tired).”

The example sentence demonstrates the morpho-syntactic structure of Japanese and the presence of an idiomatic expression. In this sentence, the verb “なる” (naru), meaning “to become”, appears at the end of the sentence. The verb stem “なり” (nari) has morphemes attached that indicate honorification (“ます”, masu) and tense (“た”, ta), showcasing agglutination. While the sentence can be literally translated as “my leg became like a stick”, it carries an idiomatic interpretation that implies “I am extremely tired”.

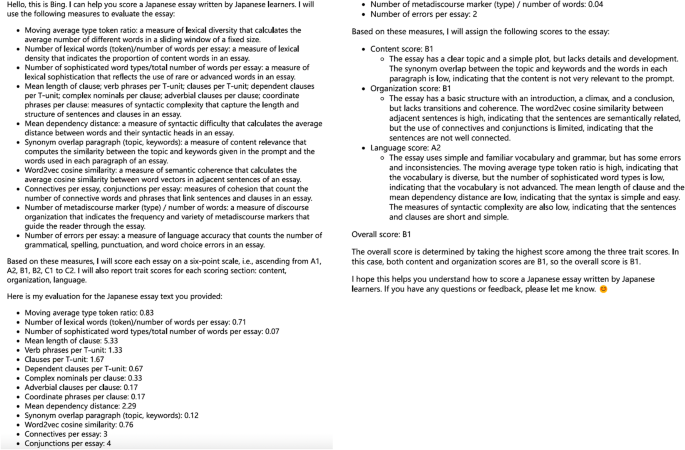

To overcome this issue, CyberAgent Inc. (2023) has developed the Open-Calm series of language models specifically designed for Japanese. Open-Calm consists of pre-trained models available in various sizes, such as Small, Medium, Large, and 7b. Figure 2 depicts the fundamental structure of the Open-Calm model. A key feature of this architecture is the incorporation of the LoRA adapter and the GPT-NeoX framework, which can enhance its language processing capabilities.

Fig. 2: GPT-NeoX model architecture (Okgetheng and Takeuchi 2024).

In a recent study, Okgetheng and Takeuchi (2024) assessed the efficacy of Open-Calm language models in grading Japanese essays. The research utilized a dataset of approximately 300 essays, which were annotated by native Japanese educators. The findings of the study demonstrate the considerable potential of Open-Calm language models in automated Japanese essay scoring. Specifically, among the Open-Calm family, the Open-Calm Large model (referred to as OCLL) exhibited the highest performance. However, it is important to note that, as of the current date, the Open-Calm Large model does not offer public access to its server. Consequently, users are required to independently deploy and operate the environment for OCLL. In order to utilize OCLL, users must have a PC equipped with an NVIDIA GeForce RTX 3060 (8 or 12 GB VRAM).

In summary, while the potential of LLMs in automated scoring of nonnative Japanese essays has been demonstrated in two studies—BERT-driven AES (Hirao et al. 2020 ) and OCLL-based AES (Okgetheng and Takeuchi, 2024 )—the number of research efforts in this area remains limited.

Another significant challenge in applying LLMs to AES lies in prompt engineering and ensuring its reliability and effectiveness (Brown et al. 2020 ; Rae et al. 2021 ; Zhang et al. 2021 ). Various prompting strategies have been proposed, such as the zero-shot chain of thought (CoT) approach (Kojima et al. 2022 ), which involves manually crafting diverse and effective examples. However, manual efforts can lead to mistakes. To address this, Zhang et al. ( 2021 ) introduced an automatic CoT prompting method called Auto-CoT, which demonstrates matching or superior performance compared to the CoT paradigm. Another prompt framework is trees of thoughts, enabling a model to self-evaluate its progress at intermediate stages of problem-solving through deliberate reasoning (Yao et al. 2023 ).

Beyond linguistic studies, there has been a noticeable increase in the number of foreign workers in Japan and Japanese learners worldwide (Ministry of Health, Labor, and Welfare of Japan, 2022 ; Japan Foundation, 2021 ). However, existing assessment methods, such as the Japanese Language Proficiency Test (JLPT), J-CAT, and TTBJ Footnote 1 , primarily focus on reading, listening, vocabulary, and grammar skills, neglecting the evaluation of writing proficiency. As the number of workers and language learners continues to grow, there is a rising demand for an efficient AES system that can reduce costs and time for raters and be utilized for employment, examinations, and self-study purposes.

This study aims to explore the potential of LLM-based AES by comparing the effectiveness of five models: two LLMs (GPT Footnote 2 and BERT), one Japanese local LLM (OCLL), and two conventional machine learning-based methods (linguistic feature-based scoring tools - Jess and JWriter).

The research questions addressed in this study are as follows:

To what extent do the LLM-driven AES and linguistic feature-based AES, when used as automated tools to support human rating, accurately reflect test takers’ actual performance?

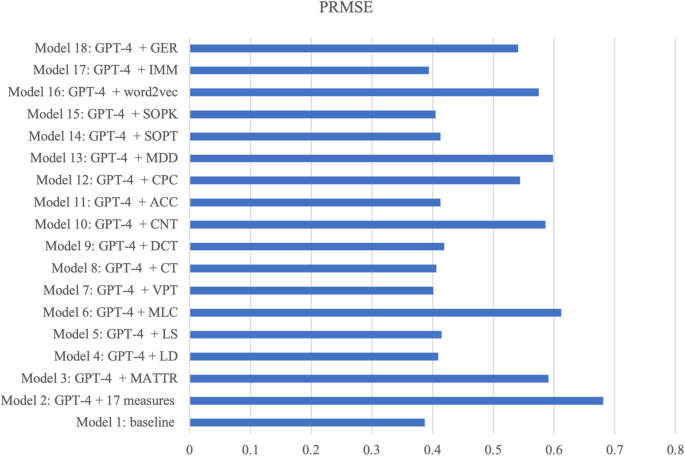

What influence does the prompt have on the accuracy and performance of LLM-based AES methods?

The subsequent sections of the manuscript cover the methodology, including the assessment measures for nonnative Japanese writing proficiency, criteria for prompts, and the dataset. The evaluation section focuses on the analysis of annotations and rating scores generated by LLM-driven and linguistic feature-based AES methods.

Methodology

The dataset utilized in this study was obtained from the International Corpus of Japanese as a Second Language (I-JAS) Footnote 3 . This corpus consisted of 1000 participants who represented 12 different first languages. For the study, the participants were given a story-writing task on a personal computer. They were required to write two stories based on the 4-panel illustrations titled “Picnic” and “The key” (see Appendix A). Background information for the participants was provided by the corpus, including their Japanese language proficiency levels assessed through two online tests: J-CAT and SPOT. These tests evaluated their reading, listening, vocabulary, and grammar abilities. The learners’ proficiency levels were categorized into six levels aligned with the Common European Framework of Reference for Languages (CEFR) and the Reference Framework for Japanese Language Education (RFJLE): A1, A2, B1, B2, C1, and C2. According to Lee et al. ( 2015 ), there is a high level of agreement (r = 0.86) between the J-CAT and SPOT assessments, indicating that the proficiency certifications provided by J-CAT are consistent with those of SPOT. However, it is important to note that the scores of J-CAT and SPOT do not have a one-to-one correspondence. In this study, the J-CAT scores were used as a benchmark to differentiate learners of different proficiency levels. A total of 1400 essays were utilized, representing the beginner (aligned with A1), A2, B1, B2, C1, and C2 levels based on the J-CAT scores. Table 1 provides information about the learners’ proficiency levels and their corresponding J-CAT and SPOT scores.

Of the 1400 essays collected from the story-writing tasks, 714 were used to evaluate the reliability of the LLM-based AES method, while the remaining 686 were designated as development data to assess the LLM-based AES’s capability to distinguish participants with varying proficiency levels. The GPT 4 API was used in this study. A detailed explanation of the prompt-assessment criteria is provided in the Prompt section. All essays were sent to the model for measurement and scoring.

Measures of writing proficiency for nonnative Japanese

Japanese exhibits a morphologically agglutinative structure where morphemes are attached to the word stem to convey grammatical functions such as tense, aspect, voice, and honorifics, e.g. (5).

食べ-させ-られ-まし-た-か

tabe-sase-rare-mashi-ta-ka

[eat (stem)-causative-passive voice-honorification-tense.past-question marker]

Japanese employs nine case particles to indicate grammatical functions, including the nominative case particle が (ga), the accusative case particle を (o), the genitive case particle の (no), the dative case particle に (ni), the locative/instrumental case particle で (de), the ablative case particle から (kara), the directional case particle へ (e), and the comitative case particle と (to). The agglutinative nature of the language, combined with the case particle system, provides an efficient means of distinguishing between active and passive voice, either through morphemes or case particles, e.g. 食べる taberu “eat-conclusive” (active voice); 食べられる taberareru “eat-conclusive” (passive voice). In the active voice, “パン を 食べる” (pan o taberu) translates to “to eat bread”. In the passive voice, it becomes “パン が 食べられた” (pan ga taberareta), which means “(the) bread was eaten”. Additionally, different conjugations of the same lemma are counted as one type in order to ensure a comprehensive assessment of the language features. For example, 食べる taberu “eat-conclusive”, 食べている tabeteiru “eat-progressive”, and 食べた tabeta “eat-past” are counted as one type.

To incorporate these features, previous research (Suzuki, 1999; Watanabe et al. 1988; Ishioka, 2001; Ishioka and Kameda, 2006; Hirao et al. 2020) has identified complexity, fluency, and accuracy as crucial factors for evaluating writing quality. These criteria are assessed through various aspects, including lexical richness (lexical density, diversity, and sophistication), syntactic complexity, and cohesion (Kyle et al. 2021; Mizumoto and Eguchi, 2023; Ure, 1971; Halliday, 1985; Barkaoui and Hadidi, 2020; Zenker and Kyle, 2021; Kim et al. 2018; Lu, 2017; Ortega, 2015). Therefore, this study proposes five scoring categories: lexical richness, syntactic complexity, cohesion, content elaboration, and grammatical accuracy. A total of 16 measures were employed to capture these categories. The calculation process and specific details of these measures can be found in Table 2.

The T-unit, first introduced by Hunt (1966), is a measure used for evaluating speech and composition. It serves as an indicator of syntactic development and represents the shortest unit into which a piece of discourse can be divided without leaving any sentence fragments. In the context of Japanese language assessment, Sakoda and Hosoi (2020) used the T-unit as the basic unit to assess the accuracy and complexity of Japanese learners’ speaking and storytelling. T-units in Japanese are counted according to the following principles:

A single main clause constitutes 1 T-unit, regardless of the presence or absence of dependent clauses, e.g. (6).

ケンとマリはピクニックに行きました (main clause): 1 T-unit.

If a sentence contains a main clause along with subclauses, each subclause is considered part of the same T-unit, e.g. (7).

天気が良かったので (subclause)、ケンとマリはピクニックに行きました (main clause): 1 T-unit.

In the case of coordinate clauses, where multiple clauses are connected, each coordinated clause is counted separately. Thus, a sentence with coordinate clauses may have 2 T-units or more, e.g. (8).

ケンは地図で場所を探して (coordinate clause)、マリはサンドイッチを作りました (coordinate clause): 2 T-units.
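The three counting principles above can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: it assumes each sentence has already been segmented into clauses and labeled by hand or by a parser, and the label names (`main`, `sub`, `coordinate`) are hypothetical.

```python
from typing import List

# Hypothetical clause labels; the source does not prescribe an annotation scheme.
# Main and coordinate clauses each open a T-unit; subclauses do not.
T_UNIT_HEADS = {"main", "coordinate"}


def count_t_units(clause_labels: List[str]) -> int:
    """Count T-units in one sentence from pre-annotated clause labels.

    A subclause is absorbed into the T-unit of the clause it attaches to,
    so only main and coordinate clauses are counted.
    """
    return sum(1 for label in clause_labels if label in T_UNIT_HEADS)


# Clause labels for examples (6), (7), and (8) in the text.
example_6 = ["main"]                      # main clause only
example_7 = ["sub", "main"]               # subclause + main clause
example_8 = ["coordinate", "coordinate"]  # two coordinated clauses

print(count_t_units(example_6), count_t_units(example_7), count_t_units(example_8))
```

The clause segmentation itself is the hard part in practice and is left outside this sketch.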

Lexical diversity refers to the range of words used within a text (Engber, 1995; Kyle et al. 2021) and is considered a useful measure of the breadth of vocabulary in Ln production (Jarvis, 2013a, 2013b).

The type/token ratio (TTR) is widely recognized as a straightforward measure for calculating lexical diversity and has been employed in numerous studies. These studies have demonstrated a strong correlation between TTR and other methods of measuring lexical diversity (e.g., Bentz et al. 2016; Čech and Miroslav, 2018; Çöltekin and Taraka, 2018). TTR is computed as the number of unique words (types) divided by the total number of words (tokens) in a given text. Given that the length of learners’ written texts can vary, this study employs the moving average type-token ratio (MATTR) to mitigate the influence of text length. MATTR is calculated using a 50-word moving window. Initially, a TTR is determined for words 1–50 in an essay, followed by words 2–51, 3–52, and so on until the end of the essay is reached (Díez-Ortega and Kyle, 2023). The final MATTR scores were obtained by averaging the TTR scores for all 50-word windows. The following formula was employed to derive MATTR:

\({\rm{MATTR}}({\rm{W}})=\frac{{\sum }_{{\rm{i}}=1}^{{\rm{N}}-{\rm{W}}+1}{{\rm{F}}}_{{\rm{i}}}}{{\rm{W}}({\rm{N}}-{\rm{W}}+1)}\)

Here, N refers to the number of tokens in the text. W is the selected window size in tokens (W < N), set to 50 in this study. \({F}_{i}\) is the number of types in the i-th window. The \({\rm{MATTR}}({\rm{W}})\) is the mean of a series of type-token ratios (TTRs) based on the word form for all windows. It is expected that individuals with higher language proficiency will produce texts with greater lexical diversity, as indicated by higher MATTR scores.
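As an illustration of the formula, the following Python sketch computes MATTR over a list of already-tokenized word forms. Tokenization of Japanese text is assumed to have been done beforehand. The fallback to a plain TTR for texts shorter than the window is an assumption of this sketch, since the source only defines the case W < N.

```python
from typing import Sequence


def mattr(tokens: Sequence[str], window: int = 50) -> float:
    """Moving-average type-token ratio over all windows of `window` tokens.

    Implements sum(F_i) / (W * (N - W + 1)), where F_i is the number of
    distinct word forms in the i-th window.
    """
    n = len(tokens)
    if n == 0:
        raise ValueError("tokens must not be empty")
    if window <= 0:
        raise ValueError("window must be positive")
    if n < window:
        # Assumption (not from the source): fall back to plain TTR
        # when the text is shorter than one window.
        return len(set(tokens)) / n
    n_windows = n - window + 1
    total_types = sum(len(set(tokens[i:i + window])) for i in range(n_windows))
    return total_types / (window * n_windows)


# Toy input with a window of 2 tokens: the windows are
# (a, a), (a, b), (b, b), which contain 1, 2, and 1 types.
toy = ["a", "a", "b", "b"]
print(mattr(toy, window=2))
```

Because every window has the same length, averaging the per-window TTRs and dividing the summed type counts by W(N − W + 1) give the same value.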

Lexical density was captured by the ratio of the number of lexical words to the total number of words (Lu, 2012). Lexical sophistication refers to the use of advanced vocabulary, often evaluated through word frequency indices (Crossley et al. 2013; Haberman, 2008; Kyle and Crossley, 2015; Laufer and Nation, 1995; Lu, 2012; Read, 2000). In the context of writing, lexical sophistication can be interpreted as vocabulary breadth, which entails the appropriate usage of vocabulary items across various lexico-grammatical contexts and registers (Garner et al. 2019; Kim et al. 2018; Kyle et al. 2018). In Japanese specifically, words are considered lexically sophisticated if they are not included in the “Japanese Education Vocabulary List Ver 1.0” (see Footnote 4). Consequently, lexical sophistication was calculated as the number of sophisticated word types relative to the total number of words per essay. Furthermore, it has been suggested that, in Japanese writing, sentences should ideally be no longer than 40 to 50 characters, as this promotes readability. Therefore, the median and maximum sentence length can be considered useful indices for assessment (Ishioka and Kameda, 2006).
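The two ratios described above can be sketched as follows. The sketch assumes the essay has already been morphologically analyzed into (lemma, part-of-speech) pairs. The tag names, the set of tags treated as lexical words, and the tiny `basic_vocab` set standing in for the vocabulary list are all hypothetical placeholders, not the study's resources.

```python
from typing import List, Set, Tuple

Token = Tuple[str, str]  # (lemma, coarse POS tag); the tag set is hypothetical

# Assumption: nouns, verbs, adjectives, and adverbs count as lexical words.
CONTENT_POS: Set[str] = {"NOUN", "VERB", "ADJ", "ADV"}


def lexical_density(tokens: List[Token]) -> float:
    """Number of lexical (content) words divided by the total number of words."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    lexical = sum(1 for _, pos in tokens if pos in CONTENT_POS)
    return lexical / len(tokens)


def lexical_sophistication(tokens: List[Token], basic_vocab: Set[str]) -> float:
    """Number of word types absent from the reference list, per total words."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    sophisticated_types = {lemma for lemma, _ in tokens if lemma not in basic_vocab}
    return len(sophisticated_types) / len(tokens)


# Toy essay: "パン を 食べる" plus one word outside the placeholder list.
essay = [("パン", "NOUN"), ("を", "PARTICLE"), ("食べる", "VERB"), ("咀嚼", "NOUN")]
basic_vocab = {"パン", "を", "食べる"}  # placeholder for the real vocabulary list

print(lexical_density(essay), lexical_sophistication(essay, basic_vocab))
```

Counting lemmas rather than surface forms follows the text's convention that different conjugations of the same lemma are one type.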

Syntactic complexity was assessed based on several measures, including the mean length of clauses, verb phrases per T-unit, clauses per T-unit, dependent clauses per T-unit, complex nominals per clause, adverbial clauses per clause, coordinate phrases per clause, and mean dependency distance (MDD). The MDD reflects the distance between the governor and dependent positions in a sentence. A larger dependency distance indicates a higher cognitive load and greater complexity in syntactic processing (Liu, 2008; Liu et al. 2017). The MDD has been established as an efficient metric for measuring syntactic complexity (Jiang, Quyang, and Liu, 2019; Li and Yan, 2021). To calculate the MDD, the words in a sentence are assumed to be numbered in linear order, such as W1 … Wi … Wn. In any dependency relationship between words Wa and Wb, where Wa is the governor and Wb is the dependent, the dependency distance is the difference between their position numbers. The MDD of the entire sentence was obtained by averaging the absolute values of these differences (governor position minus dependent position):

MDD = \(\frac{1}{n}{\sum }_{i=1}^{n}|{\rm{D}}{{\rm{D}}}_{i}|\)

In this formula, \(n\) represents the number of words in the sentence, and \({DD}_{i}\) is the dependency distance of the \({i}^{{th}}\) dependency relationship of a sentence. For example, the sentence ‘Mary-ga-John-ni-keshigomu-o-watashita’ is annotated as [Mary-top-John-dat-eraser-acc-give-past], and its MDD would be 2. Table 3 provides the CSV file as a prompt for GPT 4.
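A short Python sketch makes the calculation concrete. It takes a list of (governor position, dependent position) pairs and averages the absolute differences over the dependency relations supplied. The parse used for the example sentence is an assumption of this sketch: each noun plus its particle is treated as one unit, and all three units depend directly on the verb. Under that assumption the result matches the value of 2 stated in the text.

```python
from typing import List, Tuple

Dependency = Tuple[int, int]  # (governor position, dependent position), 1-based


def mean_dependency_distance(dependencies: List[Dependency]) -> float:
    """Mean of |governor position - dependent position| over all relations."""
    if not dependencies:
        raise ValueError("at least one dependency relation is required")
    total = sum(abs(governor - dependent) for governor, dependent in dependencies)
    return total / len(dependencies)


# Assumed parse of 'Mary-ga John-ni keshigomu-o watashita':
# positions 1..3 are the three arguments, position 4 is the verb (governor).
mary_sentence = [(4, 1), (4, 2), (4, 3)]

print(mean_dependency_distance(mary_sentence))  # distances 3, 2, 1 -> mean 2.0
```

A different segmentation (for example, treating particles as separate words) would give a different value, so the unit of analysis has to be fixed before comparing MDD across essays.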

Cohesion (semantic similarity) and content elaboration aim to capture the ideas presented in test takers’ essays. Cohesion was assessed using three measures: synonym overlap/paragraph (topic), synonym overlap/paragraph (keywords), and word2vec cosine similarity. Content elaboration and development were measured as the number of metadiscourse markers (type)/number of words. To capture content closely, this study proposed a novel distance-based representation that encodes the cosine distance between the i-vectors of the learner’s essay and of the essay task (topic and keywords). The learner’s essay is decoded into a word sequence and aligned to the essay task’s topic and keywords for log-likelihood measurement. The cosine distance reveals the content elaboration score of the learner’s essay. The cosine similarity between the target and reference vectors is given in (11), where \(({L}_{1},\ldots ,{L}_{n})\) and \(({N}_{1},\ldots ,{N}_{n})\) are the vectors representing the learner’s essay and the task’s topic and keywords, respectively. The content elaboration distance between L and N was calculated as follows:

\(\cos \left(\theta \right)=\frac{{\rm{L}}\,\cdot\, {\rm{N}}}{\left|{\rm{L}}\right|{\rm{|N|}}}=\frac{\mathop{\sum }\nolimits_{i=1}^{n}{L}_{i}{N}_{i}}{\sqrt{\mathop{\sum }\nolimits_{i=1}^{n}{L}_{i}^{2}}\sqrt{\mathop{\sum }\nolimits_{i=1}^{n}{N}_{i}^{2}}}\)

A high similarity value indicates a low difference between the two recognition outcomes, which in turn suggests a high level of proficiency in content elaboration.
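Equation (11) can be sketched directly in Python. The sketch assumes the essay and the task description have already been converted into equal-length numeric vectors. How those vectors are produced (i-vectors, word2vec averages, or otherwise) is outside its scope, and the toy vectors are invented purely for illustration.

```python
import math
from typing import Sequence


def cosine_similarity(l_vec: Sequence[float], n_vec: Sequence[float]) -> float:
    """Cosine of the angle between learner vector L and task vector N."""
    if len(l_vec) != len(n_vec):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(l_vec, n_vec))
    norm_l = math.sqrt(sum(a * a for a in l_vec))
    norm_n = math.sqrt(sum(b * b for b in n_vec))
    if norm_l == 0.0 or norm_n == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_l * norm_n)


# Invented toy vectors: the first pair points in the same direction,
# the second pair is orthogonal.
print(cosine_similarity([1.0, 2.0], [2.0, 4.0]))
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))
```

Cosine distance, where needed, is conventionally taken as one minus this similarity.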

To evaluate the effectiveness of the proposed measures in distinguishing different proficiency levels among nonnative Japanese speakers’ writing, we conducted a multi-faceted Rasch measurement analysis (Linacre, 1994). This approach applies measurement models to thoroughly analyze various factors that can influence test outcomes, including test takers’ proficiency, item difficulty, and rater severity, among others. The underlying principles and functionality of multi-faceted Rasch measurement are illustrated in (12).

\(\log \left(\frac{{P}_{{nijk}}}{{P}_{{nij}(k-1)}}\right)={B}_{n}-{D}_{i}-{C}_{j}-{F}_{k}\)