Critical Thinking in Critical Care: Five Strategies to Improve Teaching and Learning in the Intensive Care Unit

Margaret M. Hayes, Souvik Chatterjee, Richard M. Schwartzstein


Correspondence and requests for reprints should be addressed to Margaret M. Hayes, M.D., Beth Israel Deaconess Medical Center/Harvard Medical School, Pulmonary and Critical Care Medicine, 330 Brookline Avenue E/ES 201B, Boston, MA 02215. E-mail: [email protected]


Received 2016 Dec 15; Accepted 2017 Feb 1; Issue date 2017 Apr.

Critical thinking, the capacity to be deliberate about thinking, is increasingly the focus of undergraduate medical education but is not commonly addressed in graduate medical education. Without critical thinking, physicians, and particularly residents, are prone to cognitive errors, which can lead to diagnostic errors, especially in a high-stakes environment such as the intensive care unit. Although challenging, critical thinking skills can be taught. Data to support an educational gold standard for teaching critical thinking are currently scarce, but we believe that five strategies, rooted in cognitive theory and our personal teaching experiences, provide an effective framework for teaching critical thinking in the intensive care unit. The five strategies are: (1) make the thinking process explicit by helping learners understand that the brain uses two cognitive processes: type 1, an intuitive pattern-recognizing process, and type 2, an analytic process; (2) discuss cognitive biases, such as premature closure, and teach residents to minimize biases by expressing uncertainty and keeping differentials broad; (3) model and teach inductive reasoning by using concept and mechanism maps, and explicitly teach how this reasoning differs from the more commonly used hypothetico-deductive reasoning; (4) use questions to stimulate critical thinking: “how” or “why” questions can be used to coach trainees and to uncover their thought processes; and (5) assess and provide feedback on learners’ critical thinking. We believe these five strategies provide practical approaches for teaching critical thinking in the intensive care unit.

Keywords: medical education, critical thinking, critical care, cognitive errors

Critical thinking, the capacity to be deliberate about thinking and actively assess and regulate one’s cognition ( 1 – 4 ), is an essential skill for all physicians. Absent critical thinking, one typically relies on heuristics, a quick method or shortcut for problem solving, and can fall victim to cognitive biases ( 5 ). Cognitive biases can lead to diagnostic errors, which result in increased patient morbidity and mortality ( 6 ).

Diagnostic errors are the leading cause of medical malpractice claims ( 7 ) and are thought to account for approximately 10% of in-hospital deaths ( 8 ). Many factors contribute to diagnostic errors, including cognitive problems and systems issues ( 9 ), but cognitive errors have been shown to contribute to diagnostic error in almost 75% of cases ( 10 ). In addition, a recent report from the Risk Management Foundation, the research arm of the malpractice insurer for the Harvard Medical School hospitals, labeled more than half of the malpractice cases it evaluated as “assessment failures,” which included “narrow diagnostic focus, failure to establish a differential diagnosis, [and] reliance on a chronic condition or previous diagnosis” ( 11 ). In light of these data and the Institute of Medicine’s 2015 recommendation to “enhance health care professional education and training in the diagnostic process” ( 8 ), we present this framework as a practical approach to teaching critical thinking skills in the intensive care unit (ICU).

The process of critical thinking can be taught ( 3 ); however, methods of instruction are challenging ( 12 ), and there is no consensus on the most effective teaching model ( 13 , 14 ). Explicit teaching about reasoning, metacognition, cognitive biases, and debiasing strategies may help avoid cognitive errors ( 3 , 15 , 16 ) and enhance critical thinking ( 17 ), but empirical evidence to inform best educational practices is lacking. Assessment of critical thinking is also difficult ( 18 ). However, because it is of paramount importance to providing high-quality, safe, and effective patient care, we believe critical thinking should be both explicitly taught and explicitly assessed ( 12 , 18 ).

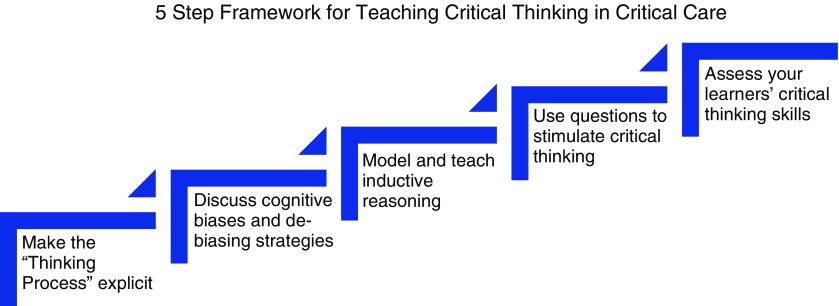

Critical thinking is particularly important in the fast-paced, high-acuity environment of the ICU, where medical errors can lead to serious harm ( 19 ). Despite the paucity of data to support an educational gold standard in this field, we propose five strategies, based on educational principles, we have found effective in teaching critical thinking in the ICU ( Figure 1 ). These strategies are not dependent on one another and often overlap. Using the following case scenario as an example for discussion, we provide a detailed explanation, as well as practical tips on how to employ these strategies.

A 45-year-old man with a history of hypertension presents to the emergency department with fatigue, sore throat, low-grade fever, and mild shortness of breath. On arrival to the emergency department, his heart rate is 110 and his blood pressure is 90/50 mm Hg. He is given 2 L fluids, but his blood pressure continues to fall, and norepinephrine is started. Physical examination is normal with the exception of dry mucous membranes. Laboratory studies performed on blood samples obtained before administration of intravenous fluid show: white blood cell count, 6.0 K/uL; hematocrit, 35%; lactate, 0.8 mmol/L; blood urea nitrogen, 40 mg/dL; and creatinine, 1.1 mg/dL. A chest radiograph shows no infiltrates. He is admitted to the medical intensive care unit. Attending: What is your assessment of this patient? Resident: This is a 45-year-old male with a history of hypertension who was sent to us from the emergency department with sepsis. Attending: That is interesting. I am puzzled: What is the source of infection? And how do you account for the low hematocrit in an essentially healthy man whom you believe to be volume depleted? Resident: Well, maybe pneumonia will appear on the X-ray in the next 24 hours. With respect to the hematocrit...I’m not really sure.

Five strategies to teach critical thinking skills in a critical care environment.

Strategy 1: Make the “Thinking Process” Explicit

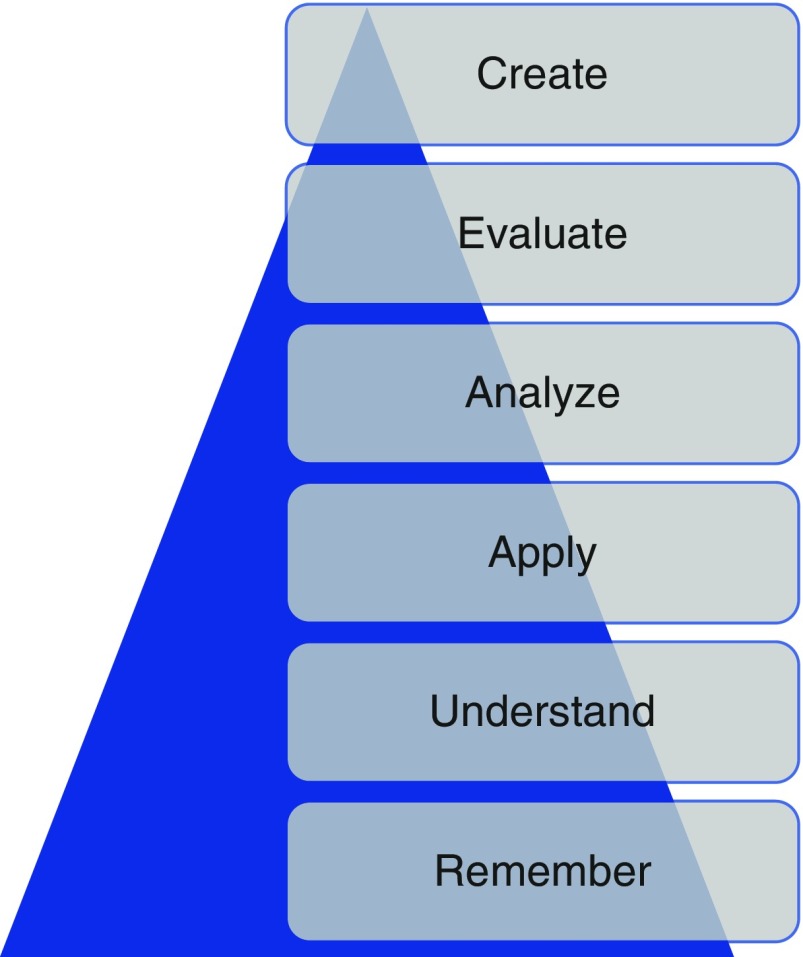

In the ICU, many attendings are satisfied when the trainee simply puts forth an assessment and plan. In the case presented here, the resident’s assessment that the patient has sepsis is likely based on remembering a few facts about sepsis (i.e., hypotension that is not responsive to fluids) and recognizing a pattern (history of possible infection + fever + hypotension = sepsis). From this, we may infer that the learner is operating at the lowest level of Bloom’s taxonomy, remembering ( 20 ) ( Figure 2 ); in this case, she seems to be using reflexive or automatic thought. In a busy ICU, it is tempting for the attending simply to overlook the response and proceed with one’s own plan, but we should expect more. As the attending’s response illustrates, we should make the thinking process explicit and push the resident up Bloom’s taxonomy: to describe, explain, apply, analyze, evaluate, and ultimately create ( 20 ) ( Figure 2 ).

Figure 2. The revised Bloom’s taxonomy. The original taxonomy was published in 1956; this revised version depicts six levels of the cognitive domain, with remembering at the lowest level and creating at the highest. Adapted from Anderson and Krathwohl ( 20 ).

Faculty members should probe the thought process used to arrive at the assessment and encourage the resident to think about her thinking; that is, to engage in the process of metacognition. We recommend doing this in real time as the trainee is presenting the case by asking “how” and “why” questions (see strategy 4).

Attending: Why do you think he has sepsis?

Resident: Well, he came in with infectious symptoms. Also, his blood pressure is quite low, and it only improved slightly with fluids in the emergency department.

Attending: Okay, but how is blood pressure generated? How could you explain hypotension using other data in the case, such as the low hematocrit?
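The attending’s question about how blood pressure is generated can be answered with the standard hemodynamic relations whose terms appear among the abbreviations in Figure 4 (MAP, CVP, CO, SVR, HR, SV). The block below is a simplified sketch under that assumption, not a reproduction of the authors’ mechanism map. It shows why hypotension by itself cannot distinguish a fall in SVR, as in septic vasodilation, from a fall in SV, as when blood loss reduces preload.

$$
\text{MAP} - \text{CVP} = \text{CO} \times \text{SVR}, \qquad \text{CO} = \text{HR} \times \text{SV}
$$

$$
\Rightarrow \quad \text{MAP} = \left(\text{HR} \times \text{SV} \times \text{SVR}\right) + \text{CVP}
$$

Reasoning from each term, rather than from the pattern “infection + hypotension,” keeps a loss of stroke volume on the list, and the unexpectedly low hematocrit then becomes a clue rather than a discordant detail.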

If the trainee is encouraged to think about her thinking, she may conclude that she was trying to force a “pattern” of sepsis, perhaps because she frequently sees patients with sepsis and because the emergency department framed the case in that way. It is possible that she does not have enough experience in the ICU or specific knowledge about sepsis to accurately assess this patient; in the actual case, a third-year resident with significant ICU experience ultimately admitted to defaulting to pattern recognition.

One way to push learners up Bloom’s taxonomy is to help them understand dual-process theory: the idea that the brain uses two thinking processes, type 1 and type 2 (alternately known as system 1 and system 2). Type 1 thinking is the more intuitive process of decision making; type 2 is an analytical process ( 17 , 21 , 22 ). Type 1 thinking is immediate and unconscious, and the hallmark is pattern recognition; type 2 is deliberate and effortful ( 17 ).

Critical thinkers understand and recognize the dual processes ( 21 ) and the fact that type 1 thinking is common in their daily lives. Furthermore, they acknowledge that type 1 reasoning, which is often automatic and unconscious, can be prone to error. Few data link cognitive errors to a particular type of thinking ( 14 ), but many of the relevant studies do not test atypical presentations. As a consequence, they do not truly test the hypothesis that type 2 reasoning reduces error in more complex cases. Combining type 1 and type 2 thinking has been shown to improve diagnostic accuracy compared with using either method alone ( 23 ). We believe that helping learners understand how their minds work will help them recognize when they may be falling into pattern recognition and when this will be problematic (e.g., when there are discordant data or when only one diagnosis quickly comes to mind). If we expect more from our learners and compel them to understand, analyze, and evaluate, we must also provide constant feedback and coaching to help them develop, and we must ask the right questions (see strategy 4) to guide them.

Strategy 2: Discuss Cognitive Biases and De-Biasing Strategies

Cognitive biases are thought patterns that deviate from the typical way of decision making or judging ( 24 ). They occur commonly when we make decisions under stress or time constraints. More than 100 cognitive biases have been described, some of which are more common in medicine than others ( 25 ). We believe that the six outlined in Table 1 are particularly prevalent in the ICU.

Table 1. Six common biases encountered in the intensive care unit

The definitions of these biases are based on their application and use in clinical medicine. Table adapted from Croskerry ( 6 ), Croskerry ( 27 ), and Hogarth ( 37 ).

Although there are many proponents of teaching cognitive biases ( 6 ), no studies have shown that teaching them to trainees improves clinical decision making ( 14 ), although, again, research in this area has often not focused on the scenarios in which cognitive bias is likely to lead to error. Most cognitive biases are quiescent until the right scenario presents itself ( 26 ), which makes them difficult to study in the clinical context. Imagine an overworked, tired resident in a busy ICU, or one who received an incomplete sign-out or felt pressure from the system to make a quick decision to move patient care along. These scenarios occur daily in the ICU; as a consequence, we believe that teaching residents how to recognize biases and giving them strategies to debias is important.

The resident in this clinical scenario is falling prey to several biases in her assessment that the patient has sepsis. First, she has likely seen many patients with sepsis on her ICU rotation, so sepsis is a diagnosis that comes easily to mind (availability bias). Second, she is falling victim to confirmation bias: the hypotension, which supports a diagnosis of sepsis, receives disproportionate weight, whereas the white blood cell count of 6,000, which does not fit easily with that diagnosis, is ignored. Third, she anchors on sepsis and closes prematurely on that diagnosis without looking for other explanations of hypotension. The resident does not realize that she is subject to these biases; explicitly discussing them will help her understand her thinking process, recognize when she may be jumping to conclusions, and identify when she must switch to type 2 thinking.

Attending: Why do you think he has sepsis?

Resident: Well, he came in with infectious symptoms. Also, his blood pressure is quite low, and it did not improve with fluids in the emergency department. This is similar to the other patient with sepsis.

Attending: I can see why sepsis easily comes to your mind, as we have recently admitted three other patients with sepsis. These patients had similar features to this patient, so your mind is jumping to that conclusion, but if we stop and think together about what pieces of the case don’t fit with sepsis, we may come up with a different diagnosis.

Resident: Well, the lack of leukocytosis doesn’t make sense.

Attending: Yes! I agree, that is a bit odd. Let’s broaden our differential and not anchor on sepsis. What else could this be?

Cognitive forcing strategies ( 16 ), in which trainees are made aware of their cognitive biases and then develop strategies to overcome them, may help this resident. Studies show that debiasing can be taught to emergency medicine trainees ( 27 ), and we believe it can also be taught to critical care trainees, who work in a similarly fast-paced, high-stakes learning environment. Proposed debiasing strategies include encouraging trainees to consider alternative diagnoses ( 3 , 6 , 27 , 28 ) and promoting broad differentials. In particular, trainees need to be able to rethink cases when confronted with information that is inconsistent with the working diagnosis; in this case, for example, the absence of leukocytosis. They should be allowed to communicate their level of uncertainty, and we should not think less of them if they do not have a single final answer with a targeted plan ( 29 ). When we do not discuss inconsistent information, we essentially give trainees permission to ignore it.

Attending: In addition to the white blood cell count not fitting, I’m also struggling with the hematocrit: How is it 35% in the setting of presumed decreased intravascular volume?

Resident: Hmm... I’m actually not sure. You’re right, though, it doesn’t make sense.

Attending: I agree. Let’s pause and think about how we are thinking about this case.

To a large degree, recognizing cognitive bias requires metacognition, defined as thinking about one’s thinking ( 3 , 16 , 27 ). This process is aided by familiarity with how the mind works; that is, a basic understanding of dual-process theory and cognitive biases. In the ICU, we find it easiest to engage in a group metacognition exercise: the attending asks, “How are we thinking about this case?” This allows the attending and the team to reflect together on how and why the diagnosis was made, and it can reveal a tendency to close prematurely or to limit the considerations, which has been shown to be the most common cause of inaccurate clinical synthesis ( 10 ).

Other debiasing strategies include accountability ( 6 ) and feedback ( 25 , 30 ). Giving specific and in-the-moment feedback can help residents understand their decisions ( 25 ). It is our job as attendings to provide this feedback, and it is thought that this is one of the most effective debiasing strategies ( 25 ).

Strategy 3: Model and Teach Inductive Reasoning

In medicine, we classically teach clinical reasoning via the hypothetico-deductive strategy ( 31 ) and rarely discuss inductive reasoning. To date, there are no data proving the advantages of one strategy over another, but we believe that modeling inductive reasoning is an important part of critical thinking, especially when type 1 thinking provides limited answers. In hypothetico-deductive reasoning, physicians make a cognitive jump from a few facts to hypotheses framed as a differential diagnosis from which one then deduces characteristics that are matched to the patient ( 32 ). Because this way of thinking relies on memory and pattern recognition, we find that it is more subject to cognitive biases, including premature closure, than inductive reasoning.

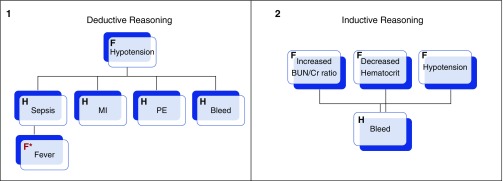

In our case, the presence of hypotension leads the trainee to come up with a differential based primarily on that single observation; the resident thinks of diagnoses such as sepsis or cardiogenic shock. Contrast this way of thinking with inductive reasoning, which proceeds in an orderly way from multiple facts to hypotheses ( 32 ). In our case, putting together the facts of hypotension, decreased hematocrit, and elevated blood urea nitrogen/creatinine would lead to a broader list of possible explanations or hypotheses that would include bleeding (see Figure 3 to compare and contrast inductive and deductive reasoning). We propose that this way of thinking is grounded more deeply in pathophysiology, and we believe it leads to broader thinking, because trainees do not have to rely on memory, pattern recognition, or heuristics; rather, they can reason their way through the problem via an understanding of basic mechanisms of health and disease.

Figure 3. Schematic representations of deductive ( 1 ) and inductive ( 2 ) reasoning as applied to the clinical case. In deductive reasoning, one fact ( F ; hypotension ) is used to generate multiple hypotheses ( H ), and then facts that pertain to each are retrofitted ( red F* ; fever ). In inductive reasoning, facts are grouped and used to generate hypotheses. Adapted from Pottier ( 32 ).

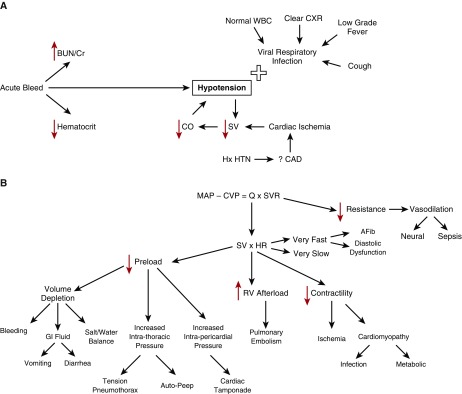

Inductive reasoning can be practiced using both mechanism and concept maps. Mechanism maps are a visual representation of how the pathophysiology of disease leads to the clinical symptoms ( 33 ), whereas concept maps graphically represent relationships between multiple concepts ( 33 ) and make links explicit. Both types reinforce mechanistic thinking and can be used as tools to avoid cognitive biases. Using our case as an example, if the resident started with the hypotension and made a mechanism ( Figure 4A ) or concept ( Figure 4B ) map, she would be less likely to anchor on the diagnosis of sepsis. This process gives trainees a strategy to broaden their differential and a way to think about the case when they do not know what is going on.

Figure 4. ( A ) A mechanism map of the case of a 45-year-old man presenting with cough and shortness of breath who was found to have an increased BUN/Cr ratio, a decreased hematocrit, and a normal white blood cell count. ( B ) A concept map of the clinical case. AFib = atrial fibrillation; BUN/Cr = blood urea nitrogen to creatinine ratio; CAD = coronary artery disease; CO/Q = cardiac output; CVP = central venous pressure; CXR = chest X-ray; GI = gastrointestinal; HR = heart rate; Hx HTN = history of hypertension; MAP = mean arterial pressure; RV = right ventricle; SV = stroke volume; SVR = systemic vascular resistance; WBC = white blood cell.

Although critics contend that these maps take time and have no place in the ICU, we find that quickly sketching a mechanism map on rounds while the case is being presented takes only 1–2 minutes and is a powerful way of making your method of clinical reasoning explicit to the learner. This can also be done later as a way to review pathophysiology. We hold monthly concept mapping sessions for our students ( 34 ) to improve their clinical reasoning skills, but we find that in the ICU with residents, doing this quickly in real time with a mechanism map is more effective.

Strategy 4: Use Questions to Stimulate Critical Thinking

Questions can be used to engage learners and inspire them to think critically. When questioning trainees, it is important to avoid “quiz show” questions that merely test whether a trainee can recall a fact (e.g., “What is the most common cause of X?”). In an age when facts are a quick search away, answers to this type of question reveal less about thinking ability than about how adept one is at searching the internet. These questions do not provide insight into the trainee’s understanding and can, we fear, subtly suggest that the practice of medicine is about memorization rather than thinking. In addition, this type of question is often perceived by the trainee as “pimping.” It can belittle the trainee while securing the attending physician’s place of power ( 35 ) and create a hostile learning environment.

Attending: Why do you think this patient is hypotensive?

Attending: How does the BUN/creatinine ratio relate to the hypotension?

Attending: How would you expect the intravascular volume depletion to affect his hematocrit?

Questions like these allow the trainee to elaborate on her knowledge, which feels much safer to the learner and provides the attending insight into her thinking.

Resident: If my theory of sepsis were correct, I would think the patient would be intravascularly dry and have a higher hematocrit. The fact that it is only 35% and that his BUN/creatinine ratio is consistent with a prerenal picture is making me worried that maybe the hypotension is not from sepsis but, rather, from bleeding. I think we need to evaluate for gastrointestinal bleeding.
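The resident’s appeal to the BUN/creatinine ratio can be made concrete with the admission values from the case (BUN 40 mg/dL, creatinine 1.1 mg/dL). The cutoff used below, a ratio above roughly 20:1, is a commonly cited rule of thumb assumed for this illustration, not a value given in this article.

$$
\frac{\text{BUN}}{\text{Cr}} = \frac{40\ \text{mg/dL}}{1.1\ \text{mg/dL}} \approx 36 > 20
$$

A ratio in this range is consistent with a prerenal picture, and it is also typical of upper gastrointestinal bleeding, in which digested blood protein adds to the urea load. Read together with a hematocrit of 35% measured before fluid resuscitation, the arithmetic supports the resident’s revised concern for bleeding.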

When the right questions are used to coach the resident, her thought processes are uncovered and she can be guided to the correct diagnosis. Although experience and domain-specific knowledge are important, data indicate that in the majority of malpractice cases involving diagnostic error, the problem is not that the doctor did not know the diagnosis; rather, she did not think of it. Reasoning, rather than knowledge, is key to avoiding mistakes in cases with confounding data.

Strategy 5: Assess Your Learner’s Critical Thinking

Assessing critical thinking is difficult but necessary for trainee development ( 18 ). Milestones ranging from challenged and unreflective thinkers to accomplished critical thinkers have been proposed ( 18 ). This approach is helpful not only for providing feedback to trainees on their critical thinking but also for giving trainees a framework to guide reflection on how they are thinking (see Table 2 for a description of the milestones).

Table 2. Milestones of critical thinking and the descriptions of each stage

Note that “Challenged thinker” is in italics because any thinker can be challenged as a result of environmental pressures or time constraints. Adapted from Papp ( 18 ).

It is important to note that anyone, even an accomplished critical thinker, can become a “challenged critical thinker” when the environment precludes critical thinking. This is particularly relevant in critical care. In a busy ICU, one is often faced with time pressure, which contributes to premature closure. In the case presented earlier, perhaps the resident had limited time to admit this patient and thus settled on the diagnosis of sepsis. We hope that teaching trainees to recognize this risk will reduce errors arising from cognitive biases. Imagine a different exchange between faculty and resident:

Attending: How are you doing with the new admission? How are you thinking about the case?

Resident: I’m concerned this is sepsis, but there are a few pieces that don’t fit. However, given the two other admissions and the patient who arrested on the floor and is heading our way, I haven’t been able to give this case as much thought as I would like.

Attending: Okay, do you want to work through the case together? Or could I help with some other tasks so you have more time to think about this?

This type of response reflects a practicing critical thinker: one who is aware of her limitations and thinking processes. This can only occur, however, if the attending creates an environment in which critical thinking is valued by making a safe space and asking the right questions.

Conclusions

The ICU is a high-acuity, fast-paced, and high-stakes environment in which critical thinking is imperative. Despite the limited empirical evidence to guide faculty on best teaching practices for enhancing reasoning skills, it is our hope that these strategies will provide practical approaches for teaching this topic in the ICU. Given how fast medical knowledge grows and how rapidly technology allows us to find factual information, it is important to teach enduring principles, such as how to think.

Our job in the ICU, where life-and-death decisions are made daily, is to teach trainees to focus on how we actually think about problems and to uncover the cognitive biases that cause flawed thinking and may lead to diagnostic error. The focus of the preclerkship curriculum at the undergraduate level is increasingly moving away from transfer of content toward application of knowledge ( 36 ). When teaching residents and fellows, faculty should also emphasize thinking skills by making the thinking process explicit, discussing cognitive biases and debiasing strategies, modeling and teaching inductive reasoning, using questions to stimulate curiosity, and assessing critical thinking skills.

As Albert Einstein said, “Education... is not the learning of facts, but the training of the mind to think...” ( 38 ).


Author Contributions : M.M.H. contributed to manuscript drafting, figure creation, and editing; S.C. contributed to figure creation, critical review, and editing; and R.M.S. contributed to figure creation, critical review, and editing.

Author disclosures are available with the text of this article at www.atsjournals.org .

- 1. Scriven M, Paul R. Critical thinking as defined by the national council for excellence in critical thinking. Presented at the 8th Annual International Conference on Critical Thinking and Education Reform; August 1987; Rohnert Park, California.

- 2. Huang GC, Newman LR, Schwartzstein RM. Critical thinking in health professions education: summary and consensus statements of the Millennium Conference 2011. Teach Learn Med. 2014;26:95–102. doi: 10.1080/10401334.2013.857335.

- 3. Croskerry P. From mindless to mindful practice--cognitive bias and clinical decision making. N Engl J Med. 2013;368:2445–2448. doi: 10.1056/NEJMp1303712.

- 4. Facione P. Critical thinking: what it is and why it counts. Millbrae, CA: The California Academic Press; 2011.

- 5. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.

- 6. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775–780. doi: 10.1097/00001888-200308000-00003.

- 7. Saber Tehrani AS, Lee H, Mathews SC, Shore A, Makary MA, Pronovost PJ, Newman-Toker DE. 25-year summary of US malpractice claims for diagnostic errors 1986-2010: an analysis from the National Practitioner Data Bank. BMJ Qual Saf. 2013;22:672–680. doi: 10.1136/bmjqs-2012-001550.

- 8. National Academies of Sciences, Engineering, and Medicine. Improving diagnosis in health care. Washington, DC: The National Academies Press; 2015.

- 9. Lambe KA, O’Reilly G, Kelly BD, Curristan S. Dual-process cognitive interventions to enhance diagnostic reasoning: a systematic review. BMJ Qual Saf. 2016;25:808–820. doi: 10.1136/bmjqs-2015-004417.

- 10. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–1499. doi: 10.1001/archinte.165.13.1493.

- 11. Hoffman J, editor. 2014 Annual benchmarking report: malpractice risks in the diagnostic process. Cambridge, MA: CRICO Strategies; 2014 [accessed 2017 Feb 22]. Available from: https://psnet.ahrq.gov/resources/resource/28612/2014-annual-benchmarking-report-malpractice-risks-in-the-diagnostic-process

- 12. Willingham DT. Critical thinking: why is it so hard to teach? Am Educ. 2007 Summer:8–19.

- 13. Wellbery C. Flaws in clinical reasoning: a common cause of diagnostic error. Am Fam Physician. 2011;84:1042–1048.

- 14. Norman GR, Monteiro SD, Sherbino J, Ilgen JS, Schmidt HG, Mamede S. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92:23–30. doi: 10.1097/ACM.0000000000001421.

- 15. Croskerry P. Diagnostic failure: a cognitive and affective approach. In: Henriksen K, Battles JB, Marks ES, Lewin DI, editors. Advances in patient safety: from research to implementation. Volume 2: concepts and methodology. Rockville, MD: Agency for Healthcare Research and Quality; 2005. pp. 241–254.

- 16. Croskerry P. Cognitive forcing strategies in clinical decisionmaking. Ann Emerg Med. 2003;41:110–120. doi: 10.1067/mem.2003.22.

- 17. Croskerry P, Petrie DA, Reilly JB, Tait G. Deciding about fast and slow decisions. Acad Med. 2014;89:197–200. doi: 10.1097/ACM.0000000000000121.

- 18. Papp KK, Huang GC, Lauzon Clabo LM, Delva D, Fischer M, Konopasek L, Schwartzstein RM, Gusic M. Milestones of critical thinking: a developmental model for medicine and nursing. Acad Med. 2014;89:715–720. doi: 10.1097/ACM.0000000000000220.

- 19. Garrouste Orgeas M, Timsit JF, Soufir L, Tafflet M, Adrie C, Philippart F, Zahar JR, Clec’h C, Goldran-Toledano D, Jamali S, et al.; Outcomerea Study Group. Impact of adverse events on outcomes in intensive care unit patients. Crit Care Med. 2008;36:2041–2047. doi: 10.1097/CCM.0b013e31817b879c.

- 20. Anderson LW, Krathwohl DR. Taxonomy for learning, teaching and assessing: a revision of Bloom’s taxonomy of educational objectives. New York: Longman; 2001.

- 21. Kahneman D. Thinking, fast and slow. New York: Farrar, Straus and Giroux; 2011.

- 22. Evans JS, Stanovich KE. Dual-process theories of higher cognition: advancing the debate. Perspect Psychol Sci. 2013;8:223–241. doi: 10.1177/1745691612460685.

- 23. Ark TK, Brooks LR, Eva KW. Giving learners the best of both worlds: do clinical teachers need to guard against teaching pattern recognition to novices? Acad Med. 2006;81:405–409. doi: 10.1097/00001888-200604000-00017.

- 24. Haselton MG, Nettle D, Andrews PW. The evolution of cognitive bias. In: Buss DM, editor. The handbook of evolutionary psychology. Hoboken, NJ: John Wiley & Sons Inc.; 2005. pp. 724–746.

- 25. Elstein AS. Thinking about diagnostic thinking: a 30-year perspective. Adv Health Sci Educ Theory Pract. 2009;14:7–18. doi: 10.1007/s10459-009-9184-0.

- 26. Reason J. Human error. Cambridge: Cambridge University Press; 1990.

- 27. Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med. 2002;9:1184–1204. doi: 10.1111/j.1553-2712.2002.tb01574.x.

- 28. Arkes HR. Impediments to accurate clinical judgment and possible ways to minimize their impact. In: Arkes HR, Hammond KR, editors. Judgment and decision making: an interdisciplinary reader. New York: Cambridge University Press; 1986. pp. 582–592.

- 29. Simpkin AL, Schwartzstein RM. Tolerating uncertainty: the next medical revolution? N Engl J Med. 2016;375:1713–1715. doi: 10.1056/NEJMp1606402.

- 30. Croskerry P. The feedback sanction. Acad Emerg Med. 2000;7:1232–1238. doi: 10.1111/j.1553-2712.2000.tb00468.x.

- 31. Bowen JL. Educational strategies to promote clinical diagnostic reasoning. N Engl J Med. 2006;355:2217–2225. doi: 10.1056/NEJMra054782.

- 32. Pottier P, Hardouin J-B, Hodges BD, Pistorius MA, Connault J, Durant C, Clairand R, Sebille V, Barrier JH, Planchon B. Exploring how students think: a new method combining think-aloud and concept mapping protocols. Med Educ. 2010;44:926–935. doi: 10.1111/j.1365-2923.2010.03748.x.

- 33. Guerrero APS. Mechanistic case diagramming: a tool for problem-based learning. Acad Med. 2001;76:385–389. doi: 10.1097/00001888-200104000-00020.

- 34. Richards J, Schwartzstein R, Irish J, Almeida J, Roberts D. Clinical physiology grand rounds. Clin Teach. 2013;10:88–93. doi: 10.1111/j.1743-498X.2012.00614.x.

- 35. Kost A, Chen FM. Socrates was not a pimp: changing the paradigm of questioning in medical education. Acad Med. 2015;90:20–24. doi: 10.1097/ACM.0000000000000446. [ DOI ] [ PubMed ] [ Google Scholar ]

- 36. Krupat E, Richards JB, Sullivan AM, Fleenor TJ, Jr, Schwartzstein RM. Assessing the effectiveness of case-based collaborative learning via randomized controlled trial. Acad Med. 2016;91:723–729. doi: 10.1097/ACM.0000000000001004. [ DOI ] [ PubMed ] [ Google Scholar ]

- 37. Hogarth RM. Judgement and choice: the psychology of decision. Chichester: Wiley; 1980. [ Google Scholar ]

- 38. Frank P. Einstein: his life and times. New York: Da Capo Press; 2002.


Facione PA. Critical thinking: what it is and why it counts. 2020. https://tinyurl.com/ybz73bnx (accessed 27 April 2021)

Faculty of Intensive Care Medicine. Curriculum for training for advanced critical care practitioners: syllabus (part III). version 1.1. 2018. https://www.ficm.ac.uk/accps/curriculum (accessed 27 April 2021)

Guerrero AP. Mechanistic case diagramming: a tool for problem-based learning. Acad Med. 2001;76(4):385-9. https://doi.org/10.1097/00001888-200104000-00020

Harasym PH, Tsai TC, Hemmati P. Current trends in developing medical students' critical thinking abilities. Kaohsiung J Med Sci. 2008;24(7):341-55. https://doi.org/10.1016/S1607-551X(08)70131-1

Hayes MM, Chatterjee S, Schwartzstein RM. Critical thinking in critical care: five strategies to improve teaching and learning in the intensive care unit. Ann Am Thorac Soc. 2017;14(4):569-575. https://doi.org/10.1513/AnnalsATS.201612-1009AS

Health Education England. Multi-professional framework for advanced clinical practice in England. 2017. https://www.hee.nhs.uk/sites/default/files/documents/multi-professionalframeworkforadvancedclinicalpracticeinengland.pdf (accessed 27 April 2021)

Health Education England, NHS England/NHS Improvement, Skills for Health. Core capabilities framework for advanced clinical practice (nurses) working in general practice/primary care in England. 2020. https://www.skillsforhealth.org.uk/images/services/cstf/ACP%20Primary%20Care%20Nurse%20Fwk%202020.pdf (accessed 27 April 2021)

Health Education England. Advanced practice mental health curriculum and capabilities framework. 2020. https://www.hee.nhs.uk/sites/default/files/documents/AP-MH%20Curriculum%20and%20Capabilities%20Framework%201.2.pdf (accessed 27 April 2021)

Jacob E, Duffield C, Jacob D. A protocol for the development of a critical thinking assessment tool for nurses using a Delphi technique. J Adv Nurs. 2017;73(8):1982-1988. https://doi.org/10.1111/jan.13306

Kohn MA. Understanding evidence-based diagnosis. Diagnosis (Berl). 2014;1(1):39-42. https://doi.org/10.1515/dx-2013-0003

Linn A, Khaw C, Kildea H, Tonkin A. Clinical reasoning: a guide to improving teaching and practice. 2012. https://www.racgp.org.au/afp/201201/45593

McGee S. Evidence-based physical diagnosis, 4th edn. Philadelphia PA: Elsevier; 2018

Norman GR, Monteiro SD, Sherbino J, Ilgen JS, Schmidt HG, Mamede S. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92(1):23-30. https://doi.org/10.1097/ACM.0000000000001421

Papp KK, Huang GC, Lauzon Clabo LM et al. Milestones of critical thinking: a developmental model for medicine and nursing. Acad Med. 2014;89(5):715-20. https://doi.org/10.1097/acm.0000000000000220

Rencic J, Lambert WT, Schuwirth L, Durning SJ. Clinical reasoning performance assessment: using situated cognition theory as a conceptual framework. Diagnosis. 2020;7(3):177-179. https://doi.org/10.1515/dx-2019-0051

Ross D, Loeffler K, Schipper S, Vandermeer B, Allan GM. Examining critical thinking skills in family medicine residents. 2016. https://www.stfm.org/FamilyMedicine/Vol48Issue2/Ross121

Royal College of Emergency Medicine. Emergency care advanced clinical practitioner—curriculum and assessment, adult and paediatric. version 2.0. 2019. https://tinyurl.com/eps3p37r (accessed 27 April 2021)

Young ME, Thomas A, Lubarsky S. Mapping clinical reasoning literature across the health professions: a scoping review. BMC Med Educ. 2020;20. https://doi.org/10.1186/s12909-020-02012-9

Advanced practice: critical thinking and clinical reasoning

Sadie Diamond-Fox

Senior Lecturer in Advanced Critical Care Practice, Northumbria University, Advanced Critical Care Practitioner, Newcastle upon Tyne Hospitals NHS Foundation Trust, and Co-Lead, Advanced Critical/Clinical Care Practitioners Academic Network (ACCPAN)


Advanced Critical Care Practitioner, South Tees Hospitals NHS Foundation Trust


Clinical reasoning is a multi-faceted and complex construct, the understanding of which has emerged from multiple fields outside of healthcare literature, primarily the psychological and behavioural sciences. The application of clinical reasoning is central to the advanced non-medical practitioner (ANMP) role, as complex patient caseloads with undifferentiated and undiagnosed diseases are now a regular feature of healthcare practice. This article explores some of the key concepts and terminology that have evolved over the last four decades and have led to our modern-day understanding of this topic. It also considers how clinical reasoning is vital for improving evidence-based diagnosis and subsequent effective care planning. A comprehensive guide to applying diagnostic reasoning on a body-systems basis will be provided later in this series.

The Multi-professional Framework for Advanced Clinical Practice highlights clinical reasoning as one of the core clinical capabilities for advanced clinical practice in England (Health Education England (HEE), 2017). This is also identified in other specialist core capability frameworks and training syllabuses for advanced clinical practitioner (ACP) roles (Faculty of Intensive Care Medicine, 2018; Royal College of Emergency Medicine, 2019; HEE, 2020; HEE et al, 2020).

Rencic et al (2020) defined clinical reasoning as ‘a complex ability, requiring both declarative and procedural knowledge, such as physical examination and communication skills’. A plethora of literature exists on this topic, with a recent scoping review identifying 625 papers, spanning 47 years, across the health professions (Young et al, 2020). A diverse range of terms is used to refer to clinical reasoning within the healthcare literature (Table 1), which can make it challenging to define how each term is applied in clinical practice and education.

The concept of clinical reasoning has changed dramatically over the past four decades. What was once thought to be a process-dependent task is now considered a more dynamic state of practice, affected by ‘complex, non-linear interactions between the clinician, patient, and the environment’ (Rencic et al, 2020).

Cognitive and meta-cognitive processes

As detailed in Table 1, multiple themes surrounding the cognitive and meta-cognitive processes that underpin clinical reasoning have been identified. Central to these processes is the practice of critical thinking. As with clinical reasoning, definitions and conceptualisations of critical thinking in the healthcare setting vary. Facione (2020) described critical thinking as ‘purposeful reflective judgement’ that consists of six core cognitive skills: interpretation, analysis, evaluation, inference, explanation and self-regulation. Ross et al (2016) found that critical thinking correlates positively with academic success, professionalism, clinical decision-making, and wider reasoning and problem-solving capabilities. Jacob et al (2017) also highlighted that patient outcomes and safety are directly linked to critical thinking skills.

Harasym et al (2008) listed nine discrete cognitive steps that may be applied to the process of critical thinking, integrating both cognitive and meta-cognitive processes:

- Gather relevant information

- Formulate clearly defined questions and problems

- Evaluate relevant information

- Utilise and interpret abstract ideas effectively

- Infer well-reasoned conclusions and solutions

- Pilot outcomes against relevant criteria and standards

- Use alternative thought processes if needed

- Consider all assumptions, implications, and practical consequences

- Communicate effectively with others to solve complex problems.

There are a number of widely used strategies to develop critical thinking and evidence-based diagnosis. These include simulated problem-based learning platforms, high-fidelity simulation scenarios, case-based discussion forums, reflective journals as part of continuing professional development (CPD) portfolios and journal clubs.

Dual process theory and cognitive bias in diagnostic reasoning

A lack of understanding of the interrelationship between critical thinking and clinical reasoning can leave clinicians prone to cognitive bias, which can in turn lead to diagnostic errors (Hayes et al, 2017). Central to our understanding of how diagnostic errors occur is dual process theory, which distinguishes system 1 and system 2 thinking; the characteristics of each are described in Table 2. Although much of the literature in this area regards dual process theory as a valid representation of clinical reasoning, the exact causes of diagnostic errors remain unclear and require further research (Norman et al, 2017). The most effective way to teach critical thinking skills in healthcare education also remains unclear. However, Hayes et al (2017) proposed five strategies, rooted in cognitive theory and their own teaching experience, that they believe provide an effective framework for teaching and learning critical thinking within the ‘high-octane’ and ‘high-stakes’ environment of the intensive care unit (Table 3). With its fast pace and constant sensory stimulation, this is arguably not always an ideal environment for learning. However, if the strategies work in this setting, they could plausibly be extrapolated to other busy clinical environments and may even provide a useful aide-memoire for self-assessment and reflective practice.

Integrating the clinical reasoning process into the clinical consultation

Linn et al (2012) described the clinical consultation as ‘the practical embodiment of the clinical reasoning process by which data are gathered, considered, challenged and integrated to form a diagnosis that can lead to appropriate management’. The application of the previously mentioned psychological and behavioural science theories is intertwined throughout the clinical consultation via the following discrete processes:

- The clinical history generates an initial hypothesis regarding the diagnosis, which is then tested through skilled and specific questioning

- The clinician formulates a primary diagnosis and differential diagnoses in order of likelihood

- Physical examination is carried out, aimed at gathering further data necessary to confirm or refute the hypotheses

- A selection of appropriate investigations, using an evidence-based approach, may be ordered to gather additional data

- The clinician (in partnership with the patient) then implements a targeted and rationalised management plan, based on best-available clinical evidence.

Linn et al (2012) also provided a very useful framework for applying the above methods when teaching the consultation with a focus on clinical reasoning (see Table 4). This framework may also prove useful to those who are new to undertaking clinical consultations.

Evidence-based diagnosis and diagnostic accuracy

The principles of clinical reasoning are embedded within the practice of formulating an evidence-based diagnosis (EBD). According to Kohn (2014), EBD quantifies the probability that a disease is present through the use of diagnostic tests. He described three pertinent questions to consider in this respect:

- ‘How likely is the patient to have a particular disease?’

- ‘How good is this test for the disease in question?’

- ‘Is the test worth performing to guide treatment?’

EBD assigns each finding a statistical weighting that is used to update the probability of a disease, and so either supports or refutes the working and differential diagnoses. The updated probability then guides the appropriate course of further diagnostic testing and treatment.
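The updating step that EBD relies on can be illustrated with Bayes' theorem in odds form: post-test odds = pre-test odds × likelihood ratio (LR), where the LR is the statistic defined in the list of diagnostic accuracy measures later in this article. The Python sketch below is a minimal illustration only; the function names, the 20% pre-test probability and the LRs of 4, 0.4 and 1 are hypothetical values chosen to keep the arithmetic simple, not figures taken from Kohn (2014).

```python
def probability_to_odds(probability: float) -> float:
    """Convert a probability in [0, 1) to odds, e.g. 0.2 -> 0.25."""
    if not 0.0 <= probability < 1.0:
        raise ValueError("probability must be in the range [0, 1)")
    return probability / (1.0 - probability)


def odds_to_probability(odds: float) -> float:
    """Convert odds back to a probability, e.g. 1.0 -> 0.5."""
    return odds / (1.0 + odds)


def post_test_probability(pretest_probability: float, likelihood_ratio: float) -> float:
    """Bayes' theorem in odds form: post-test odds = pre-test odds x LR."""
    post_test_odds = probability_to_odds(pretest_probability) * likelihood_ratio
    return odds_to_probability(post_test_odds)


if __name__ == "__main__":
    pretest = 0.20  # hypothetical pre-test probability (20%)
    print(f"Positive finding (LR 4):   {post_test_probability(pretest, 4.0):.0%}")
    print(f"Negative finding (LR 0.4): {post_test_probability(pretest, 0.4):.0%}")
    print(f"Useless finding (LR 1):    {post_test_probability(pretest, 1.0):.0%}")
```

Run as a script, it prints 50%, 9% and 20%: a finding with an LR of 4 raises a 20% pre-test probability to 50%, one with an LR of 0.4 lowers it to about 9%, and an LR of 1 leaves it unchanged. Working in odds rather than probabilities is what reduces each update to a single multiplication.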

Diagnostic accuracy refers to how positive or negative findings change the probability that disease is present. To understand diagnostic accuracy, we need to understand the underlying principles and related statistical calculations concerning sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV) and likelihood ratios.

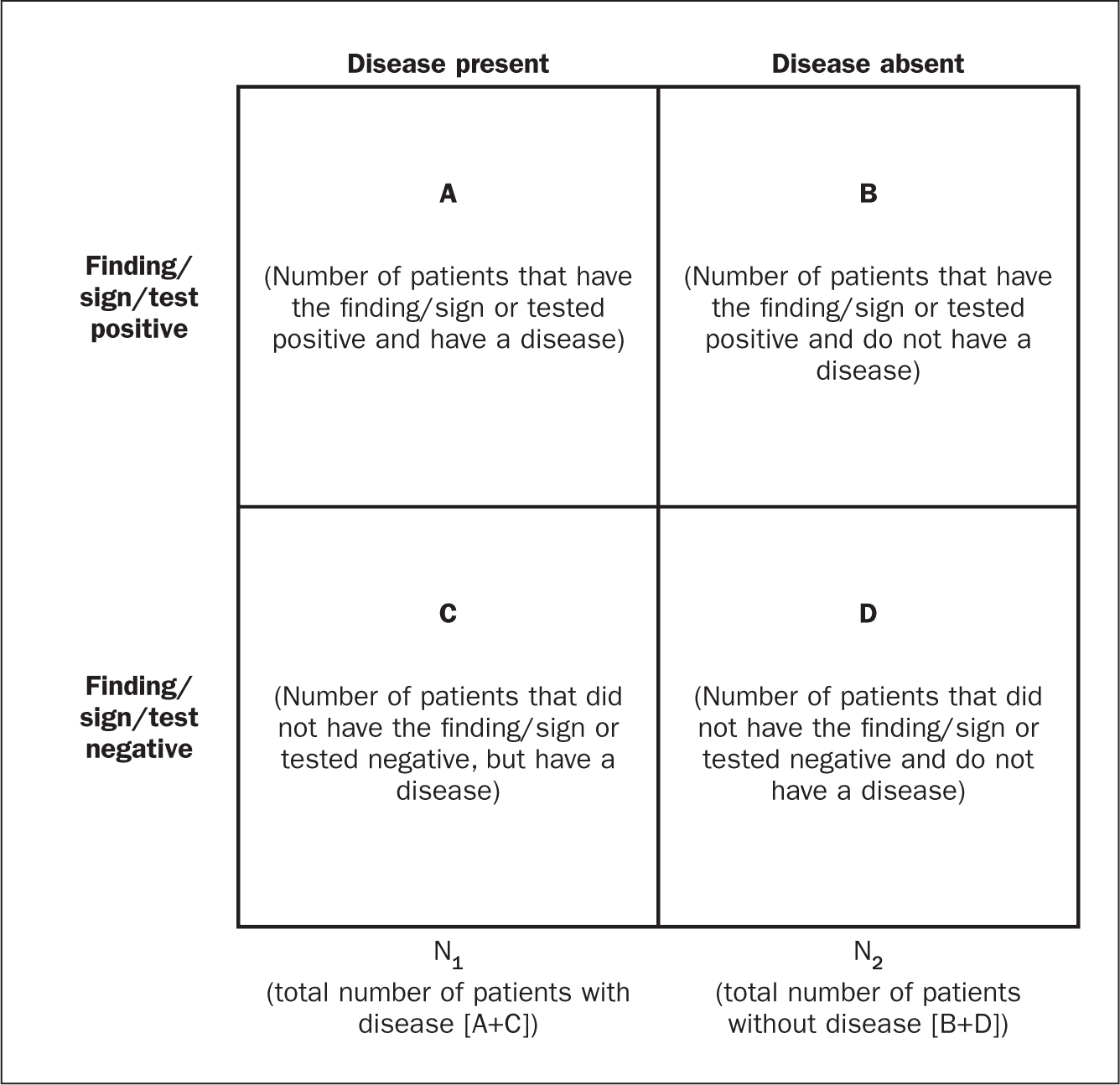

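Constructing a two-by-two (2 × 2) table (Figure 1) allows several statistical weightings to be calculated for a pertinent point in the history, a finding or sign on physical examination, or a test result. In this table, A is the number of patients with the disease who have the finding (true positives), B those without the disease who have the finding (false positives), C those with the disease who lack the finding (false negatives) and D those without the disease who lack the finding (true negatives); N1 = A + C and N2 = B + D. From this construct we can then determine the aforementioned statistical calculations as follows (McGee, 2018):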

- Sensitivity, the proportion of patients with the diagnosis who have the physical sign or a positive test result = A ÷ (A + C)

- Specificity, the proportion of patients without the diagnosis who lack the physical sign or have a negative test result = D ÷ (B + D)

- Positive predictive value, the proportion of patients with the physical sign or a positive test result who have the disease = A ÷ (A + B)

- Negative predictive value, the proportion of patients lacking the physical sign or with a negative test result who do not have the disease = D ÷ (C + D)

- Likelihood ratio (LR), the probability of a given finding/sign/test result in patients with the disease divided by its probability in patients without the disease. A test of no value has an LR of 1 and therefore has no impact on the patient's odds of disease

- Positive likelihood ratio = proportion of patients with disease who have a positive finding/sign/test, divided by the proportion of patients without disease who have a positive finding/sign/test, i.e. (A ÷ N1) ÷ (B ÷ N2), or sensitivity ÷ (1 – specificity). The further an LR lies above 1, the more the finding/sign/test result raises a patient's probability of disease. Thresholds of ≥ 4 are often considered significant when focusing a clinician's interest on the most pertinent positive findings, clinical signs or tests

- Negative likelihood ratio = proportion of patients with disease who have a negative finding/sign/test result, divided by the proportion of patients without disease who have a negative finding/sign/test result, i.e. (C ÷ N1) ÷ (D ÷ N2), or (1 – sensitivity) ÷ specificity. The closer an LR lies to 0, the more the finding/sign/test result lowers a patient's probability of disease. Thresholds of < 0.4 are often considered significant when focusing a clinician's interest on the most pertinent negative findings, clinical signs or tests.
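The formulas above can be checked with a short Python sketch. It assumes the cell layout implied by the formulas (A = true positives, B = false positives, C = false negatives, D = true negatives, so N1 = A + C and N2 = B + D); the class name and the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwoTable:
    """Counts from a 2 x 2 table, using the A-D cell letters of the formulas.

    a: disease present, finding positive (true positives)
    b: disease absent,  finding positive (false positives)
    c: disease present, finding negative (false negatives)
    d: disease absent,  finding negative (true negatives)
    """

    a: int
    b: int
    c: int
    d: int

    @property
    def sensitivity(self) -> float:
        return self.a / (self.a + self.c)  # A / N1

    @property
    def specificity(self) -> float:
        return self.d / (self.b + self.d)  # D / N2

    @property
    def positive_predictive_value(self) -> float:
        return self.a / (self.a + self.b)

    @property
    def negative_predictive_value(self) -> float:
        return self.d / (self.c + self.d)

    @property
    def positive_likelihood_ratio(self) -> float:
        # sensitivity / (1 - specificity); raises ZeroDivisionError if specificity is 1
        return self.sensitivity / (1 - self.specificity)

    @property
    def negative_likelihood_ratio(self) -> float:
        # (1 - sensitivity) / specificity
        return (1 - self.sensitivity) / self.specificity


if __name__ == "__main__":
    table = TwoByTwoTable(a=80, b=40, c=20, d=160)  # hypothetical counts
    for name in ("sensitivity", "specificity", "positive_predictive_value",
                 "negative_predictive_value", "positive_likelihood_ratio",
                 "negative_likelihood_ratio"):
        print(f"{name}: {getattr(table, name):.2f}")
```

With these counts the script prints a sensitivity and specificity of 0.80, a PPV of 0.67, an NPV of 0.89, an LR+ of 4.00 and an LR− of 0.25. Note that PPV and NPV depend on the prevalence of disease in the sample the table was built from, whereas in this simple model sensitivity, specificity and likelihood ratios do not.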

There are various online statistical calculators that can aid in the above calculations, such as the BMJ Best Practice statistical calculators, which may be used as a guide (https://bestpractice.bmj.com/info/toolkit/ebm-toolbox/statistics-calculators/).

Clinical scoring systems

Evidence-based literature supports the practice of determining clinical pretest probability of certain diseases prior to proceeding with a diagnostic test. There are numerous validated pretest clinical scoring systems and clinical prediction tools that can be used in this context and accessed via various online platforms such as MDCalc (https://www.mdcalc.com/#all). Such clinical prediction tools include:

- 4Ts score for heparin-induced thrombocytopenia

- ABCD² score for transient ischaemic attack (TIA)

- CHADS₂ score for atrial fibrillation stroke risk

- Aortic Dissection Detection Risk Score (ADD-RS).
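As an illustration of how such a score is built, the sketch below sums the published CHADS₂ criteria (congestive heart failure, hypertension, age ≥ 75 years and diabetes score 1 point each; prior stroke or TIA scores 2, giving a range of 0–6). It is a minimal Python sketch for teaching purposes, not a validated clinical tool: the function name and the example patient are hypothetical, and it deliberately leaves interpretation of the score to validated resources such as MDCalc.

```python
def chads2_score(
    congestive_heart_failure: bool,
    hypertension: bool,
    age_years: int,
    diabetes: bool,
    prior_stroke_or_tia: bool,
) -> int:
    """Sum the CHADS2 criteria (range 0-6). Illustrative only."""
    score = 0
    score += 1 if congestive_heart_failure else 0  # C
    score += 1 if hypertension else 0              # H
    score += 1 if age_years >= 75 else 0           # A: age 75 years or over
    score += 1 if diabetes else 0                  # D
    score += 2 if prior_stroke_or_tia else 0       # S2: prior stroke or TIA scores 2
    return score


if __name__ == "__main__":
    # Hypothetical patient: 78 years old, hypertensive, previous TIA
    print(chads2_score(
        congestive_heart_failure=False,
        hypertension=True,
        age_years=78,
        diabetes=False,
        prior_stroke_or_tia=True,
    ))
```

For the example patient it prints 4 (1 for hypertension, 1 for age and 2 for the previous TIA).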

Conclusions

Critical thinking and clinical reasoning are fundamental skills of the advanced non-medical practitioner (ANMP) role. They are complex processes that require underpinning knowledge of not only the clinical sciences, but also psychological and behavioural science theories. Multiple constructs exist to guide these processes, not all of which will suit the vast array of specialist areas in which ANMPs practise. The clinical consultation offers multiple opportunities for ANMPs to employ the principles of critical thinking and clinical reasoning to improve patient outcomes, and multiple online toolkits are available to guide the ANMP through this complex process.

- Like consultation and clinical assessment, applying clinical reasoning was once seen as solely the duty of a doctor; however, the advanced non-medical practitioner (ANMP) role crosses those traditional boundaries

- Critical thinking and clinical reasoning are fundamental skills of the ANMP role

- The processes underlying clinical reasoning are complex and require an array of underpinning knowledge of not only the clinical sciences, but also psychological and behavioural science theories

- Through the use of the principles underlying critical thinking and clinical reasoning, there is potential to make a significant contribution to diagnostic accuracy, treatment options and overall patient outcomes

CPD reflective questions

- What assessment instruments exist for the measurement of cognitive bias?

- Think of an example of when cognitive bias may have impacted on your own clinical reasoning and decision making

- What resources exist to aid you in developing into the ‘advanced critical thinker’?

- What resources exist to aid you in understanding the statistical terminology surrounding evidence-based diagnosis?


Principles of diagnostic reasoning

Wes Mountain/The Pharmaceutical Journal

By the end of this article, you will be able to:

- Understand the role of fast and slow thinking during diagnostic reasoning;

- Know how to structure your diagnostic approach;

- Use illness scripts as a tool for developing your diagnostic efficiency.

RPS Competency Framework for All Prescribers

This article aims to support the development of knowledge and skills related to the following competencies:

Domain 1: Assess the patient (1.6, 1.7, 1.8, 1.10, 1.11, 1.12)

- Takes and documents an appropriate medical, psychological and medication history, including allergies and intolerances;

- Undertakes and documents an appropriate clinical assessment;

- Identifies and addresses potential vulnerabilities that may be causing the patient/carer to seek treatment;

- Requests and interprets relevant investigations necessary to inform treatment options;

- Makes, confirms or understands, and documents the working or final diagnosis by systematically considering the various possibilities (differential diagnosis);

- Understands the condition(s) being treated, their natural progression, and how to assess their severity, deterioration and anticipated response to treatment.

Introduction

Diagnostic reasoning is a concept often used interchangeably with terms such as ‘clinical reasoning’, ‘clinical problem solving’ and ‘clinical decision making’. Collectively, these terms are recognised as representing the central idea proposed by Barrows and Tamblyn: the “cognitive process necessary to evaluate and manage a patient’s medical problem” [1,2]. A broader definition encompasses the idea that diagnostic reasoning is the conscious and unconscious interpretation of patient data, and the consideration of risks and benefits of actions to determine a working diagnosis and treatment plan [3].

Historically, diagnostic reasoning has focused on applying the information you have gained from history taking and physical examination to formulate your list of potential causes for the patient’s presenting complaint and create your differential diagnosis [4]. Given the changing role of pharmacists as independent prescribers, it can also involve identifying medication-related problems, considering therapeutic options and optimising medication regimens [5,6].

It is recommended that you read this article in conjunction with these resources from The Pharmaceutical Journal :

- ‘Introduction to the prescribing consultation’;

- ‘Principles of effective history taking when prescribing’;

- ‘Introduction to clinical assessment for prescribers’;

- ‘Performing a physical examination on a patient when prescribing’.

To help further expand your prescribing skills, additional related articles are linked throughout. You will also be able to test your knowledge by completing a short quiz at the end of the article.

Structuring diagnostic reasoning

A commonly accepted theory of diagnostic reasoning is dual process theory, popularised by Kahneman, which describes two different thought processes that occur when making a decision: system 1 and system 2 thinking. It is acknowledged that clinicians switch between and combine these reasoning methods depending on the scenario and their own experience [7].

System 1 and system 2 thinking

System 1 (intuitive system): the fast, automatic reaction to information based on mental shortcuts formed from patterns or habits. This is often triggered when dealing with common, typical and uncomplicated presentations.

System 2 (hypothetico-deductive system): the slow, systematic, controlled process based on conscious judgement, logic and the range of probabilities being considered. This is often triggered if the presentation is not recognised, atypical or ambiguous.

Watch this short video for more commentary on system 1 and 2 thinking applied in a clinical context.

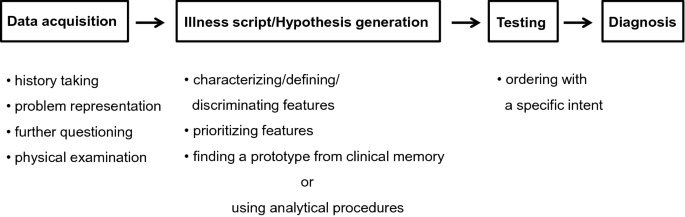

Effective diagnostic reasoning often uses both system 1 and system 2 thinking and requires a combination of experience and skills (pattern recognition, critical thinking, communication skills, evidence-based practice, teamwork and reflection) [8]. The reasoning process can be considered as comprising four discrete stages: information gathering, hypothesis generation, hypothesis testing and reflection [9]. We will now briefly consider each.

1. Information gathering

The first step of diagnostic reasoning is processing the information that can be gained from the patient’s health record, history, laboratory results, tests and physical examination. This might include, for example, recent medication changes, blood pressure readings, creatinine clearance trends and features found on examination (e.g. ankle swelling, shortness of breath).

2. Hypothesis generation

From here, a broad, if not exhaustive, list of possible conditions can be generated for consideration at this stage of the process [10]. When there are many signs and symptoms to consider, or the presentation is vague, it can be challenging to match the information gathered with a single problem or even a problem shortlist. At this stage, a system 2 approach can be adopted, in which clusters of observations are separated and explored systematically within themes (e.g. anatomical location, the patient’s age, timing or onset of symptoms, review of body systems or multisystem conditions). Some examples of the benefits of this approach are shown in the Table.

3. Hypothesis testing

By now, the problem list has been reviewed, rationalised and prioritised to generate the working hypothesis (the differential or working diagnosis). This is done by matching the symptoms to possible diseases or medication-related issues, then cross-checking the associated symptoms of each against the patient’s presentation and medication history.

This step promotes the identification of defining features of a condition or, where they exist, discriminatory features that are unique to a particular disease [10]. This process allows conditions that do not match to be eliminated, narrowing your differentials to a more specific list of possibilities [4]. For example, shortness of breath and coughing without signs of infection make community-acquired pneumonia less likely, whereas coughing up pink sputum may point towards pulmonary oedema.
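The narrowing described above can be caricatured in a few lines of Python: hypotheses whose required features are documented as absent are dropped, and the rest are ranked by how many expected features are present. This is a toy sketch under heavy assumptions; the class, function and feature names are hypothetical, and treating one absent feature as exclusion simplifies the judgement the text describes, where such findings make a diagnosis less likely rather than impossible.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    name: str
    expected: set[str] = field(default_factory=set)  # features that support it
    required: set[str] = field(default_factory=set)  # features whose absence counts against it


def rationalise_differentials(
    hypotheses: list[Hypothesis],
    present: set[str],
    absent: set[str],
) -> list[Hypothesis]:
    """Drop hypotheses whose required features are documented as absent,
    then rank the remainder by how many expected features are present."""
    retained = [h for h in hypotheses if not (h.required & absent)]
    return sorted(retained, key=lambda h: len(h.expected & present), reverse=True)


if __name__ == "__main__":
    differentials = [
        Hypothesis("community-acquired pneumonia",
                   expected={"cough", "shortness of breath", "signs of infection"},
                   required={"signs of infection"}),
        Hypothesis("pulmonary oedema",
                   expected={"cough", "shortness of breath", "pink sputum"}),
    ]
    ranked = rationalise_differentials(
        differentials,
        present={"cough", "shortness of breath", "pink sputum"},
        absent={"signs of infection"},
    )
    print([h.name for h in ranked])
```

With the findings from the example in the text, it prints ['pulmonary oedema']: pneumonia is set aside because signs of infection are documented as absent.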

Finally, further investigations that could provide pertinent negatives or confirmatory findings should be considered. For example, a patient presenting with symptoms of heart failure (e.g. shortness of breath, oedema) after a recent myocardial infarction may have a raised B-type natriuretic peptide (BNP) level, but would still require an echocardiogram to confirm the diagnosis.

This working diagnosis then informs the treatment plan.

Throughout this whole process it is essential that you are vigilant to potential life-limiting conditions, including how to identify or rule out red flags and when onward referral will be required [4,11] . At all times it is imperative that you work within your scope of practice and have the requisite self-awareness to know when to refer to a more senior clinician, or involve another healthcare professional in your decision-making process.

4. Reflection

For all prescribers, but particularly those early in their career or working in a new scope of practice, an organised approach is critical to avoiding cognitive errors when generating diagnostic hypotheses. With experience, system 1 thinking allows pattern recognition to generate a diagnosis based on conditions, patients and cases we have seen before; however, there is a risk that the prescriber applies biases from their own experience rather than considering the full depth of information presented. Applying a system 2 approach can produce a more considered and accurate diagnosis [11].

Having formulated a working diagnosis, it may sometimes be appropriate to perform further tests to confirm it. Kohn (2014) identified three pertinent questions for diagnostic reasoning when considering whether further tests are required [12]:

- How likely is the patient to have this disease?

- How good is this ‘test’ for the disease in question?

- Is the test worth performing to guide treatment?

Finally, the identified problem and the reasoning to support this should be succinctly documented in the patient record [12] .

The illness script method

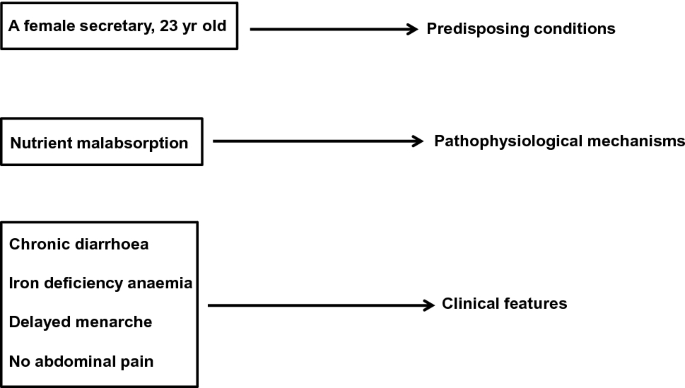

An alternative understanding and application of diagnostic reasoning is the use of ‘illness scripts’. Here, a clinician relies on prior learning and first-hand experience to recognise a pattern of clinical characteristics as clues to the potential diagnosis. An illness script is a mental cue card that represents an individual disease, including its typical causal features, the actual pathology, the resulting signs, symptoms and expected diagnostic findings, and the most likely course/prognosis with suitable management.

Illness scripts can help prescribers focus their questions during history taking, contextualise patterns of signs and symptoms and help to integrate new clinical knowledge with existing knowledge. A novice prescribing clinician will have limited experience to draw from initially so will rely upon their biomedical knowledge and have a broad range of differential options where symptoms may need to be worked through one by one. Experienced practitioners will have had time to hone their scripts so that they are quicker and can support a diagnosis more directly. Regardless of experience, the illness script requires knowledge of the epidemiological factors of the disease, the associated signs and symptoms and the pathophysiology. Some models of illness scripts also consider the time-course of the disease [10,13,14] .

We can use an illness script approach for many conditions, and you may already have some well-developed scripts for conditions you commonly see in your own clinical practice. For example, an illness script for croup could involve the following: epidemiology (infants and toddlers), pathophysiology (parainfluenza virus), presentation (fever, barky cough, stridor, worse at night) and management (oral corticosteroid such as dexamethasone, hydration, paracetamol).
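An illness script maps naturally onto a simple record with one field per component. The Python sketch below encodes the croup example as such a record and reports which of its presenting features a patient shows; the class, method and field names and the example findings are hypothetical, and the match fraction is a teaching device rather than a validated measure of diagnostic likelihood.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IllnessScript:
    """A structured 'mental cue card' for one condition."""

    condition: str
    epidemiology: tuple[str, ...]
    pathophysiology: tuple[str, ...]
    presentation: tuple[str, ...]
    management: tuple[str, ...]

    def matched_features(self, findings: set[str]) -> set[str]:
        """Return the presenting features of this script seen in the patient."""
        return set(self.presentation) & findings

    def match_fraction(self, findings: set[str]) -> float:
        """Share of the script's presenting features found in the patient."""
        return len(self.matched_features(findings)) / len(self.presentation)


CROUP = IllnessScript(
    condition="croup",
    epidemiology=("infants and toddlers",),
    pathophysiology=("parainfluenza virus",),
    presentation=("fever", "barky cough", "stridor", "worse at night"),
    management=("oral corticosteroid (e.g. dexamethasone)", "hydration", "paracetamol"),
)

if __name__ == "__main__":
    findings = {"barky cough", "stridor", "runny nose"}  # hypothetical patient
    print(sorted(CROUP.matched_features(findings)), CROUP.match_fraction(findings))
```

For the example findings it prints ['barky cough', 'stridor'] 0.5. An experienced clinician's script would also weight discriminating features more heavily than common ones, which this unweighted sketch does not attempt.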

The following case illustrates how an illness script can be developed and used to form a diagnosis.

Case in practice

Action: List the questions that you would ask the patient. What physical examination could or would you undertake? What illness scripts did you form as you read through the case presentation? How can these inform the diagnosis?

Discussion: Several features of the presentation indicate that the patient has gout, rather than a chronic form of arthritis, including:

- Presentation as an acute flare of symptoms;

- Onset of symptoms was abrupt;

- Pain is localised and severe;

- It is affecting a single joint.

The effectiveness of your script will have been influenced by how much you already knew about gout. Awareness of the epidemiology of the condition, the temporal pattern of symptom presentation and predisposing conditions, and specifically any defining (single joint) or discriminating (episodic acute pain) symptoms, will have helped you to reach a diagnosis directly. Novice practitioners whose illness script for gout is still forming may need to spend longer working through the information gathered before they can differentiate the patient’s disease from others that may have a similar presentation.

Your script will also have included ways to eliminate other diagnostic possibilities and to weigh up the likelihood of the remaining diagnoses. For example, you would have asked about acute trauma or injury to exclude a fracture, and ruled out septic arthritis as unlikely because the patient has no systemic signs of infection.

For more information on gout, see: ‘Treatment and management of gout: the role of pharmacy’.

While learning to understand the processes of diagnostic reasoning, it is also important to recognise the potential sources of error, which may include [15] :

- Anchoring bias: The tendency to settle on an idea early in the process and not adjust your thinking when new information becomes available;

- Availability heuristic: Assuming a diagnosis because you have seen lots of recent cases;

- Confirmation bias: Seeking evidence to support your initial impression/diagnosis and ignoring information that does not support your diagnosis;

- Diagnostic momentum: Relying on previous clinicians’ diagnostic decisions and ignoring new information that may be contradictory;

- Framing effect: How the information is presented influences the diagnosis (e.g. emphasising or omitting certain clinical variables, such as CD4 count, abdominal pain, weight loss, anxiety or polysubstance abuse, can lead to different diagnostic considerations, ranging from viral gastroenteritis to hyperthyroidism, malnutrition or drug toxicity);

- Representation error: Not considering the prevalence of a condition when predicting its likelihood;

- Visceral bias: Allowing negative or positive feelings towards the patient to influence your decisions and diagnosis.
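Representation error, as described above, amounts to neglecting the prevalence (base rate) of a condition. Bayes’ theorem shows why this matters: the same positive finding means very different things at different prevalences. The Python sketch below computes the probability of disease after a positive finding; the function name `post_test_probability` is invented for this example, and the sensitivity, specificity and prevalence values are purely hypothetical, chosen for arithmetic clarity rather than taken from any real test or condition.

```python
def post_test_probability(prevalence: float, sensitivity: float,
                          specificity: float) -> float:
    """Probability of disease given a positive finding (positive predictive value).

    Bayes' theorem:
        P(D | +) = sens * prev / (sens * prev + (1 - spec) * (1 - prev))
    All inputs are proportions between 0 and 1.
    """
    for name, value in (("prevalence", prevalence),
                        ("sensitivity", sensitivity),
                        ("specificity", specificity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    total = true_positives + false_positives
    if total == 0.0:
        raise ValueError("no positive findings are possible with these inputs")
    return true_positives / total


if __name__ == "__main__":
    # Hypothetical finding with 90% sensitivity and 90% specificity,
    # applied in a low-prevalence and a high-prevalence setting.
    for prevalence in (0.01, 0.30):
        p = post_test_probability(prevalence, sensitivity=0.90, specificity=0.90)
        print(f"prevalence {prevalence:.0%}: post-test probability {p:.0%}")
```

With these assumed values, a positive finding corresponds to roughly an 8% probability of disease when prevalence is 1%, but roughly 79% when prevalence is 30%. Ignoring prevalence would treat both situations as equally convincing.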

Tips and guidance for good diagnostic reasoning

There are several things prescribers can do to help guard against these common sources of diagnostic error [16] :

- Slow down. Time is often pressured, but rushing threatens thorough information gathering and leaves more room for unconscious bias;

- Consider the likelihood of the presenting problems: “common things are common”. That said, a broad awareness of rarer diseases, and openness to considering them, is still required;

- Consider what information you have and focus on what is truly relevant;

- Actively consider alternative diagnoses. Pay particular attention to potentially life-limiting conditions and the presenting symptoms that point to them. For example, it is important to rule out temporal arteritis in a patient presenting with headache if scalp tenderness is present;

- Ask active questions about the distinguishing features of the conditions in your working diagnosis, so that you can rule them out or disprove them. For example, chest pain that occurs only on inspiration, rather than at rest, may point to a respiratory rather than a cardiac cause;

- Consider the implications of a wrong diagnosis.

The principles of diagnostic reasoning rest on the pharmacist undertaking effective continuing professional development and practising evidence-based diagnosis and medicine. Professional experience and exposure to different illnesses will grow naturally over time, but it is important that prescribers continuously invest in developing their knowledge of the conditions being treated, their natural progression and how to assess their severity, deterioration and anticipated response to treatment.

The following scenario provides an opportunity to explore some of the concepts introduced in this article and apply them to practice.

Your physical examination shows an inflamed pharynx, a clear chest and no impaired movement of any joint. Observations and laboratory results show a temperature of 38.4 °C, a raised CRP, Hb 116 g/L and a raised white cell count.

Action: From the details provided, write a list of your most likely differential diagnoses and any additional tests that you might request.

Using the cluster of observation approach (see Table), how could you reach a diagnosis for the differential list you have created?

Discussion (applying System 2 thinking): Taking the anatomic location approach, you start with the head and explore the history further, identifying that the pain is in the throat and occurs mostly on swallowing. Your physical observations match this, with pustular, inflamed tonsils. The rapid antigen test is positive.

Given the patient’s age, the common differentials for symptoms in this location include viral and bacterial sore throat, and the positive antigen test makes bacterial infection most likely. The patient’s age also indicates a risk of glandular fever (infectious mononucleosis), so establishing the onset of symptoms is a useful discriminating factor here.

Linking the onset of the different signs and symptoms can help to clarify the story and the likely diagnosis. In this case, the exhaustion predates the sore throat, which helps to distinguish between the two most common concerns: glandular fever and streptococcal sore throat. In the absence of laboratory test results, this timeline is a key component of the diagnostic reasoning that supports a diagnosis of bacterial sore throat. The exhaustion or lethargy in this case is unrelated to the sore throat and could be linked to other conditions or to lifestyle. Conversely, had the sore throat persisted for weeks before the lethargy started, this would be more indicative of glandular fever.

This diagnosis can be corroborated by looking at the involvement of other body systems. The symptoms in this case are localised, and the more general signs and symptoms of fever and aches can be linked back to your likely differential diagnosis. If other systems were involved (for example, abdominal tenderness), this could influence your diagnostic reasoning and prompt you to explore the possibility of glandular fever further.

As the presenting patient was young and there was no recorded history of smoking or occupational hazards, there were numerous conditions that were not part of your initial differential list.

Reflections on the case: How would your diagnostic reasoning change if the patient presenting was a 59-year-old male with a 40-pack-year smoking history and recent unplanned weight loss?

How would your diagnostic reasoning change if the patient presenting was a 36-year-old female refugee living in shared accommodation?

For more information on assessment of sore throat and use of Centor and FEVERPAIN prediction tools, see:

- ‘Introduction to clinical assessment for prescribers’;

- ‘Case-based learning: sore throat’.

Knowledge check


Question 1

What type of thinking is being used when you are relying upon your fast thinking and pattern recognition to form a diagnosis?

- System 1 thinking

- System 2 thinking

Question 2

Which four of the following are considered strategies for good diagnostic reasoning and safety netting?

- Working quickly to get to the answer

- Consider the likelihood of the presenting problems

- Consider what information you have and focus on what is irrelevant

- Actively consider alternative diagnoses, including potentially life limiting conditions

- Ask active questions to rule out or disprove conditions that are part of your working diagnosis

- Consider the implications of a wrong diagnosis

Question 3

Seeking evidence to support your diagnosis and/or ignoring information that does not support your diagnosis is known as:

- Confirmation bias

- Diagnostic momentum

- Framing effect

- Representation error

Question 4

True or false: age is not relevant when creating the differential diagnosis list.

Question 5

True or false: diagnostic reasoning only applies to the formulation of a new diagnosis.

Expanding your scope of practice

The following resources expand on the information contained in this article:

- ‘How to use clinical reasoning in pharmacy’, The Pharmaceutical Journal;

- ‘Effective practitioner: core skills of decision making’, NHS Education for Scotland;

- ‘How to apply evidence to practice’, The Pharmaceutical Journal.

- 1 Barrows HS, Tamblyn RM. Problem-based learning: an approach to medical education. New York: Springer; 1980. https://app.nova.edu/toolbox/instructionalproducts/edd8124/fall11/1980-BarrowsTamblyn-PBL.pdf (accessed February 2024)

- 2 Round A. Introduction to clinical reasoning. Journal of Evaluation in Clinical Practice. 2001;7:109–17. https://doi.org/10.1046/j.1365-2753.2001.00252.x

- 3 Dy-Boarman EA, Bryant GA, Herring MS. Faculty preceptors’ strategies for teaching clinical reasoning skills in the advanced pharmacy practice experience setting. Currents in Pharmacy Teaching and Learning. 2021;13:623–7. https://doi.org/10.1016/j.cptl.2021.01.023

- 4 Bickley L, Szilagyi P, Hoffman R, et al. Bates’ guide to physical examination and history taking. 13th ed. Philadelphia: Wolters Kluwer; 2021.

- 5 Wright DFB, Anakin MG, Duffull SB. Clinical decision-making: An essential skill for 21st century pharmacy practice. Research in Social and Administrative Pharmacy. 2019;15:600–6. https://doi.org/10.1016/j.sapharm.2018.08.001

- 6 Eva KW. What every teacher needs to know about clinical reasoning. Med Educ. 2005;39:98–106. https://doi.org/10.1111/j.1365-2929.2004.01972.x

- 7 Kahneman D. Thinking, fast and slow. 1st ed. New York: Farrar, Straus and Giroux; 2011.

- 8 Effective Practitioner. Core skills of decision making. NHS Education for Scotland. https://www.effectivepractitioner.nes.scot.nhs.uk/clinical-practice/core-skills-of-decision-making.aspx (accessed February 2024)

- 9 Trimble M, Hamilton P. The thinking doctor: clinical decision making in contemporary medicine. Clin Med. 2016;16:343–6. https://doi.org/10.7861/clinmedicine.16-4-343

- 10 Bowen JL. Educational Strategies to Promote Clinical Diagnostic Reasoning. N Engl J Med. 2006;355:2217–25. https://doi.org/10.1056/nejmra054782

- 11 Sackett D. The rational clinical examination. A primer on the precision and accuracy of the clinical examination. JAMA. 1992;267:2638–44.

- 12 Kohn MA. Understanding evidence-based diagnosis. Diagnosis. 2014;1:39–42. https://doi.org/10.1515/dx-2013-0003

- 13 Gavinski K, Covin YN, Longo PJ. Learning How to Build Illness Scripts. Academic Medicine. 2019;94:293. https://doi.org/10.1097/acm.0000000000002493

- 14 Charlin B, Boshuizen HPA, Custers EJ, et al. Scripts and clinical reasoning. Medical Education. 2007;41:1178–84. https://doi.org/10.1111/j.1365-2923.2007.02924.x

- 15 Croskerry P. The Importance of Cognitive Errors in Diagnosis and Strategies to Minimize Them. Academic Medicine. 2003;78:775–80. https://doi.org/10.1097/00001888-200308000-00003

- 16 Klein JG. Five pitfalls in decisions about diagnosis and prescribing. BMJ. 2005;330:781–3. https://doi.org/10.1136/bmj.330.7494.781


Diagnostic reasoning in internal medicine: a practical reappraisal

- IM - REVIEW

- Open access

- Published: 01 December 2020

- Volume 16, pages 273–279 (2021)


- Gino Roberto Corazza (ORCID: 0000-0001-9532-0573)

- Marco Vincenzo Lenti

- Peter David Howdle


The practice of clinical medicine needs to be a very flexible discipline which can adapt promptly to continuously changing surrounding events. Despite the huge advances made in recent decades, clinical reasoning to achieve an accurate diagnosis still seems to be the most appropriate and distinctive feature of clinical medicine. This is particularly evident in internal medicine, where diagnostic boundaries are often blurred. Making a diagnosis is a multi-stage process which requires proper data collection, the formulation of an illness script and testing of the diagnostic hypothesis. To make sense of a number of variables, physicians may follow an analytical or an intuitive approach to clinical reasoning, depending on their personal experience and level of professionalism. Intuitive thinking is more typical of experienced physicians, but is not devoid of shortcomings. In particular, the high risk of bias must be counteracted by de-biasing techniques, which require constant critical thinking. In this review, we critically discuss current knowledge regarding diagnostic reasoning from an internal medicine perspective.


Introduction