ORIGINAL RESEARCH article

Identification of apple leaf diseases by improved deep convolutional neural networks with an attention mechanism

- 1 College of Mechanical and Electronic Engineering, Northwest A&F University, Xianyang, China

- 2 Key Laboratory of Agricultural Internet of Things, Ministry of Agriculture and Rural Affairs, Xianyang, China

- 3 Shaanxi Key Laboratory of Agricultural Information Perception and Intelligent Services, Xianyang, China

- 4 College of Information Engineering, Northwest A&F University, Xianyang, China

The accurate identification of apple leaf diseases is of great significance for controlling the spread of diseases and ensuring the healthy and stable development of the apple industry. To improve detection accuracy and efficiency, a deep learning model called the Coordination Attention EfficientNet (CA-ENet) is proposed to identify different apple leaf diseases. First, a coordinate attention block is integrated into the EfficientNet-B4 network; it embeds the spatial location information of features into channel attention so that the model can learn both the channel and the spatial location information of important features. Then, depth-wise separable convolution is applied in the convolution module to reduce the number of parameters, and the h-swish activation function is introduced to speed up computation and make the model easier to quantize. Afterward, 5,170 images were collected in the field at the apple planting base of Northwest A&F University, and 3,000 images were obtained from the public PlantVillage dataset. Image augmentation techniques were then used to generate an Apple Leaf Disease Identification Data set (ALDID) containing 81,700 images. The experimental results show that the CA-ENet achieves an accuracy of 98.92% on the ALDID and an average F1-score of 0.988, outperforming common models such as ResNet-152, DenseNet-264, and ResNeXt-101. A generated test dataset is used to evaluate the anti-interference ability of the model. The results show that the proposed method achieves competitive performance on the apple leaf disease identification task.

Introduction

The apple industry is one of the most important fruit industries in China. However, frequent apple leaf diseases can seriously restrict its healthy and stable development. At present, disease recognition in many industrialized apple orchards relies mainly on human vision and therefore depends heavily on disease experts. The identification workload is heavy, and visual inspection by fruit farmers or experts is prone to misjudgment caused by subjective perception and visual fatigue, making it difficult to meet the demand for high-precision identification in intelligent orchards ( Dutot et al., 2013 ). These problems cause a large lag in the tracking and management of orchard diseases, which leads to improper pesticide use and lower fruit quality. Therefore, accurate disease identification is of great significance for improving the yield and quality of apples and for cultivating disease-resistant varieties.

With the development of computer vision, machine learning techniques have been widely used in agriculture in recent years, and a series of achievements have been made in crop disease identification ( Aravind et al., 2018 ; Kour and Arora, 2019 ; Mohammadpoor et al., 2020 ). The main techniques used in crop disease identification include artificial neural networks (ANN) ( Sheikhan et al., 2012 ), the K-Nearest Neighbors (KNN) algorithm ( Guettari et al., 2016 ), random forests (RF) ( Kodovsky et al., 2012 ), and so on. For example, Wang et al. (2019) proposed a method for identifying cucumber powdery mildew based on the visible spectrum: spectral features were extracted and used to train a Support Vector Machine (SVM) classifier with an optimized radial basis function kernel, and the recognition accuracy reached 98.13%. Prasad et al. (2016) proposed a mobile client-server architecture for leaf disease detection and diagnosis based on the combination of a Gabor Wavelet Transform (GWT) and a Gray-Level Co-occurrence Matrix (GLCM). The mobile terminal captures the object image, pre-processes it, and transmits it to the server, which then performs GWT-GLCM feature extraction and KNN-based classification. The system can monitor farmland information through the mobile terminal at any stage. Although these studies achieved outstanding performance in disease identification tasks, the low-level feature representations they extract are limited to intuitive shallow features, such as the colors, textures, and shapes of the images. Thus, it is difficult for such methods to achieve competitive performance on apple leaf disease identification tasks.

Compared with machine learning algorithms that require cumbersome image pre-processing and feature extraction ( Kulin et al., 2018 ; Zhang et al., 2018b ), convolutional neural networks (CNNs) can directly learn robust high-level feature representations of apple diseases from images. These high-level representations are richer than manually extracted features; therefore, CNNs have achieved excellent results in multiple visual tasks ( Ren et al., 2017 ; Liu et al., 2018 ; Bi et al., 2020 ). In recent years, with the continuous emergence of advanced deep learning architectures such as ResNet ( He et al., 2016 ), ResNeXt ( Xie et al., 2017 ), and DenseNet ( Huang et al., 2017 ), the best recognition accuracy and speed on the public ImageNet dataset have been improved repeatedly. To address the mobile deployment of models, researchers have proposed various lightweight architectures, such as Xception ( Chollet, 2017 ), MobileNet ( Howard et al., 2017 ; Sandler et al., 2018 ), ShuffleNet ( Ma et al., 2018 ; Zhang et al., 2018a ), and so on. To provide a stable, efficient, low-cost, and highly intelligent disease identification method, Chao et al. (2020) proposed XDNet, which combines DenseNet and Xception to enhance the feature extraction capability of the model; it achieved an accuracy of 98.82% in identifying five apple leaf diseases with fewer parameters. Liu et al. (2020) adopted the Inception structure and introduced a dense connection strategy to build a new neural network model, which realized real-time and accurate identification of six kinds of grape leaf diseases. In addition, Ramcharan et al. (2019) deployed a trained cassava disease recognition model on a mobile terminal. Field tests under natural conditions found that complex conditions, such as different shooting angles, brightness levels, and occlusion, could adversely affect the performance of the model, which shows that image classification against complex field backgrounds is challenging.

An attention mechanism offers a novel approach to feature extraction. It assigns larger weights to regions of interest and smaller weights to the background, extracting the information that contributes most to classification so that the model can make more accurate judgments. Attention mechanisms have achieved excellent performance in tasks such as classification, detection, and segmentation ( Hu et al., 2018 ; Karthik et al., 2020 ; Mi et al., 2020 ; Hou et al., 2021 ). Inspired by these studies, this study proposes a new CNN for apple leaf disease recognition. The main contributions and innovations of this study are summarized as follows:

1. A new Apple Leaf Disease Identification Data set (ALDID) is constructed. To enhance the generalization performance of the model, image augmentation techniques are used to expand the data set and to simulate apple leaf disease images collected under different conditions, laying a foundation for model training.

2. A novel attention-based apple leaf disease recognition model, the Coordination Attention EfficientNet (CA-ENet), is proposed. A network search technique is first used to determine the optimal structure of the model, yielding the optimal network depth, width, and input image resolution. Then, depth-wise separable convolution is applied in the coordination attention convolution (CA-Conv) basic block to greatly reduce the number of parameters and the risk of overfitting. Finally, a coordinate attention block is embedded in the basic block to integrate feature channel information with spatial position information and to strengthen the ability of the model to learn important information in the lesion area.

The remainder of the study is organized as follows. The section Materials and Methods describes the dataset and its expansion by data augmentation techniques, and introduces the proposed model and the attention visualization methods in detail. The section Experimental Results and Discussion presents the experiments for evaluating the performance of the model, analyzes the experimental results, and discusses the impact of data augmentation and external interference on the performance of the model. The last section, Conclusion and Future Work, summarizes this study and outlines directions for further research.

Materials and Methods

This section describes the materials and methods used in the study in detail, including the collected images of diseased apple leaves and the ALDID built from them by augmentation. It also presents the proposed model and the attention visualization method.

Image Acquisition

The study was conducted from July 2020 to October 2020 at the apple planting experimental station of Northwest A&F University in Qianxian County, Shaanxi Province. A large number of apple leaf images were collected in the field at different angles and distances using a variety of mobile devices. There are a total of 5,170 images with a resolution of 3,000 × 3,000 pixels, covering five categories: Glomerella leaf spot ( Colletotrichum fructicola ), Apple leaf mites ( Panonychus ulmi ), Mosaic (Apple mosaic virus), Apple litura moth ( Spodoptera litura Fabricius ), and Healthy leaves. In addition, 3,000 laboratory images with uniform backgrounds, covering three diseases, namely Black rot ( Physalospora obtusa ), Scab ( Venturia inaequalis ), and Rust ( Gymnosporangium yamadai ), were collected from the public PlantVillage dataset. The two data sets were shuffled and mixed to generate the original data set of common apple diseases.

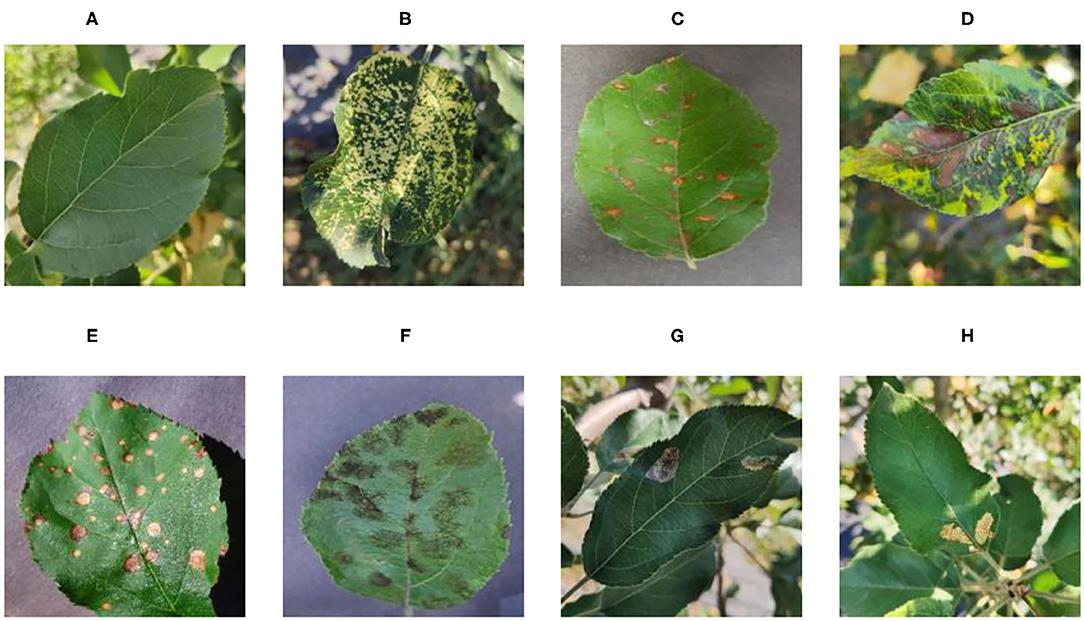

Figure 1 shows random samples of each category in the data set. Many images in the data set have complex backgrounds. In addition, leaves affected by Apple litura moth (G) and Apple leaf mites (H) have relatively similar geometric features, so distinguishing these two diseases is a fine-grained image classification problem. The variety of sample forms increases the diversity of the data set and brings it closer to the situations that may occur in practice. However, it also makes the image classification task harder and places higher demands on the overall performance of the model.

Figure 1 . Eight common apple leaf disease types. (A) Healthy, (B) Mosaic, (C) Rust, (D) Glomerella leaf spot, (E) Black rot, (F) Scab, (G) Apple litura moth, and (H) Apple leaf mites.

Image Augmentation

The collected apple disease images vary in leaf growth position, weather conditions, and shooting angle, and they contain interference factors such as equipment noise. To make the model robust to these irrelevant variations and to avoid overfitting, the images of the dataset need to be expanded and normalized.

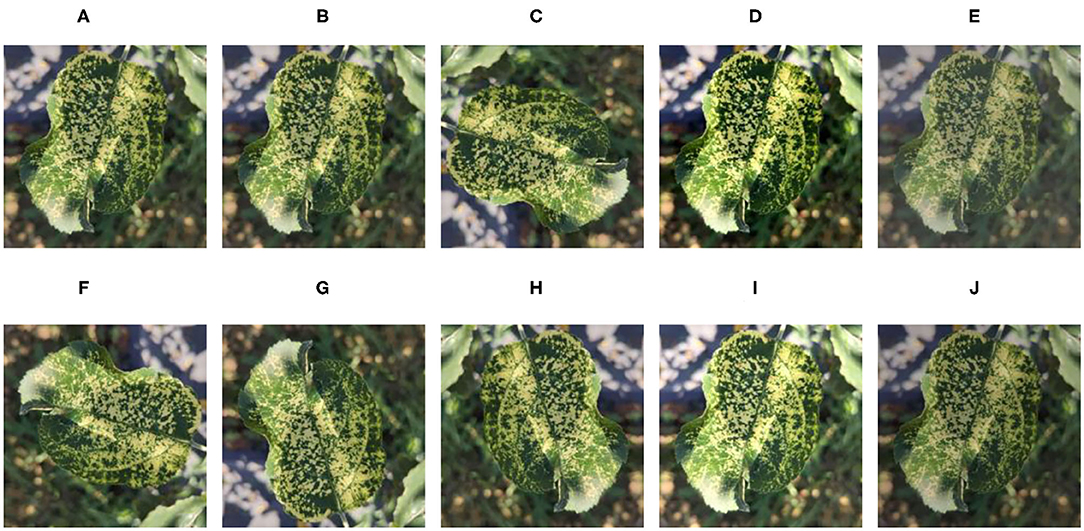

For data expansion, Gaussian blurring, contrast enhancement and reduction by 30%, and brightness enhancement and reduction by 30% are applied to all samples of the original dataset to simulate different weather conditions. The images are also rotated by 90° and 270° and flipped horizontally and vertically to simulate changes in shooting angle, and the generated images are then added to the original data set. The effect of these augmentations on a randomly selected Mosaic disease image is shown in Figure 2 . Table 1 shows the structure of the ALDID. As Table 1 shows, the sample distribution is balanced after image expansion, which helps the model learn the features of each category evenly and supports proper training while reducing the risk of overfitting. The ALDID is divided into a training set and a validation set at a ratio of 4:1. The training set is used to train the model, and the validation set is used to check whether training converges normally and whether overfitting occurs.
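The augmentation step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: images are modeled as grayscale lists of pixel rows, brightness is scaled multiplicatively, contrast is stretched around the image mean, the rotation direction is a convention, and the Gaussian blur uses a hypothetical 3 × 3 kernel because the text does not give the blur radius. The bookkeeping at the end checks that 8,170 original images with ten versions each give the 81,700 images of the ALDID and the 4:1 split.

```python
# Minimal sketch of the ALDID offline augmentation (assumptions noted inline).
# Images are modeled as grayscale lists of rows with values in [0, 255].

def clip(value):
    """Keep a pixel value inside the valid 8-bit range."""
    return max(0.0, min(255.0, value))

def rotate90(img):
    """Rotate by 90 degrees counter-clockwise (direction is an assumption)."""
    return [list(row) for row in zip(*img)][::-1]

def rotate270(img):
    return rotate90(rotate90(rotate90(img)))

def hflip(img):
    return [row[::-1] for row in img]

def vflip(img):
    return [list(row) for row in img[::-1]]

def brightness(img, factor):
    """Scale intensities: factor 1.3 = +30%, 0.7 = -30%."""
    return [[clip(p * factor) for p in row] for row in img]

def contrast(img, factor):
    """Stretch (factor > 1) or compress (factor < 1) intensities around the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [[clip(mean + factor * (p - mean)) for p in row] for row in img]

def gaussian_blur(img):
    """3x3 Gaussian blur with edge replication (kernel size is hypothetical)."""
    kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    h, w = len(img), len(img[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    # Clamp coordinates so border pixels are replicated.
                    y = min(max(i + di, 0), h - 1)
                    x = min(max(j + dj, 0), w - 1)
                    acc += kernel[di + 1][dj + 1] * img[y][x]
            row.append(acc / 16.0)
        out.append(row)
    return out

def augment(img):
    """Return the original image plus the nine variants listed in the text."""
    return {
        "original": img,
        "gaussian_blur": gaussian_blur(img),
        "rotate_90": rotate90(img),
        "rotate_270": rotate270(img),
        "horizontal_flip": hflip(img),
        "vertical_flip": vflip(img),
        "contrast_high": contrast(img, 1.3),
        "contrast_low": contrast(img, 0.7),
        "brightness_high": brightness(img, 1.3),
        "brightness_low": brightness(img, 0.7),
    }

# Dataset bookkeeping: 5,170 field images + 3,000 PlantVillage images,
# each expanded to 10 versions, then split 4:1 into training/validation.
original_count = 5170 + 3000
aldid_count = original_count * len(augment([[0.0]]))
train_count = aldid_count * 4 // 5
val_count = aldid_count - train_count
print(aldid_count, train_count, val_count)  # 81700 65360 16340
```

In practice an image library would perform these operations on RGB images; the pure-Python version only makes each transformation explicit.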

Figure 2 . Image augmentation example of the Mosaic disease. (A) Original image, (B) Gaussian blur, (C) 90° rotation, (D) High contrast, (E) Low contrast, (F) 270° rotation, (G) Horizontal flip, (H) Vertical flip, (I) High brightness, and (J) Low brightness.

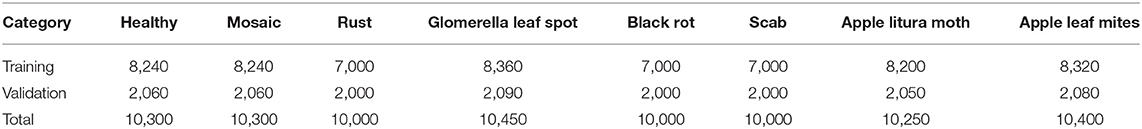

Table 1 . The composition of apple leaf disease identification data set (ALDID).

During training, a large variation in the range of feature values slows the convergence of the model and makes it harder for the model to learn the differences between features; therefore, the images are normalized.

To test the stability of the model, 500 images were randomly selected from each category of the original data set, giving a total of 4,000 images from the eight categories. After these 4,000 images were shuffled, five different interference factors, namely Gaussian noise, salt-and-pepper noise, 180° rotation, 30% sharpness enhancement, and 30% sharpness reduction, were randomly added to generate a Model Robustness Test Data set (MRTD). After training is completed, the MRTD is used to test the model and verify the training effect. This provides a basis for evaluating the model in use.
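The MRTD construction and the normalization step can be sketched as follows. The noise strength, salt-and-pepper ratio, sharpness filter, the choice of exactly one interference per image, and the normalization statistics are all hypothetical, since the text does not specify them. Sharpness is modeled as blending each image with a 3 × 3 mean-filtered copy, using factors 1.3 and 0.7 for the ±30% settings.

```python
import random

def clip(value):
    return max(0.0, min(255.0, value))

def gaussian_noise(img, rng, sigma=10.0):
    """Add zero-mean Gaussian noise (sigma is a hypothetical choice)."""
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]

def salt_and_pepper(img, rng, amount=0.02):
    """Set a random fraction of pixels to 0 or 255 (amount is hypothetical)."""
    out = []
    for row in img:
        new_row = []
        for p in row:
            r = rng.random()
            if r < amount / 2:
                new_row.append(0.0)
            elif r < amount:
                new_row.append(255.0)
            else:
                new_row.append(p)
        out.append(new_row)
    return out

def rotate180(img, rng=None):
    return [row[::-1] for row in img[::-1]]

def box_blur(img):
    """3x3 mean filter with edge replication, used as the smoothed reference."""
    h, w = len(img), len(img[0])
    return [[sum(img[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                 for di in (-1, 0, 1) for dj in (-1, 0, 1)) / 9.0
             for j in range(w)] for i in range(h)]

def sharpness(img, factor):
    """Blend with a blurred copy: factor > 1 sharpens, factor < 1 softens."""
    blurred = box_blur(img)
    return [[clip(b + factor * (p - b)) for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]

# The five interference factors listed in the text.
INTERFERENCES = {
    "gaussian_noise": gaussian_noise,
    "salt_and_pepper": salt_and_pepper,
    "rotate_180": rotate180,
    "sharpness_high": lambda img, rng: sharpness(img, 1.3),
    "sharpness_low": lambda img, rng: sharpness(img, 0.7),
}

def build_mrtd(images_by_class, per_class=500, seed=0):
    """Sample per_class images per category, shuffle, add one random interference."""
    rng = random.Random(seed)
    samples = [(label, img)
               for label, images in images_by_class.items()
               for img in rng.sample(images, per_class)]
    rng.shuffle(samples)
    names = sorted(INTERFERENCES)
    mrtd = []
    for label, img in samples:
        name = rng.choice(names)
        mrtd.append((label, name, INTERFERENCES[name](img, rng)))
    return mrtd

def standardize(img, mean, std):
    """Normalize pixels to zero mean and unit variance using given statistics."""
    return [[(p - mean) / std for p in row] for row in img]
```

With eight categories of at least 500 images each, `build_mrtd` returns 4,000 labeled test samples. In a PyTorch pipeline the normalization would usually be done with `torchvision.transforms.Normalize(mean, std)`.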

CA-ENet Network

Existing CNNs obtain richer and more fine-grained features by increasing network depth, width, or input image resolution, but scaling a single dimension alone brings serious problems such as vanishing gradients and model degradation and cannot achieve better results on its own. The baseline architecture EfficientNet-B0 ( Tan and Quoc, 2019 ) uses neural architecture search (NAS) to optimize these three factors simultaneously; it balances depth, width, and resolution, and the network can be further scaled up with scaling factors. Therefore, in this study, the EfficientNet architecture is used as the feature extraction network.

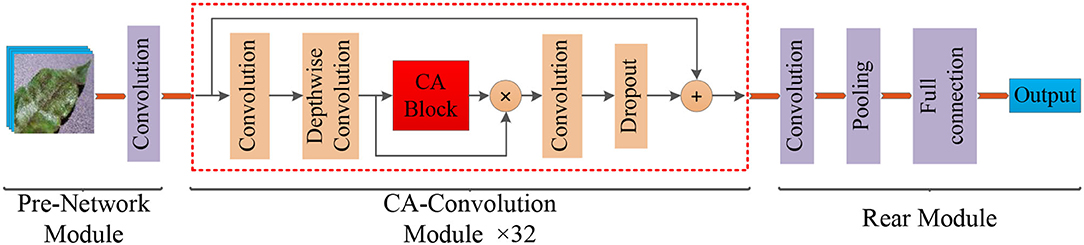

Different types of apple leaf diseases produce lesions with different morphological characteristics, but certain types are highly similar to each other, so apple disease classification can be viewed as a fine-grained image classification problem on which existing models still have difficulty achieving satisfactory results. Therefore, focusing attention on the lesion area is key to improving model effectiveness. The widely used channel attention mechanism SENet ( Hu et al., 2018 ) significantly improves final performance, but it ignores the location information of features, which is also important for generating spatially selective attention maps. To capture these differences, the CA-ENet is proposed for real-time and accurate apple disease identification. The overall structure of the model is shown in Figure 3 .

Figure 3 . Structure of the Coordination Attention EfficientNet (CA-ENet) for apple disease identification.

The model consists of three main parts: the pre-network, which performs convolution and Batch Normalization on the input images; the backbone network of CA-Conv modules for feature extraction; and the rear part, which outputs the recognition result through a fully connected layer. The pre-network applies a single 3 × 3 ordinary convolution with a stride of 1 to the 380 × 380 input image, producing a feature map with 48 channels. The resulting feature matrix is then passed through 32 CA-Conv modules embedded with the CA block. Finally, a 3 × 3 ordinary convolution and pooling further abstract the features, and the result is output through a fully connected layer with eight nodes.

During the model optimization process, a NAS technique is used to search for the optimal model structure. The operation process can be abstractly expressed as Equation (1):

where $\odot$ denotes the composition (successive application) of the stage operators, $F_i^{L_i}$ denotes that the operator $F_i$ is repeated $L_i$ times in stage $i$, $X$ is the input feature matrix, and $\langle H_i, W_i, C_i \rangle$ denotes the height, width, and number of output channels of $X$ in stage $i$. The NAS process can be optimized by adding constraints on model accuracy, number of parameters, and amount of calculation, as in Equations (2)–(5).
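For reference, Equation (1) and the constrained search of Equations (2)–(5) can be written as follows, assuming the standard EfficientNet formulation ( Tan and Quoc, 2019 ). Here $s$ is the number of stages and the hatted symbols denote the fixed settings of the EfficientNet-B0 baseline; this notation is an assumption, and the original typesetting may differ.

```latex
\begin{gather}
N = \bigodot_{i=1}^{s} F_i^{L_i}\left(X_{\langle H_i,\, W_i,\, C_i \rangle}\right) \tag{1}\\
\max_{d,\, w,\, r}\ \operatorname{Accuracy}\big(N(d, w, r)\big) \tag{2}\\
\text{s.t.}\quad N(d, w, r) = \bigodot_{i=1}^{s} \hat{F}_i^{\, d \cdot \hat{L}_i}\left(X_{\langle r \cdot \hat{H}_i,\, r \cdot \hat{W}_i,\, w \cdot \hat{C}_i \rangle}\right) \tag{3}\\
\operatorname{Memory}(N) \le \text{tar\_memory} \tag{4}\\
\operatorname{FLOPS}(N) \le \text{tar\_flops} \tag{5}
\end{gather}
```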

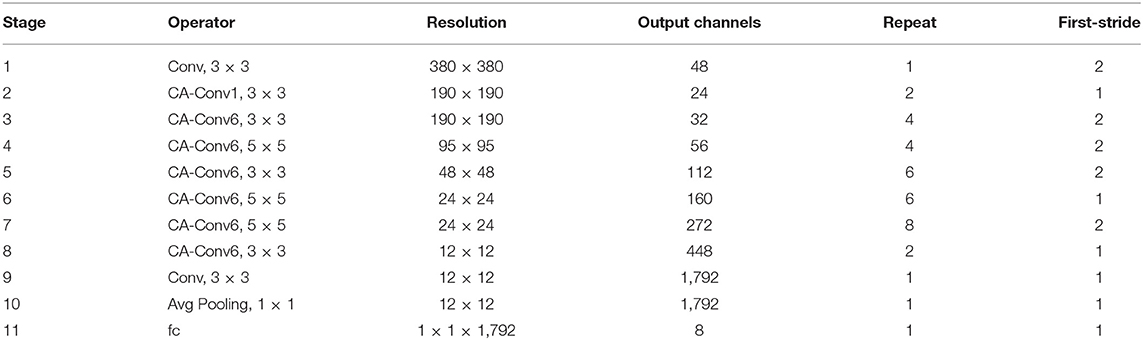

Here, $d$, $w$, and $r$ are the coefficients that scale the depth, width, and resolution of the network, respectively, and tar_memory and tar_flops are the constraints on the number of parameters and the amount of calculation. Through this optimization, the best $d$, $w$, and $r$ values for the EfficientNet-B0 structure can be obtained; on this basis, the depth and width scaling factors $d$ and $w$ of EfficientNet-B4 are 1.8 and 1.4, respectively, and the input image resolution $r$ is 380 × 380 pixels. Using this method, the optimal CA-ENet structure parameters can be calculated; they are shown in Table 2 .
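The effect of the scaling factors can be checked with a short calculation. The sketch below assumes that CA-ENet keeps the EfficientNet-B0 stage layout and uses the channel and depth rounding rules of the public EfficientNet reference implementation; with $d = 1.8$ and $w = 1.4$ it reproduces the 48-channel stem and the 32 CA-Conv blocks described for the model.

```python
import math

# EfficientNet-B0 baseline stages 2-8 (standard EfficientNet layout, assumed here
# to be shared by CA-ENet): (expand_ratio, kernel, first_stride, out_channels, repeats)
B0_STAGES = [
    (1, 3, 1, 16, 1),
    (6, 3, 2, 24, 2),
    (6, 5, 2, 40, 2),
    (6, 3, 2, 80, 3),
    (6, 5, 1, 112, 3),
    (6, 5, 2, 192, 4),
    (6, 3, 1, 320, 1),
]
B0_STEM, B0_HEAD = 32, 1280

def round_filters(channels, width, divisor=8):
    """Scale a channel count by the width factor and round to a multiple of divisor."""
    scaled = channels * width
    rounded = max(divisor, int(scaled + divisor / 2) // divisor * divisor)
    if rounded < 0.9 * scaled:  # never round down by more than 10%
        rounded += divisor
    return int(rounded)

def round_repeats(repeats, depth):
    """Scale the number of blocks in a stage by the depth factor (rounded up)."""
    return int(math.ceil(depth * repeats))

def scale_network(depth=1.8, width=1.4):
    stages = [(e, k, s, round_filters(c, width), round_repeats(n, depth))
              for e, k, s, c, n in B0_STAGES]
    return round_filters(B0_STEM, width), stages, round_filters(B0_HEAD, width)

stem, stages, head = scale_network()
print("stem channels:", stem)
for idx, (e, k, s, c, n) in enumerate(stages, start=2):
    print(f"stage {idx}: expand={e} kernel={k}x{k} stride={s} out={c} repeats={n}")
print("total blocks:", sum(n for *_, n in stages), "head channels:", head)
```

The expansion ratio of 6 in stages 3 to 8 matches the CA-Conv6 blocks described for Table 2.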

Table 2 . Details about coordination attention EfficientNet (CA-ENet).

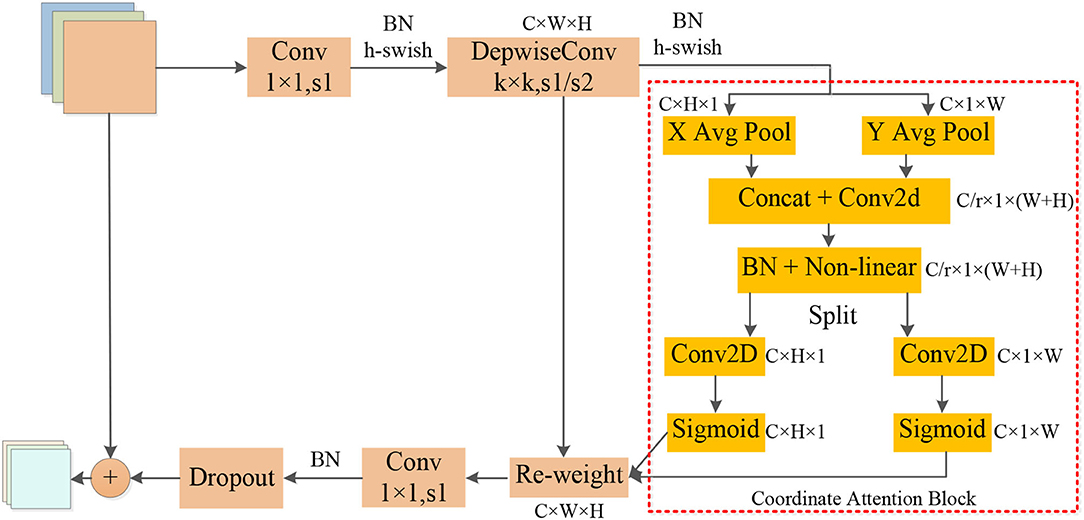

The operators in Table 2 perform arithmetic operations on the input features. The expansion ratio of each CA-Conv6 block in Stage 3–Stage 8 is 6; that is, the first convolution layer increases the depth of the input feature matrix to six times its original value, and the convolution kernel size is 3 × 3 or 5 × 5. The columns resolution, output channels, and repeat give the resolution of the input layer, the depth of the output feature matrix, and the number of times the layer structure is repeated in the depth direction, respectively. The stride given by first-stride applies only to the first layer of each stage; the strides of the other layers are all 1.

The network consists of seven stages of CA-Conv blocks, whose structure is shown in Figure 4 . In each CA-Conv block, the input feature matrix first passes through an ordinary 1 × 1 convolution that expands its dimension. After the h-swish activation function, features are extracted by a depth-wise separable convolution with a kernel size of k × k (k = 3 or 5) and a stride of 1 or 2. The depth-wise separable convolution greatly reduces the number of model parameters and also helps to avoid overfitting. The resulting feature matrix is then split into two branches: one branch passes through a Coordinate Attention Block (CAB), which produces two attention weight maps, and the other branch, left unprocessed, is multiplied by these two weight maps to obtain the weighted feature matrix. Finally, a 1 × 1 convolution reduces the dimension, and the result is added to the input feature matrix and passed to the subsequent structure.
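The parameter saving from depth-wise separable convolution follows from a simple count. The sketch below ignores biases and BN parameters; the 144-channel example corresponds to a 24-channel input expanded six times and is chosen only for illustration. The ratio of the two counts is $1/C_{out} + 1/k^2$.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of an ordinary k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Depth-wise k x k convolution followed by a point-wise 1 x 1 convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

for k in (3, 5):
    std = standard_conv_params(k, 144, 144)
    sep = depthwise_separable_params(k, 144, 144)
    print(f"k={k}: standard={std}, separable={sep}, ratio={sep / std:.3f}")
```

For a 3 × 3 kernel the separable form needs roughly one-eighth of the weights, and the saving grows with the kernel size.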

Figure 4 . Structure of coordination attention convolutional (CA-Conv).

Global pooling compresses global spatial information into a channel descriptor, but this loses location information. To capture precise location information, the CAB in Figure 4 decomposes global pooling into two one-dimensional feature encoding processes according to Equation (6): two one-dimensional average pooling operations along the horizontal and vertical directions aggregate the input features into two separate direction-aware feature maps. This operation captures both direction-aware and position-sensitive information, enabling the model to locate the region of interest more accurately. The two direction-aware feature maps are concatenated along the spatial dimension, and a 1 × 1 convolution compresses the channels, embedding the position information into the channel attention. Then, Batch Normalization (BN) and a non-linear activation function are applied, and the result is split into two parts; a 1 × 1 convolution adjusts the depth of each part to match the input feature, and the position information is preserved in the generated attention maps. Finally, the two attention maps are multiplied with the input features to strengthen the feature representation of the attention region and improve the ability of the network to accurately locate regions of interest.

Because this information embedding directly obtains a global receptive field and encodes accurate position information, a 1 × 1 convolution transformation function $F_1$ is applied to it. As shown in Equation (7), $[z^h, z^w]$ denotes concatenation along the spatial dimension, $\delta$ is the non-linear activation function, and $f$ is the intermediate feature map that encodes spatial information in both the horizontal and vertical directions. $f$ is then split along the spatial dimension into $f^h$ and $f^w$, and two 1 × 1 convolutions transform $f^h$ and $f^w$ into tensors with the same number of channels as the input. The attention weights are calculated by Equations (8) and (9), and the output of the CA block after re-weighting is calculated by Equation (10).
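Equations (6)–(10) can be written as follows, assuming the coordinate attention formulation of Hou et al. (2021). $x_c$ is channel $c$ of the input feature map of height $H$ and width $W$, $\sigma$ is the sigmoid function, $F_h$ and $F_w$ are the two 1 × 1 convolutions, and $g^h$, $g^w$ are the resulting attention weights; these names are notational assumptions where the text does not define them.

```latex
\begin{gather}
z_c^{h}(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i), \qquad
z_c^{w}(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w) \tag{6}\\
f = \delta\big(F_1([z^{h}, z^{w}])\big) \tag{7}\\
g^{h} = \sigma\big(F_h(f^{h})\big) \tag{8}\\
g^{w} = \sigma\big(F_w(f^{w})\big) \tag{9}\\
y_c(i, j) = x_c(i, j) \times g_c^{h}(i) \times g_c^{w}(j) \tag{10}
\end{gather}
```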

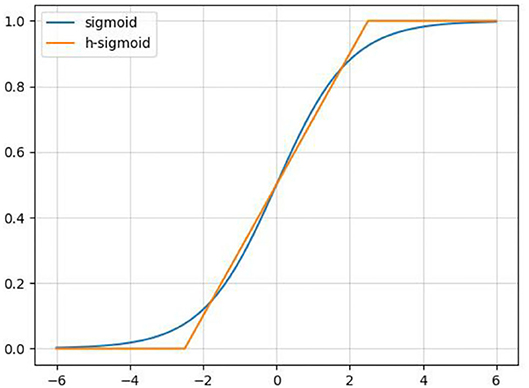

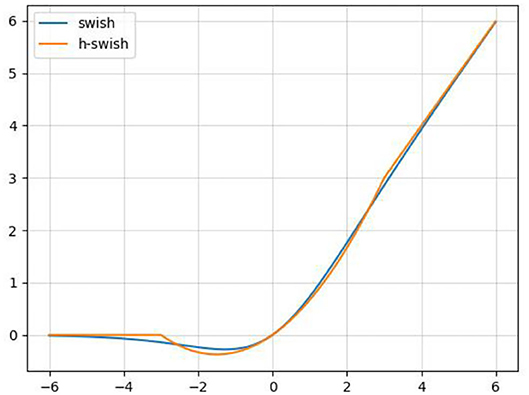
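A dependency-free sketch of the CAB forward pass, following Equations (6)–(10), is given below. It is illustrative only: the helper names `conv1x1` and `coordinate_attention` are hypothetical, weights are plain nested lists, bias terms are dropped, BN is omitted, and h-swish is assumed as the non-linearity $\delta$ (the choice made by Hou et al., 2021). A real implementation would use a deep learning framework such as PyTorch.

```python
import math

def h_sigmoid(v):
    return min(max(v + 3.0, 0.0), 6.0) / 6.0

def h_swish(v):
    return v * h_sigmoid(v)

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def conv1x1(weights, features):
    """1x1 convolution on a [C_in][P] map with a [C_out][C_in] weight matrix (no bias)."""
    positions = len(features[0])
    return [[sum(w * features[c][p] for c, w in enumerate(row)) for p in range(positions)]
            for row in weights]

def coordinate_attention(x, w1, w_h, w_w):
    """x: [C][H][W]; w1: [C_mid][C]; w_h, w_w: [C][C_mid]. Returns a [C][H][W] map."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # Eq. (6): 1-D average pooling along the width (z_h) and along the height (z_w).
    z_h = [[sum(x[c][i]) / W for i in range(H)] for c in range(C)]
    z_w = [[sum(x[c][i][j] for i in range(H)) / H for j in range(W)] for c in range(C)]
    # Eq. (7): concatenate along the spatial axis, shared 1x1 conv F1, then delta.
    # BN is omitted; at inference it reduces to a per-channel affine transform.
    z = [z_h[c] + z_w[c] for c in range(C)]  # shape [C][H + W]
    f = [[h_swish(v) for v in row] for row in conv1x1(w1, z)]
    f_h = [row[:H] for row in f]
    f_w = [row[H:] for row in f]
    # Eqs. (8)-(9): separate 1x1 convs F_h and F_w restore C channels; sigmoid gives weights.
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(w_h, f_h)]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(w_w, f_w)]
    # Eq. (10): re-weight every position by its row weight and its column weight.
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(W)]
             for i in range(H)] for c in range(C)]
```

Because each output position is scaled by a row weight and a column weight, the block can emphasize a lesion at a specific location rather than a whole channel, which is the difference from SE-style channel attention.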

To reduce the amount of calculation and speed up inference while preserving the effect of the activation function, the h-swish activation function ( Howard et al., 2019 ) is applied in CA-Conv. The sigmoid and h-sigmoid activation functions are given in Equations (11) and (12). As Figure 5 shows, the two functions are close and h-sigmoid is simpler to compute, so h-sigmoid can replace sigmoid in the swish function of Equation (13), giving the h-swish function of Equation (14). Figure 6 shows how closely h-swish approximates the swish activation function: the two curves are nearly identical, and h-swish is faster to compute.
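The four activation functions can be written as follows, assuming the standard definitions of Howard et al. (2019), with ReLU6 clipping its input to the range [0, 6].

```latex
\begin{gather}
\sigma(x) = \frac{1}{1 + e^{-x}} \tag{11}\\
\text{h-sigmoid}(x) = \frac{\operatorname{ReLU6}(x + 3)}{6}, \qquad
\operatorname{ReLU6}(x) = \min\big(\max(x, 0), 6\big) \tag{12}\\
\operatorname{swish}(x) = x \cdot \sigma(x) \tag{13}\\
\text{h-swish}(x) = x \cdot \frac{\operatorname{ReLU6}(x + 3)}{6} \tag{14}
\end{gather}
```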

Figure 5 . Schematic diagram of the sigmoid and h-sigmoid activation functions.

Figure 6 . Schematic diagram of the swish and h-swish activation functions.
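The closeness of the hard approximations can be checked numerically. The short script below, a sketch using the standard definitions, evaluates both pairs of functions on a grid over [-8, 8] and reports the largest absolute gaps; the hard versions need only an addition, clipping, and a multiplication or division, with no exponential.

```python
import math

def relu6(x):
    return min(max(x, 0.0), 6.0)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def h_sigmoid(x):
    return relu6(x + 3.0) / 6.0

def swish(x):
    return x * sigmoid(x)

def h_swish(x):
    return x * h_sigmoid(x)

grid = [i / 100.0 for i in range(-800, 801)]  # x in [-8, 8] with step 0.01
max_gap_sigmoid = max(abs(sigmoid(x) - h_sigmoid(x)) for x in grid)
max_gap_swish = max(abs(swish(x) - h_swish(x)) for x in grid)
print(f"max |sigmoid - h-sigmoid| = {max_gap_sigmoid:.3f}")
print(f"max |swish - h-swish|     = {max_gap_swish:.3f}")
```

The largest swish gap occurs near x = ±3, where the clipping in ReLU6 starts; elsewhere the curves almost coincide, as Figure 6 illustrates.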

Experimental Results and Discussion

Model Training Details

To verify the performance of the proposed method, the proposed network is trained on the ALDID. The method is implemented with the PyTorch 1.7.1 deep learning framework, and all experiments were conducted on a server with an Intel® Xeon(R) Gold 5217 CPU @ 3.00 GHz and an NVIDIA Tesla V100 (32 GB) GPU running 64-bit Ubuntu 18.04.5 LTS. To accelerate model convergence while keeping training stable, the initial learning rate is set to 0.01 and decays along a cosine curve during training to a final value of 0.001. All models are trained for 50 epochs.
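The learning-rate schedule described above can be written in closed form. This sketch assumes the learning rate is updated once per epoch; the text does not say whether it is updated per epoch or per iteration, nor which optimizer is used.

```python
import math

def cosine_lr(epoch, total_epochs=50, lr_max=0.01, lr_min=0.001):
    """Cosine annealing from lr_max at epoch 0 down to lr_min at total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))

for epoch in (0, 10, 25, 40, 50):
    print(epoch, round(cosine_lr(epoch), 5))
```

In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=50, eta_min=0.001)`, stepped once per epoch with the optimizer's initial learning rate set to 0.01, follows the same curve.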

Performance of Proposed CA-ENet

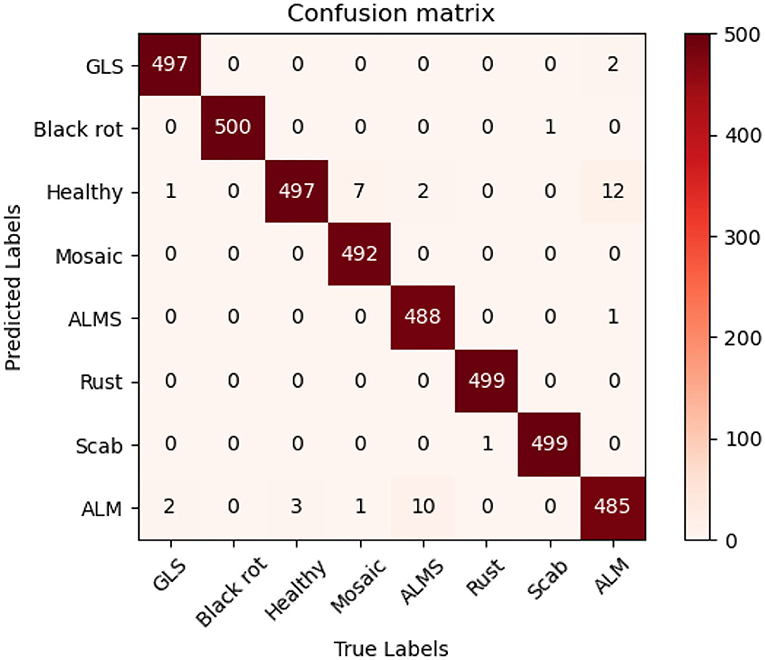

To evaluate the performance of the proposed method, several state-of-the-art methods were evaluated on the MRTD with the same training strategy so that the results are comparable. The test results are visualized with a confusion matrix, in which the names of some diseases are abbreviated for display: “GLS” stands for Glomerella leaf spot, “ALM” for Apple litura moth, and “ALMS” for Apple leaf mites.

Figure 7 shows the classification performance of the Coordination Attention EfficientNet, whose final accuracy reaches 98.92%. Misclassification occurred mainly between Apple leaf mites and Apple litura moth, and between Apple litura moth and Healthy leaves. The main symptom of apple leaf mites is that damaged leaves show many dense, chlorotic gray-white spots. In contrast, the insect spots formed on leaves damaged by apple litura moth are elliptical and dense, and the leaf surface is wrinkled. These two kinds of leaf spots share certain geometric and color characteristics, which leads to misjudgment. Furthermore, because of the complex background, a small number of leaves damaged by apple litura moth were mistakenly identified as healthy leaves. Accurate recognition against complex backgrounds therefore remains a great challenge, but the number of misjudgments made by this model stays low and within an acceptable range. The proposed CA-Conv structure can extract richer fine-grained image features and attends more strongly to the regions of interest. Overall, the model shows good recognition performance and strong robustness on the apple leaf disease recognition problem.

Figure 7 . Confusion matrix of the CA-ENet.
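The per-class precision, recall, and F1-score used in the comparisons can be derived from a confusion matrix such as the one in Figure 7. The sketch assumes that rows are true classes and columns are predictions, and treats the "average F1-score" as the unweighted (macro) mean; the 3-class matrix is hypothetical and is not taken from the paper's results.

```python
def per_class_metrics(cm):
    """cm[i][j] counts samples of true class i predicted as class j (assumed layout)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    precision, recall, f1 = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return {"accuracy": correct / total, "precision": precision,
            "recall": recall, "f1": f1, "macro_f1": sum(f1) / n}

# Hypothetical 3-class matrix for illustration only.
toy = [[8, 2, 0],
       [1, 9, 0],
       [0, 0, 10]]
metrics = per_class_metrics(toy)
print(metrics["accuracy"], [round(v, 3) for v in metrics["f1"]])
```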

Performance Comparison

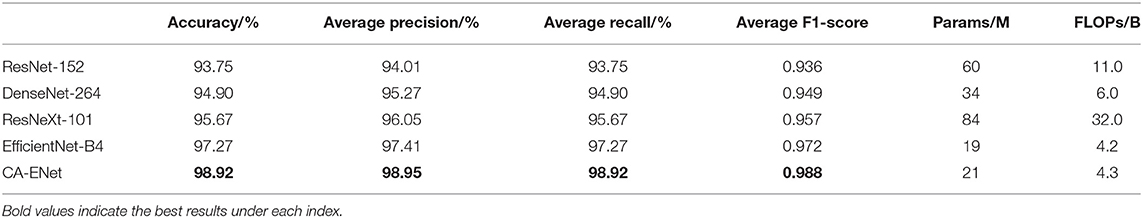

The performance comparison between CA-ENet and other classical networks is shown in Table 3 . The proposed model achieves the best recognition performance on the MRTD, with an accuracy of 98.92%. Accuracy, precision, recall, F1-score, number of parameters, and amount of calculation are used as evaluation indicators. ResNet-152 uses the residual structure to obtain a strong feature learning ability and reaches an accuracy of 93.75%. The Dense Block, the basic structure of DenseNet-264, enhances feature propagation and encourages feature reuse, which allows DenseNet-264 to achieve a higher accuracy than ResNet-152 with nearly half of its parameters. The accuracy of ResNeXt-101 reaches 95.67%; owing to grouped convolution, it achieves better results than ResNet-152 with fewer convolutional layers. Although this structure improves the final accuracy, its large number of parallel branches makes the network highly fragmented, which greatly reduces its computational efficiency.

Table 3 . Performance comparison of the CA-ENet with other classical networks.

EfficientNet uses NAS to simultaneously search and optimize model depth, width, and input image resolution, and scales the model architecture so that its structural proportions are well coordinated. It therefore has clear advantages in extracting robust and reliable feature representations and reaches an overall accuracy of 97.27%. The strong learning ability of the CA module in CA-Conv may cause attention drift and affect model convergence, whereas the inverted residual structure in CA-Conv can suppress features that do not help classification; this keeps the model stable while further improving recognition performance, which supports the effectiveness of the attention mechanism.

Traditional CNNs do not distinguish the importance of information when extracting disease features, and many convolutions repeatedly extract low-contribution information, wasting computational resources. The attention mechanism can automatically extract high-contribution feature components with only a small increase in parameters and calculation. The experimental results also show that, for the identification of apple leaf diseases, the proposed CA-ENet model outperforms the other models on all evaluation indicators with fewer parameters and classifies apple disease images more accurately.

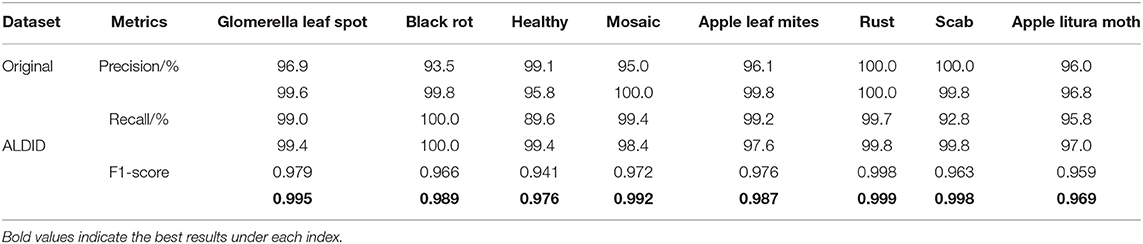

Effect of Data Augmentation on Identification Performance for Each Class

A variety of data expansion methods are used in the ALDID to improve the anti-interference ability of the model in complex situations and to prevent overfitting. To verify the effect of data augmentation, a set of comparative experiments was designed to evaluate its impact on the final classification performance. Table 4 shows the accuracy, recall, and F1-score of the proposed model for each category on the MRTD. For each performance index, the first row gives the performance after training on the original dataset, and the second row gives the performance after training on the ALDID. As Table 4 shows, the image diversity of the original data set is insufficient: the average F1-score of the proposed method trained on the original dataset is 0.969, slightly lower than that obtained on the ALDID, although the model can still classify apple leaf diseases accurately. The results show that the augmented data set is closer to real conditions, which enhances the ability of the model to adapt to complex scenes and improves its anti-interference ability to a certain extent. In addition, the depth-wise separable convolution effectively reduces the number of model parameters and greatly increases training speed.

Table 4 . Performance of the CA-ENet before and after data augmentation.

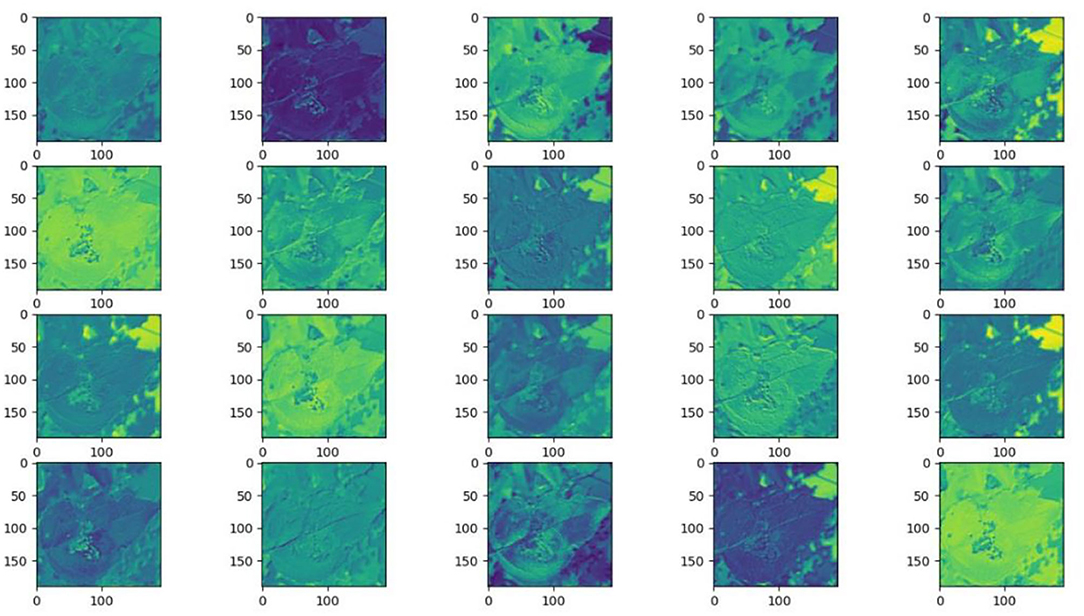

Feature and Network Attention Visualization

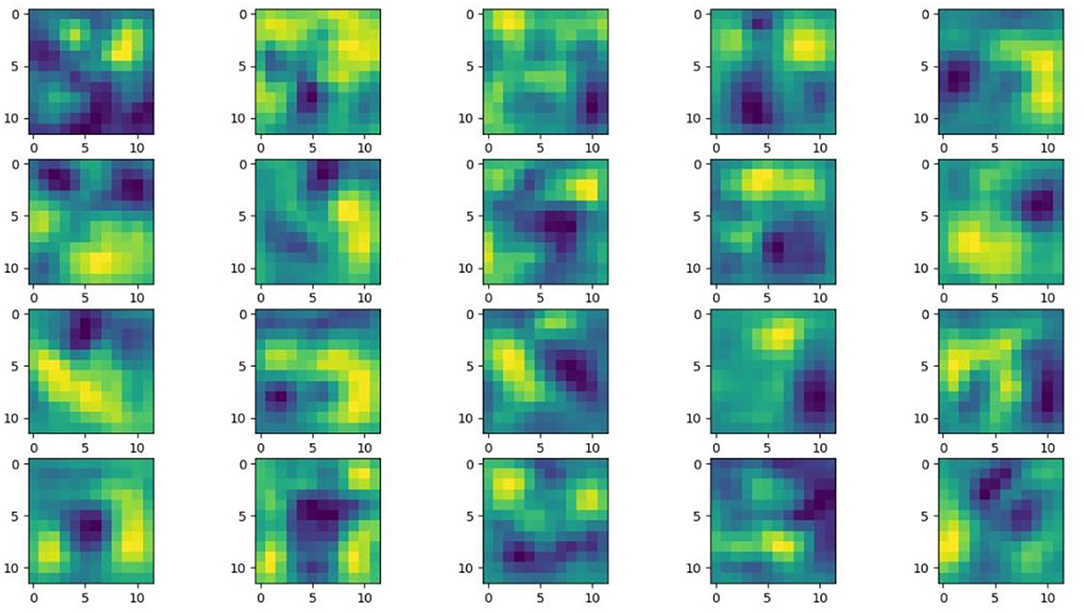

Understanding and analyzing the hidden layers of the model is an important way to comprehensively understand the proposed network structure. CNNs are usually treated as black boxes, and evaluations of model performance are limited to the final accuracy and similar indicators, which has certain deficiencies. Visualization techniques provide a way to explore how CNNs learn features and distinguish categories. Therefore, this section uses layer activation visualization and class activation heatmaps to analyze the performance of the proposed model. Layer activation visualization helps to show how successive convolutional layers extract features and transform the input. Figures 8 and 9 show the output features of the first 20 channels of the first and last CA-Conv structures of the model, respectively, using an apple leaf mites image as an example. In the shallow features of the model, the lesion area is clearly separated from the background, and the characteristics of the disease locations are accurately extracted. The model extracts deep features efficiently and contains only a few failed convolution channels; all of the channel outputs shown here are valid. Therefore, stacking CA-Conv structures does not impair the feature learning ability of the model, and the adopted separable convolution effectively reduces feature redundancy and improves efficiency.
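Preparing feature maps like those in Figures 8 and 9 typically involves taking the first channels of a layer output and rescaling each one to the 0 to 255 display range. The sketch below does this for a [C][H][W] nested list and, as a hypothetical criterion for a "failed" convolution, flags channels whose activation is constant and therefore carries no spatial information; the helper names are illustrative.

```python
def to_uint8_map(channel):
    """Min-max scale one H x W activation map to integers in 0..255 for display."""
    values = [v for row in channel for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:  # constant map: nothing to show
        return [[0 for _ in row] for row in channel]
    return [[round(255 * (v - lo) / (hi - lo)) for v in row] for row in channel]

def first_channels_for_display(feature_map, count=20):
    """feature_map: [C][H][W]. Returns display maps and indices of constant channels."""
    selected = feature_map[:count]
    constant = [i for i, ch in enumerate(selected)
                if max(map(max, ch)) == min(map(min, ch))]
    return [to_uint8_map(ch) for ch in selected], constant
```

The display maps can then be tiled into a grid and rendered with any plotting library.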

Figure 8. Partial output feature maps of the first CA-Conv.

Figure 9. Partial output feature maps of the last CA-Conv.

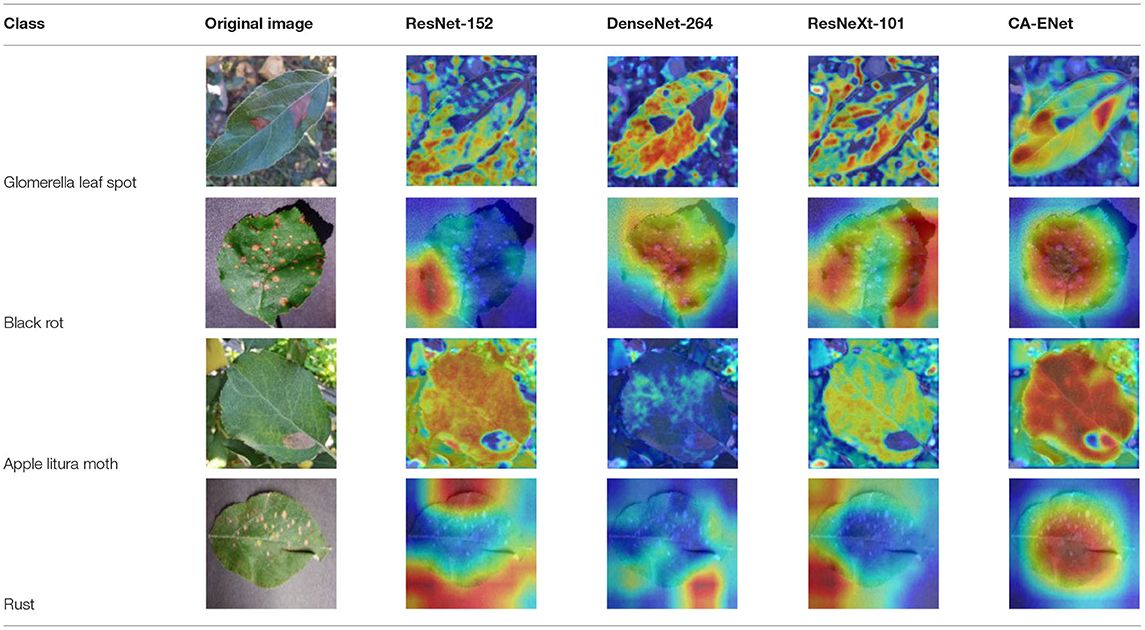
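Feature-map figures such as Figures 8 and 9 are typically produced by capturing a layer's output and tiling its first channels into one image. The NumPy sketch below assumes the activation has already been extracted as a (C, H, W) array. How it is captured depends on the framework and is not shown. The sketch tiles the first 20 channels and counts channels that stay near zero everywhere, which is one possible way to quantify inactive (failed) convolution channels. The threshold `eps` and the grid width are assumptions.

```python
import numpy as np


def tile_feature_maps(features: np.ndarray, n_channels: int = 20,
                      n_cols: int = 5) -> np.ndarray:
    """Place the first n_channels maps of a (C, H, W) activation in one grid image."""
    c, h, w = features.shape
    n = min(n_channels, c)
    n_rows = int(np.ceil(n / n_cols))
    grid = np.zeros((n_rows * h, n_cols * w), dtype=np.float32)
    for idx in range(n):
        fmap = features[idx].astype(np.float32)
        lo, hi = fmap.min(), fmap.max()
        # min-max normalise each channel on its own so weak channels stay visible
        fmap = (fmap - lo) / (hi - lo) if hi > lo else np.zeros_like(fmap)
        r, col = divmod(idx, n_cols)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = fmap
    return grid


def count_inactive_channels(features: np.ndarray, eps: float = 1e-6) -> int:
    """Count channels whose activation magnitude stays below eps everywhere."""
    peak = np.abs(features).reshape(features.shape[0], -1).max(axis=1)
    return int((peak < eps).sum())
```

The grid can be displayed with any image viewer or plotting library. Per-channel normalization makes weak but structured channels visible. The trade-off is that absolute activation strength cannot be compared across tiles.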

Gradient-weighted Class Activation Mapping (Grad-CAM) (Selvaraju et al., 2020) helps to reveal which feature components the model relies on to make its decisions. Table 5 shows the original images and the class activation heatmaps of commonly used models. Sample images of Glomerella leaf spot, black rot, apple litura moth, and rust were randomly selected for testing. Because the coordinate attention block (CAB) is introduced, CA-ENet focuses more strongly on the lesion area. Compared with the other models, CA-ENet localizes well and accurately locates the region of interest, whether the leaf lesion appears against a complex or a simple background. In contrast, the focus positions of ResNet-152, DenseNet-264, and ResNeXt-101 deviate from the lesions or even miss them entirely, which reduces the robustness and accuracy of these models. The class activation heatmaps of the apple leaf diseases show that the proposed model takes the characteristics of the lesions fully into account and achieves superior recognition performance on apple leaf diseases.
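Grad-CAM (Selvaraju et al., 2020) weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score. It then keeps only the positive part of the weighted sum. The NumPy sketch below implements that formula. It assumes the activations and gradients have already been obtained from a framework's automatic differentiation. The choice of layer, upsampling to image size, and the color overlay used for Table 5 are not shown.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer.

    activations: (C, H, W) feature maps A^k of the chosen layer.
    gradients:   (C, H, W) gradients of the class score y^c w.r.t. A^k.
    Returns an (H, W) map scaled to [0, 1].
    """
    # alpha_k: global-average-pooled gradient of each channel
    weights = gradients.mean(axis=(1, 2))                    # (C,)
    # weighted sum over channels, keeping only positive evidence (ReLU)
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The ReLU discards regions that lower the class score, so the heatmap only highlights evidence for the predicted class. This is why a well-localized map concentrates on the lesion rather than on the background.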

Table 5. Comparison of attention heatmaps of different models.

Conclusion and Future Work

This study proposes an improved attention-based deep CNN for identifying common apple leaf diseases, to support the efficient management of orchards. Orchard environments are complex, so to stay close to real application scenarios, 5,170 apple leaf images were collected with multiple mobile devices and 3,000 disease images were obtained from a public dataset. Image augmentation techniques were then used to generate the ALDID, which contains 81,700 images. By embedding a CA block into the CA-Conv module, the model integrates feature channel and location information. Depthwise separable convolution is used to reduce the number of parameters, and the h-swish activation function is used to speed up model convergence. The proposed model was trained on the ALDID and tested on the MRTD. A large number of comparative experiments were conducted, covering various performance evaluation indicators and process visualizations. The experimental results show that the proposed method achieves a recognition accuracy of 98.92%, which is better than that of the other deep learning methods compared and competitive on apple leaf disease identification tasks. This provides a reference for applying deep learning to crop disease classification. The proposed model has a simple structure, fast running speed, good generalization, and robustness, and it has considerable potential for practical application. In future work, a ground mobile inspection platform equipped with cameras will be built to replace manual inspection and to enable rapid diagnosis and early warning of apple diseases.
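The NumPy sketch below gives a rough illustration of the CA block and h-swish mentioned above. It follows the coordinate attention design described by Hou et al. (2021) and the h-swish definition of Howard et al. (2019). It is not the authors' CA-ENet code. Plain matrices stand in for the 1 × 1 convolutions, biases and batch normalization are omitted, and the intermediate width C_mid is left to the caller.

```python
import numpy as np


def relu6(x):
    return np.minimum(np.maximum(x, 0.0), 6.0)


def h_swish(x):
    # hard swish: x * ReLU6(x + 3) / 6, a cheap piecewise approximation of swish
    return x * relu6(x + 3.0) / 6.0


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def coordinate_attention(x, w_reduce, w_h, w_w):
    """Coordinate attention on one feature map (sketch; no bias or batch norm).

    x:        (C, H, W) input features
    w_reduce: (C_mid, C) shared 1x1 transform applied to both pooled directions
    w_h, w_w: (C, C_mid) 1x1 transforms producing height / width attention
    """
    c, h, w = x.shape
    z_h = x.mean(axis=2)                      # (C, H): average-pool along width
    z_w = x.mean(axis=1)                      # (C, W): average-pool along height
    y = np.concatenate([z_h, z_w], axis=1)    # (C, H + W)
    f = h_swish(w_reduce @ y)                 # (C_mid, H + W)
    f_h, f_w = f[:, :h], f[:, h:]             # split back into the two directions
    g_h = sigmoid(w_h @ f_h)                  # (C, H) attention along height
    g_w = sigmoid(w_w @ f_w)                  # (C, W) attention along width
    # reweight every position by its row and column attention
    return x * g_h[:, :, None] * g_w[:, None, :]
```

Because the two pooled descriptors keep one spatial axis each, the resulting weights encode where along the height and width a channel is informative. Ordinary channel attention such as squeeze-and-excitation (Hu et al., 2018) discards this positional information.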

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author Contributions

PW designed and performed the experiment, selected the algorithm, analyzed the data, trained the algorithms, and wrote the manuscript. PW, TN, YM, and ZZ collected data. BL monitored the data analysis. DH conceived the study and participated in its design. All authors contributed to the article and approved the submitted version.

This research was sponsored by the Key Research and Development Program of Shaanxi (2021NY-138 and 2019ZDLNY07-06-01) and by the CCF-Baidu Open Fund (NO. 2021PP15002000).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank the reviewers for their valuable suggestions on this manuscript.

Aravind, K. R., Raja, P., Mukesh, K. V., Aniirudh, R., Ashiwin, R., and Szczepanski, C. (2018). “Disease classification in maize crop using bag of features and multiclass support vector machine,” in 2nd International Conference on Inventive Systems and Control (Coimbatore), 1191–1196. doi: 10.1109/ICISC.2018.8398993

Bi, C., Wang, J., Duan, Y., Fu, B., Kang, J., and Shi, Y. (2020). MobileNet based apple leaf diseases identification. Mobile Netw. Appl. doi: 10.1007/s11036-020-01640-1

Chao, X., Sun, G., Zhao, H., Li, M., and He, D. (2020). Identification of apple tree leaf diseases based on deep learning models. Symmetry 12:1065. doi: 10.3390/sym12071065

Chollet, F. (2017). Xception: deep learning with depthwise separable convolutions. IEEE Conf. Comput. Vision Pattern Recogn. 1800–1807. doi: 10.1109/CVPR.2017.195

Dutot, M., Nelson, L., and Tyson, R. (2013). Predicting the spread of postharvest disease in stored fruit, with application to apples. Postharvest Biol. Technol. 85, 45–56. doi: 10.1016/j.postharvbio.2013.04.003

Guettari, N., Capelle-Laizé, A. S., and Carré, P. (2016). Blind image steganalysis based on evidential k-nearest neighbors. IEEE Int. Conf. Image Process. 2742–2746. doi: 10.1109/ICIP.2016.7532858

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. IEEE Conf. Comput. Vision Pattern Recogn. 770–778. doi: 10.1109/CVPR.2016.90

Hou, Q., Zhou, D., and Feng, J. (2021). Coordinate attention for efficient mobile network design. arXiv [Preprint]. arXiv:2103.02907v1.

Howard, A., Sandler, M., Chen, B., Wang, W., Chen, L., Tan, M., et al. (2019). Searching for MobileNetV3. IEEE Int. Conf. Comput. Vision. 1314–1324. doi: 10.1109/ICCV.2019.00140

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., et al. (2017). Mobilenets: efficient convolutional neural networks for mobile vision applications. arXiv [Preprint]. arXiv:1704.04861.

Hu, J., Shen, L., Albanie, S., and Sun, G. (2018). Squeeze-and-excitation networks. IEEE Conf. Comput. Vision Pattern Recogn. 7132–7141. doi: 10.1109/CVPR.2018.00745

Huang, G., Liu, Z., Maaten, L. V. D., and Weinberger, K. Q. (2017). Densely connected convolutional networks. IEEE Conf. Comput. Vision Pattern Recogn. 2261–2269. doi: 10.1109/CVPR.2017.243

Karthik, R., Hariharan, M., Anand, S., Mathikshara, P., Johnson, A., and Menaka, R. (2020). Attention embedded residual CNN for disease detection in tomato leaves. Appl. Soft Comput. 86:105933. doi: 10.1016/j.asoc.2019.105933

Kodovsky, J., Fridrich, J., and Holub, V. (2012). Ensemble classifiers for steganalysis of digital media. IEEE Trans. Inf. Foren Sec. 7, 432–444. doi: 10.1109/tifs.2011.2175919

Kour, V. P., and Arora, S. (2019). Particle swarm optimization based support vector machine (P-SVM) for the segmentation and classification of plants. IEEE Access 7, 29374–29385. doi: 10.1109/ACCESS.2019.2901900

Kulin, M., Kazaz, T., Moerman, I., and Poorter, E. D. (2018). End-to-end learning from spectrum data: a deep learning approach for wireless signal identification in spectrum monitoring applications. IEEE Access 6, 18484–18501. doi: 10.1109/ACCESS.2018.2818794

Liu, B., Ding, Z., Tian, L., He, D., Li, S., and Wang, H. (2020). Grape leaf disease identification using improved deep convolutional neural networks. Front. Plant Sci 11:1082. doi: 10.3389/fpls.2020.01082

Liu, B., Zhang, Y., He, D., and Li, Y. (2018). Identification of apple leaf diseases based on deep convolutional neural networks. Symmetry 10:11. doi: 10.3390/sym10010011

Ma, N., Zhang, X., Zheng, H. T., and Sun, J. (2018). Shufflenet v2: practical guidelines for efficient CNN architecture design. arXiv [Preprint]. arXiv:1807.11164v1.

Mi, Z., Zhang, X., Su, J., Han, D., and Su, B. (2020). Wheat stripe rust grading by deep learning with attention mechanism and images from mobile devices. Front. Plant Sci. 11:558126. doi: 10.3389/fpls.2020.558126

Mohammadpoor, M., Nooghabi, M. G., and Ahmedi, Z. (2020). An intelligent technique for grape fanleaf virus detection. Int. J. Interact. Multimedia Artif. Intell. 6, 62–67. doi: 10.9781/ijimai.2020.02.001

Prasad, S., Peddoju, S. K., and Ghosh, D. (2016). Multi-resolution mobile vision system for plant leaf disease diagnosis. Signal Image Video Process. 10, 379–388. doi: 10.1007/s11760-015-0751-y

Ramcharan, A., McCloskey, P., Baranowski, K., Mbilinyi, N., Mrisho, L., Ndalahwa, M., et al. (2019). A mobile-based deep learning model for cassava disease diagnosis. Front. Plant Sci. 10:272. doi: 10.3389/fpls.2019.00272

Ren, S., He, K., Girshick, R., and Sun, J. (2017). Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. 39, 1137–1149. doi: 10.1109/tpami.2016.2577031

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., and Chen, L. (2018). MobileNetV2: inverted residuals and linear bottlenecks. IEEE Conf. Comput. Vision Pattern Recogn. 4510–4520. doi: 10.1109/CVPR.2018.00474

Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. (2020). “Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization,” in 2017 IEEE International Conference on Computer Vision (ICCV), 618–626. doi: 10.1109/ICCV.2017.74

Sheikhan, M., Pezhmanpour, M., and Moin, M. S. (2012). Improved contourlet-based steganalysis using binary particle swarm optimization and radial basis neural networks. Neural Comput. Appl. 21, 1717–1728. doi: 10.1007/s00521-011-0729-9

Tan, M., and Le, Q. V. (2019). EfficientNet: rethinking model scaling for convolutional neural networks. arXiv [Preprint]. arXiv:1905.11946.

Wang, X., Zhu, C., Fu, Z., Zhang, L., and Li, X. (2019). Research on cucumber powdery mildew recognition based on visual spectra. Spectrosc. Spectr. Anal. 39, 1864–1869. doi: 10.3964/j.issn.1000-0593(2019)06-1864-06

Xie, S., Girshick, R., Dollár, P., Tu, Z., and He, K. (2017). Aggregated residual transformations for deep neural networks. IEEE Conf. Comput. Vision Pattern Recogn. 5987–5995. doi: 10.1109/CVPR.2017.634

Zhang, X., Zhou, X., Lin, M., and Sun, J. (2018a). ShuffleNet: an extremely efficient convolutional neural network for mobile devices. IEEE Conf. Comput. Vision Pattern Recogn. 6848–6856. doi: 10.1109/CVPR.2018.00716

Zhang, Y., Gravina, R., Lu, H. M., Villari, M., and Fortino, G. (2018b). PEA: parallel electrocardiogram-based authentication for smart healthcare systems. J. Netw. Comput. Appl. 117, 10–16. doi: 10.1016/j.jnca.2018.05.007

Keywords: apple disease, CA-ENet, attention mechanism, CA block, diseases identification

Citation: Wang P, Niu T, Mao Y, Zhang Z, Liu B and He D (2021) Identification of Apple Leaf Diseases by Improved Deep Convolutional Neural Networks With an Attention Mechanism. Front. Plant Sci. 12:723294. doi: 10.3389/fpls.2021.723294

Received: 10 June 2021; Accepted: 23 August 2021; Published: 28 September 2021.

Reviewed by:

Copyright © 2021 Wang, Niu, Mao, Zhang, Liu and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bin Liu, liubin0929@nwsuaf.edu.cn ; Dongjian He, hdj168@nwsuaf.edu.cn



Disease detection in apple leaves using deep convolutional neural network.

1. Introduction

2. Related Works

- Previous models have not fully exploited image augmentation techniques. Our proposed model uses various image augmentation techniques, such as Canny edge detection, flipping, and blurring, to enhance our dataset. These techniques can help in building a robust model.

- The performance of many previously proposed models was not adequate, especially in challenging cases such as identifying leaves with multiple diseases. In our research, we have used an ensemble of pre-trained deep learning models. The advantage of this is that our proposed model combines the predictions of three models and can perform well in challenging situations.

- We have deployed the proposed model as a web application that serves as an easy-to-use system for users. The user uploads an image of the leaf to our web application, and the result is obtained within a couple of seconds.
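Two of the augmentations listed above, flipping and blurring, are simple array operations. The NumPy sketch below assumes images are H × W or H × W × C arrays. It uses a mean (box) filter with an assumed 3 × 3 window, since the blur kernel is not specified here. Canny edge detection is not reimplemented; it is available in OpenCV as `cv2.Canny`.

```python
import numpy as np


def flip_horizontal(img: np.ndarray) -> np.ndarray:
    """Mirror left-right (reverses the width axis)."""
    return img[:, ::-1]


def flip_vertical(img: np.ndarray) -> np.ndarray:
    """Mirror top-bottom (reverses the height axis)."""
    return img[::-1, :]


def box_blur(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Mean filter with a k x k window (k odd); borders padded by edge replication."""
    pad = k // 2
    # pad only the two spatial axes; leave any channel axis untouched
    pad_width = ((pad, pad), (pad, pad)) + ((0, 0),) * (img.ndim - 2)
    padded = np.pad(img.astype(np.float64), pad_width, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    h, w = img.shape[:2]
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)
```

Flips preserve the lesion appearance while changing its position and orientation. Blurring mimics slightly out-of-focus phone photographs. Both help the model avoid relying on incidental image properties.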

3. Methodology

3.1. Dataset Collection

3.1.1. Healthy

3.1.2. Apple Scab

3.1.3. Cedar Apple Rust

3.1.4. Multiple Diseases

3.2. Image Augmentation

3.2.1. Canny Edge Detection

3.2.2. Flipping

3.2.3. Convolution

3.2.4. Blurring

3.3. Dataset Partition

3.4. Modeling

3.4.1. Multiclass Classification

3.4.2. Transfer Learning

3.4.3. Model Structure

3.4.4. DenseNet121

3.4.5. EfficientNet

3.4.6. EfficientNet Noisy Student

3.5. Ensembling

4. Experimental Results and Analyses

4.1. Experimental Setup

4.2. Performance Metrics

4.3. Performance Benchmarking

4.4. Computational Resources

4.5. Model Deployment

4.5.1. Overview

4.5.2. Web Application Workflow

- The user visits our web application and uploads an image of an apple leaf. All of this takes place on the frontend.

- The uploaded image is then sent to the backend, where it is fed to the CNN model. The weights of our proposed model are stored at the backend as an HDF5 file [57], from which the model is loaded. Before being fed to the model, the image is first converted to the required input shape of 512 × 512.

- The model returns a NumPy array of size (4,1) containing the probabilities of the four classes, and the class with the maximum probability is selected.

- The results are shown to the user on the frontend, and the image is stored in our database to help improve the model.
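The last two backend steps amount to combining class probabilities and taking the most likely class. Since the deployed model ensembles three networks, the sketch below averages their probability vectors before the argmax. Simple averaging and the label order in the hypothetical `CLASS_NAMES` list are assumptions. The combination rule and output order are not given here.

```python
from typing import List, Sequence, Tuple

import numpy as np

# Hypothetical label order, following the class list in Section 3.1;
# the real output order of the deployed model is not stated.
CLASS_NAMES = ["healthy", "apple_scab", "cedar_apple_rust", "multiple_diseases"]


def ensemble_predict(prob_vectors: Sequence) -> Tuple[str, np.ndarray]:
    """Average per-model class probabilities and return the top class.

    Each element may be shaped (4,), (4, 1) or (1, 4); all are flattened.
    """
    stacked = np.stack([np.asarray(p, dtype=np.float64).reshape(-1)
                        for p in prob_vectors])       # (n_models, 4)
    mean_probs = stacked.mean(axis=0)                 # simple (unweighted) average
    best = int(np.argmax(mean_probs))
    return CLASS_NAMES[best], mean_probs
```

Averaging lets a confident minority model be outvoted when the other two agree. A weighted average or majority vote are common alternatives if one model is known to be stronger.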

4.5.3. Technologies Used

5. Conclusions

Author Contributions

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

- Apple. Wikipedia. 2021. Available online: https://en.wikipedia.org/wiki/Apple (accessed on 22 April 2021).

- Jordan, M.I.; Mitchell, T.M. Machine Learning: Trends, Perspectives, and Prospects. Science 2015, 349, 255–260.

- Badage, A. Crop disease detection using machine learning: Indian agriculture. Int. Res. J. Eng. Technol. 2018, 5, 866–869.

- Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

- Korkut, U.B.; Göktürk, Ö.B.; Yildiz, O. Detection of Plant Diseases by Machine Learning. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference (SIU), Izmir, Turkey, 2–5 May 2018; pp. 1–4.

- Noble, W.S. What Is a Support Vector Machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Quinlan, J.R. Simplifying Decision Trees. Int. J. Man-Mach. Stud. 1987, 27, 221–234.

- Huang, Y.; Li, L. Naive Bayes Classification Algorithm Based on Small Sample Set. In Proceedings of the 2011 IEEE International Conference on Cloud Computing and Intelligence Systems, Beijing, China, 15–17 September 2011; pp. 34–39.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Kumar, E.P.; Sharma, E.P. Artificial neural networks-a study. Int. J. Emerg. Eng. Res. Technol. 2014, 2, 143–148.

- Pardede, H.F.; Suryawati, E.; Sustika, R.; Zilvan, V. Unsupervised Convolutional Autoencoder-Based Feature Learning for Automatic Detection of Plant Diseases. In Proceedings of the 2018 International Conference on Computer, Control, Informatics and Its Applications (IC3INA), Tangerang, Indonesia, 1–2 November 2018; pp. 158–162.

- Howard, A.G. Some Improvements on Deep Convolutional Neural Network Based Image Classification. arXiv 2013, arXiv:1312.5402.

- Boulent, J.; Foucher, S.; Théau, J.; St-Charles, P.-L. Convolutional Neural Networks for the Automatic Identification of Plant Diseases. Front. Plant Sci. 2019, 10, 941.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 24 May 2019; pp. 6105–6114.

- Xie, Q.; Luong, M.-T.; Hovy, E.; Le, Q.V. Self-Training With Noisy Student Improves ImageNet Classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10684–10695.

- Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A Survey on Deep Transfer Learning. In Artificial Neural Networks and Machine Learning-ICANN 2018, Lecture Notes in Computer Science; Kůrková, V., Manolopoulos, Y., Hammer, B., Iliadis, L., Maglogiannis, I., Eds.; Springer: Cham, Switzerland, 2018; Volume 11141.

- Steel, M.F.J. Model Averaging and Its Use in Economics. J. Econ. Lit. 2020, 58, 644–719.

- Selvaraj, M.G.; Vergara, A.; Ruiz, H.; Safari, N.; Elayabalan, S.; Ocimati, W.; Blomme, G. AI-Powered Banana Diseases and Pest Detection. Plant Methods 2019, 15, 92.

- Early Detection and Classification of Plant Diseases with Support Vector Machines Based on Hyperspectral Reflectance. Comput. Electron. Agric. 2010, 74, 91–99.

- Sinha, A.; Shekhawat, R.S. Review of Image Processing Approaches for Detecting Plant Diseases. IET Image Process. 2019, 14, 1427–1439.

- Ramesh, S.; Hebbar, R.; Niveditha, M.; Pooja, R.; Shashank, N.; Vinod, P.V. Plant Disease Detection Using Machine Learning. In Proceedings of the 2018 International Conference on Design Innovations for 3Cs Compute Communicate Control (ICDI3C), Bangalore, India, 25–28 April 2018; pp. 41–45.

- Yang, X.; Guo, T. Machine Learning in Plant Disease Research. Eur. J. Biomed. Res. 2017, 3, 6–9.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using Deep Learning for Image-Based Plant Disease Detection. Front. Plant Sci. 2016, 7.

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Plant Disease Detection and Classification by Deep Learning. Plants 2019, 8, 468.

- Goncharov, P.; Ososkov, G.; Nechaevskiy, A.; Uzhinskiy, A.; Nestsiarenia, I. Disease Detection on the Plant Leaves by Deep Learning. In Proceedings of the International Conference on Neuroinformatics, Moscow, Russia, 8–12 October 2018; Kryzhanovsky, B., Dunin-Barkowski, W., Redko, V., Tiumentsev, Y., Eds.; Advances in Neural Computation, Machine Learning, and Cognitive Research II. Springer International Publishing: Cham, Switzerland, 2019; pp. 151–159.

- Sun, J.; Yang, Y.; He, X.; Wu, X. Northern Maize Leaf Blight Detection Under Complex Field Environment Based on Deep Learning. IEEE Access 2020, 8, 33679–33688.

- Vine Disease Detection in UAV Multispectral Images Using Optimized Image Registration and Deep Learning Segmentation Approach. Comput. Electron. Agric. 2020, 174, 105446.

- Identification of Rice Diseases Using Deep Convolutional Neural Networks. Neurocomputing 2017, 267, 378–384.

- Amara, J.; Bouaziz, B.; Algergawy, A. A Deep Learning-Based Approach for Banana Leaf Diseases Classification. In Datenbanksysteme für Business, Technologie und Web (BTW 2017)-Workshopband; Mitschang, B., Nicklas, D., Leymann, F., Schöning, H., Herschel, M., Teubner, J., Härder, T., Kopp, O., Wieland, M., Eds.; Gesellschaft für Informatik e.V.: Bonn, Germany, 2017; pp. 79–88.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- Factors Influencing the Use of Deep Learning for Plant Disease Recognition. Biosyst. Eng. 2018, 172, 84–91.

- Liu, B.; Zhang, Y.; He, D.; Li, Y. Identification of Apple Leaf Diseases Based on Deep Convolutional Neural Networks. Symmetry 2018, 10, 11.

- Chuanlei, Z.; Shanwen, Z.; Jucheng, Y.; Yancui, S.; Jia, C. Apple Leaf Disease Identification Using Genetic Algorithm and Correlation Based Feature Selection Method. Int. J. Agric. Biol. Eng. 2017, 10, 74–83.

- Dai, B.; Qiu, T.; Ye, K. Foliar Disease Classification. Available online: http://noiselab.ucsd.edu/ECE228/projects/Report/15Report.pdf (accessed on 20 April 2021).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper With Convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Sardoğan, M.; Özen, Y.; Tuncer, A. Detection of Apple Leaf Diseases Using Faster R-CNN. Düzce Üniversitesi Bilim Teknol. Derg. 2020, 8, 1110–1117.

- Jiang, P.; Chen, Y.; Liu, B.; He, D.; Liang, C. Real-Time Detection of Apple Leaf Diseases Using Deep Learning Approach Based on Improved Convolutional Neural Networks. IEEE Access 2019, 7, 59069–59080.

- Jeong, J.; Park, H.; Kwak, N. Enhancement of SSD by Concatenating Feature Maps for Object Detection. arXiv 2017, arXiv:1705.09587.

- Plant Pathology 2020-FGVC7. Available online: https://kaggle.com/c/plant-pathology-2020-fgvc7/data/ (accessed on 20 April 2021).

- Perez, L.; Wang, J. The Effectiveness of Data Augmentation in Image Classification Using Deep Learning. arXiv 2017, arXiv:1712.04621.

- Glossary-Convolution. Available online: https://homepages.inf.ed.ac.uk/rbf/HIPR2/convolve.htm (accessed on 18 April 2021).

- OpenCV: Smoothing Images. Available online: https://docs.opencv.org/master/d4/d13/tutorial_py_filtering.html (accessed on 18 April 2021).

- Lin, M.; Chen, Q.; Yan, S. Network In Network. arXiv 2014, arXiv:1312.4400.

- Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation Functions: Comparison of Trends in Practice and Research for Deep Learning. arXiv 2018, arXiv:1811.03378.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Nain, A. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. Available online: https://medium.com/@nainaakash012/efficientnet-rethinking-model-scaling-for-convolutional-neural-networks-92941c5bfb95 (accessed on 25 May 2021).

- Ju, C.; Bibaut, A.; van der Laan, M. The Relative Performance of Ensemble Methods with Deep Convolutional Neural Networks for Image Classification. J. Appl. Stat. 2018, 45, 2800–2818.

- Kaggle: Your Machine Learning and Data Science Community. Available online: https://www.kaggle.com/ (accessed on 20 April 2021).

- Tensor Processing Units (TPUs) Documentation. Available online: https://www.kaggle.com/docs/tpu (accessed on 20 April 2021).

- Tf.Data.Dataset | TensorFlow Core v2.4.1. Available online: https://www.tensorflow.org/api_docs/python/tf/data/Dataset (accessed on 20 April 2021).

- Seaborn: Statistical Data Visualization—Seaborn 0.11.1 Documentation. Available online: https://seaborn.pydata.org/#:~:text=Seaborn%20is%20a%20Python%20data,attractive%20and%20informative%20statistical%20graphics (accessed on 20 April 2021).

- Mishra, A. Metrics to Evaluate Your Machine Learning Algorithm. Available online: https://towardsdatascience.com/metrics-to-evaluate-your-machine-learning-algorithm-f10ba6e38234 (accessed on 18 April 2021).

- Available online: https://github.com/prakhar070/apple-disease-detection (accessed on 20 April 2021).

- The HDF5® Library & File Format. The HDF Group. Available online: https://www.hdfgroup.org/solutions/hdf5/ (accessed on 22 April 2021).

| Split | DenseNet121 | EfficientNetB7 | NoisyStudent | Our Model |
|---|---|---|---|---|
| 0.10 | 0.9398 | 0.9416 | 0.9095 | 0.9344 |
| 0.15 | 0.9526 | 0.9562 | 0.9124 | 0.9625 |
| 0.20 | 0.9511 | 0.9506 | 0.9233 | 0.9499 |
| 0.25 | 0.9414 | 0.9471 | 0.8991 | 0.9211 |
| 0.30 | 0.9232 | 0.9376 | 0.9091 | 0.9301 |

| Split | DenseNet121 | EfficientNetB7 | NoisyStudent | Our Model |
|---|---|---|---|---|
| 0.10 | 0.8432 | 0.8637 | 0.8344 | 0.8555 |
| 0.15 | 0.8637 | 0.8901 | 0.8479 | 0.9091 |
| 0.20 | 0.9496 | 0.8871 | 0.8544 | 0.8963 |
| 0.25 | 0.8598 | 0.8388 | 0.8282 | 0.8446 |
| 0.30 | 0.8876 | 0.8876 | 0.8298 | 0.8991 |

| Split | DenseNet121 | EfficientNetB7 | NoisyStudent | Our Model |
|---|---|---|---|---|
| 0.10 | 0.8442 | 0.8637 | 0.8344 | 0.8518 |
| 0.15 | 0.8637 | 0.8961 | 0.8429 | 0.8977 |
| 0.20 | 0.9496 | 0.8870 | 0.8542 | 0.8951 |
| 0.25 | 0.8221 | 0.8388 | 0.8238 | 0.8331 |
| 0.30 | 0.8806 | 0.8806 | 0.8301 | 0.8881 |

| Split | DenseNet121 | EfficientNetB7 | NoisyStudent | Our Model |
|---|---|---|---|---|
| 0.10 | 0.8442 | 0.8637 | 0.8329 | 0.8497 |
| 0.15 | 0.8637 | 0.8922 | 0.8453 | 0.9098 |
| 0.20 | 0.9496 | 0.8871 | 0.8576 | 0.8732 |
| 0.25 | 0.8560 | 0.8382 | 0.8249 | 0.8490 |
| 0.30 | 0.8839 | 0.8839 | 0.8290 | 0.8987 |

| Model | Accuracy |
|---|---|
| DenseNet | 0.9260 |
| GoogleNet | 0.9530 |
| EfficientNetB7 | 0.9562 |
| ResNet20 | 0.9370 |
| VggNet-16 | 0.9400 |
| Our Model | 0.9625 |


Bansal, P.; Kumar, R.; Kumar, S. Disease Detection in Apple Leaves Using Deep Convolutional Neural Network. Agriculture 2021 , 11 , 617. https://doi.org/10.3390/agriculture11070617


Research on deep learning in apple leaf disease recognition

College of Information and Electrical Engineering, China Agricultural University, Beijing 100083, China


Computers and Electronics in Agriculture

• DenseNet-121 is used as the backbone network.

• Three methods were proposed to identify apple leaf diseases.

• The recognition accuracy is improved compared with the traditional method.

Apple leaf diseases are a major factor affecting apple production and cause huge economic losses every year, so the identification of apple leaf diseases is of great significance. Based on the DenseNet-121 deep convolutional network, three methods were proposed to identify apple leaf diseases: regression, multi-label classification, and a focal loss function. An apple leaf image dataset containing 2462 images of six apple leaf diseases was used for data modeling and method evaluation. The proposed methods achieved accuracies of 93.51%, 93.31%, and 93.71% on the test set, respectively. All three are better than the 92.29% accuracy of the traditional multi-class classification method based on the cross-entropy loss function.
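The third method relies on a focal-style loss, listed among the author tags as a "focus loss function". The abstract gives no formula or hyperparameters. The sketch below therefore uses the standard multi-class focal loss for one sample, -α(1 - p_t)^γ log p_t, with γ = 2 and α = 1 as assumed defaults. With γ = 0 and α = 1 it reduces to the cross-entropy loss of the baseline method.

```python
import math
from typing import Sequence


def focal_loss(probs: Sequence[float], target: int,
               gamma: float = 2.0, alpha: float = 1.0) -> float:
    """Focal loss for one sample given softmax probabilities.

    probs:  predicted class probabilities (summing to 1)
    target: index of the true class
    gamma:  focusing parameter; larger values down-weight easy examples more
    alpha:  constant weighting factor (assumed default)
    """
    p_t = min(max(probs[target], 1e-12), 1.0)   # clamp to avoid log(0)
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


# Cross-entropy would give -log(0.9) ~= 0.105 here; the focal term
# (1 - 0.9)**2 = 0.01 shrinks this easy example's loss by a factor of 100.
easy = focal_loss([0.05, 0.9, 0.05], target=1)
hard = focal_loss([0.6, 0.3, 0.1], target=1)
```

Because well-classified images contribute almost nothing, training effort shifts toward hard or under-represented disease classes. This is the usual motivation for preferring a focal loss over plain cross-entropy on imbalanced leaf datasets.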



Published in Computers and Electronics in Agriculture (Elsevier B.V., Netherlands)

- Published: 1 January 2020

Author Tags

- Deep learning

- Apple leaves

- Disease recognition

- Multi-label classification

- Focus loss function

- Cross entropy loss function


https://dl.acm.org/doi/10.1016/j.compag.2019.105146


Research Article

A method of detecting apple leaf diseases based on improved convolutional neural network

- Jie Di (Roles: Writing – original draft)

Affiliation: Center for Innovation Management Research of Xinjiang, School of Economics and Management, Xinjiang University, Urumqi, Xinjiang, China

- Q. Li* (Roles: Supervision, Writing – review & editing; corresponding author)

- Published: February 1, 2022

- https://doi.org/10.1371/journal.pone.0262629


Apple tree diseases have troubled orchard farmers for many years, and numerous studies have investigated deep learning for detecting diseases of fruit and vegetable crops. Because apple leaf veins are complex and varied and similar diseases are difficult to distinguish, a new deep-learning-based object detection model for apple leaf diseases, DF-Tiny-YOLO, is proposed to achieve faster and more effective automatic detection. Images of four common apple leaf diseases (1,404 images in total) were selected for data modeling and method evaluation, and three main improvements were made. First, feature reuse was realized by incorporating DenseNet-style dense connections, which mitigate vanishing gradients in deep layers, strengthen feature propagation, and improve detection accuracy. Second, Resize and Re-organization (Reorg) operations were introduced and the convolution kernels were compressed, reducing the model's parameters and computation, increasing detection speed, and allowing feature maps to be stacked for feature fusion. Third, the end of the network applies 1 × 1, 1 × 1, and 3 × 3 convolution kernels in turn to reduce feature dimensionality and increase network depth without increasing computational complexity, further improving detection accuracy. The results showed that the mean average precision (mAP) and average intersection over union (IoU) of the DF-Tiny-YOLO model were 99.99% and 90.88%, respectively, and the detection speed reached 280 FPS. Compared with the Tiny-YOLO and YOLOv2 network models, the proposed method significantly improves detection performance and can detect apple leaf diseases quickly and effectively.
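The abstract names the Resize and Re-organization (Reorg) step without defining it. Assuming it behaves like the passthrough (reorg) layer popularized by YOLOv2, namely a parameter-free space-to-depth rearrangement, the NumPy sketch below converts a higher-resolution shallow feature map into a lower-resolution map with more channels so that it can be concatenated ("stacked") with a deeper map for feature fusion. The function name `reorg`, the stride of 2, the channel ordering, and the tensor shapes are illustrative assumptions; framework implementations may order channels differently.

```python
import numpy as np

def reorg(x, stride=2):
    """Space-to-depth: (N, C, H, W) -> (N, C*stride*stride, H//stride, W//stride)."""
    n, c, h, w = x.shape
    if h % stride or w % stride:
        raise ValueError("H and W must be divisible by stride")
    # Split each spatial axis into (block index, offset within block).
    x = x.reshape(n, c, h // stride, stride, w // stride, stride)
    # Move the two in-block offsets in front of the channel axis.
    x = x.transpose(0, 3, 5, 1, 2, 4)
    # Merge (row offset, column offset, channel) into one channel axis.
    return x.reshape(n, c * stride * stride, h // stride, w // stride)

# Hypothetical shallow map (2 channels, 4x4) and deeper map (8 channels, 2x2).
shallow = np.arange(1 * 2 * 4 * 4, dtype=float).reshape(1, 2, 4, 4)
deep = np.zeros((1, 8, 2, 2))

# Feature fusion by stacking along the channel axis.
fused = np.concatenate([reorg(shallow), deep], axis=1)
print(fused.shape)  # (1, 16, 2, 2)
```

Because the rearrangement only moves values, it adds no weights: each output channel holds one of the stride × stride pixel offsets of one input channel.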

Citation: Di J, Li Q (2022) A method of detecting apple leaf diseases based on improved convolutional neural network. PLoS ONE 17(2): e0262629. https://doi.org/10.1371/journal.pone.0262629

Editor: Nguyen Quoc Khanh Le, Taipei Medical University, TAIWAN

Received: July 15, 2021; Accepted: December 31, 2021; Published: February 1, 2022

Copyright: © 2022 Di, Li. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All data files are available from the PlantVillage database ( https://plantvillage.org/ ). These are third party data (i.e., data not owned or collected by the author(s)) and we confirm that we did not have any special access privileges that others would not have.

Funding: National Natural Science Foundation of China (82060265) and the Department of Human Resources and Social Security of Xinjiang Uygur Autonomous Region (042419001).

Competing interests: The authors have declared that no competing interests exist.

1. Introduction

Apples have been cultivated in China for over 2,000 years, the planting area has expanded annually, and 65% of all apples in the world are now produced in China. Supported by national economic policies, the traditional apple industry has been modernized, and China has gradually become a major power in the apple industry. Nonetheless, behind the rapid development of apple cultivation, disease prevention and control have long troubled orchard farmers [ 1 ], who identify apple diseases by drawing on experience, books, and the Internet, or by consulting professional technicians [ 2 ]. However, relying solely on these sources hinders timely and effective identification of diseases and may introduce errors from subjective judgment. Effective automated detection of apple diseases during production not only enables prompt monitoring of apple health but also helps orchard farmers identify diseases correctly. Farmers can then implement timely prevention and control to avoid large-scale outbreaks, which is crucial for promoting healthy apple growth and increasing the economic benefits of orchards [ 3 , 4 ].

Hundreds of apple diseases affect the fruit, leaves, branches, roots, and other organs, but they often appear first on the leaves, which are easy to observe, collect, and manage. Leaves are therefore an important reference for disease identification, and effective automated detection of leaf diseases is essential. However, distinguishing among diseases is difficult because of the complexity of leaf veins [ 5 ], and existing experimental detection methods have produced unsatisfactory results [ 6 ].

Many methods based on traditional algorithms have been developed for detecting apple leaf diseases: artificial neural networks, genetic algorithms [ 7 ], support vector machines (SVMs), and clustering algorithms [ 8 ] have all been applied to features extracted from apple leaves. Li and He [ 9 ] established a back-propagation (BP) neural network model for intelligent recognition that can diagnose apple leaf diseases from low-resolution images. Rong-Ming, Wei [ 10 ] proposed a method for extracting apple leaf disease spots from hyperspectral images. Omrani, Khoshnevisan [ 11 ] proposed an approach based on an artificial neural network (ANN) and SVMs to detect three apple leaf diseases. Shuaibu, Lee [ 12 ] used hyperspectral images to detect marssonina blotch in apples at different stages, applying orthogonal subspace projection for feature selection and redundancy reduction, which yielded better results. Zhang, Zhang [ 7 ] used a genetic algorithm and a correlation-based feature selection method to identify three kinds of apple leaf diseases, with an average recognition rate of 90%. Although traditional methods can extract a large number of classification features, it is difficult to select a few optimal features with general feature selection methods, so these methods tend to have low recognition rates and weak generalization ability.
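Several of the traditional approaches above depend on choosing a small subset from many hand-crafted features. As a generic illustration only (the correlation-based method of Zhang, Zhang [ 7 ] is not described here), the sketch below implements a simple greedy correlation filter: features are ranked by their strongest absolute Pearson correlation with a one-vs-rest class indicator, and a feature is skipped if it is almost collinear with one already chosen. The function names, the one-vs-rest relevance score, the 0.9 redundancy threshold, and the synthetic data are all assumptions.

```python
import numpy as np

def abs_corr(a, b):
    """Absolute Pearson correlation; 0 if either vector is constant."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return 0.0 if denom == 0 else abs((a * b).sum()) / denom

def select_features(X, y, k, redundancy_threshold=0.9):
    """Greedy correlation filter (illustrative, not the cited authors' method).

    X: (n_samples, n_features); y: (n_samples,) integer class labels.
    Relevance of a feature = max |corr| with any one-vs-rest class indicator.
    """
    classes = np.unique(y)
    relevance = np.array([
        max(abs_corr(X[:, j], (y == c).astype(float)) for c in classes)
        for j in range(X.shape[1])
    ])
    selected = []
    for j in np.argsort(-relevance):  # most relevant first
        # Skip features that nearly duplicate one already chosen.
        if all(abs_corr(X[:, j], X[:, s]) < redundancy_threshold for s in selected):
            selected.append(int(j))
        if len(selected) == k:
            break
    return selected

# Synthetic data: three classes, one informative feature, its near-copy, and noise.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 30)
informative = y + 0.3 * rng.standard_normal(90)
duplicate = informative + 0.01 * rng.standard_normal(90)
noise = rng.standard_normal(90)
X = np.column_stack([noise, informative, duplicate])
print(select_features(X, y, k=2))
```

In this toy example only one of the two near-identical informative columns is kept; the redundancy check rejects the other, so the remaining slot goes to the noise column.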

Since object detection technology emerged in the 1960s, many researchers in China and abroad have focused on developing new techniques. After Krizhevsky, Sutskever [ 13 ] presented the AlexNet architecture at the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012, object detection based on deep learning gradually became mainstream [ 14 ]. Instead of relying on manual feature extraction, deep learning extracts features hierarchically [ 15 ], which more closely resembles the human visual mechanism and yields more accurate detection [ 16 ]. Many studies of deep-learning-based object detection have emerged [ 17 ], and applications to disease detection in fruit and vegetable crops continue to be proposed. Based on the AlexNet model, Brahimi, Boukhalfa [ 18 ] classified nine tomato diseases using more than 10,000 tomato disease images with simple backgrounds from the PlantVillage database, further improving the recognition rate. Priyadharshini, Arivazhagan [ 19 ] proposed a deep CNN-based architecture (a modified LeNet) for classifying four maize leaf diseases, and the trained model achieved 97.89% accuracy. To detect small disease spots on grape leaves effectively, Zhu, Cheng [ 20 ] proposed a detection algorithm based on a convolutional neural network and super-resolution image enhancement, which greatly improved detection performance. Zhang, Zhang [ 21 ] improved the structure of the LeNet convolutional neural network to cope with leaf shape, illumination, and image background, which easily affect detection with traditional recognition methods; their model recognized six cucumber diseases with an average recognition rate of 90.32%. To overcome the difficulty of detecting overlapping or occluded mangoes, Xue, Huang [ 22 ] proposed an improved Tiny-YOLO mango detection network with dense connections, achieving an accuracy of 97.02%. Xiao, Li-ren [ 23 ] proposed the feature pyramid network single-shot multibox detector (FPN-SSD) model to detect top soybean leaflets in collected images, automatically detecting soybean leaves and classifying leaf morphology. The wide application of deep learning to the detection of fruit and vegetable diseases has greatly advanced automatic disease detection [ 24 , 25 ].

Convolutional neural network methods, such as the SSD model and the R-CNN and YOLO series of algorithms, are now being applied to classify and identify apple leaf diseases, and many improved schemes have been built on these algorithms. Jiang, Chen [ 26 ] proposed the INAR-SSD (SSD with an Inception module and Rainbow concatenation) model to detect five common apple leaf diseases in real time with high accuracy (78.80% mAP), demonstrating that real-time detection of apple leaf diseases is feasible. Liu, Zhang [ 27 ] designed a new structure based on the deep convolutional neural network AlexNet, further improving the identification of apple leaf diseases. Based on the DenseNet-121 deep convolutional network, Zhong and Zhao [ 28 ] proposed three methods (regression, multi-label classification, and a focus loss function) to identify apple leaf diseases, achieving recognition accuracies above 93%, better than the traditional multi-class method based on the cross-entropy loss function. Jan and Ahmad [ 29 ] developed an apple pest and disease diagnostic system to predict apple scab and leaf spot. Features including entropy, energy, inverse difference moment (IDM), mean, standard deviation (SD), and perimeter were extracted from apple leaf images, and a multi-layer perceptron (MLP) pattern classifier was trained on 11 apple leaf image features, reaching 99.1% diagnostic accuracy. Yu, Son [ 30 ] proposed a method based on a region-of-interest-aware deep convolutional neural network (ROI-aware DCNN) that makes deep features more discriminative and improves classification performance for apple leaf disease identification, with an average accuracy of 84.3%. Sun, Xu [ 31 ] proposed a lightweight CNN model that can be deployed on mobile devices to detect five common apple leaf diseases in real time, with an mAP of 83.12%.
Di and Qu [ 32 ] applied the Tiny-YOLO model to apple leaf diseases, and the results showed that the model was effective.
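Jan and Ahmad [ 29 ] are summarized here without details of how their texture descriptors were computed. Entropy, energy, and IDM are commonly computed from a gray-level co-occurrence matrix (GLCM), so the sketch below assumes that construction: a GLCM for a single horizontal pixel offset on an 8-bit grayscale image quantized to 8 gray levels, plus the intensity mean and standard deviation. The offset, the number of levels, the function names, and the test images are illustrative assumptions, and the perimeter feature (a shape descriptor) is omitted.

```python
import numpy as np

def glcm(img, levels=8, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for one non-negative offset (dx, dy)."""
    # Quantize 8-bit intensities into `levels` bins (0 .. levels-1).
    q = (img.astype(np.int64) * levels) // 256
    h, w = q.shape
    ref = q[: h - dy, : w - dx]   # reference pixels
    nbr = q[dy:, dx:]             # neighbours at offset (dy, dx)
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)  # count co-occurring pairs
    return m / m.sum()

def texture_features(img, levels=8):
    """GLCM energy, entropy, and IDM, plus intensity mean and SD."""
    p = glcm(img, levels)
    i, j = np.indices(p.shape)
    nz = p[p > 0]  # skip zero entries to avoid log(0)
    return {
        # Angular second moment; some libraries report its square root as "energy".
        "energy": float((p ** 2).sum()),
        "entropy": float(-(nz * np.log2(nz)).sum()),
        # Inverse difference moment: large when neighbouring gray levels are similar.
        "idm": float((p / (1.0 + (i - j) ** 2)).sum()),
        "mean": float(img.mean()),
        "sd": float(img.std()),
    }

# Hypothetical 16x16 grayscale patches: uniform versus random texture.
flat = np.full((16, 16), 100, dtype=np.uint8)
noisy = np.random.default_rng(0).integers(0, 256, size=(16, 16)).astype(np.uint8)
print(texture_features(flat))
print(texture_features(noisy))
```

A uniform patch concentrates the whole GLCM in one cell (maximal energy and IDM, zero entropy), whereas random texture spreads it out; in a diagnostic system along these lines, such values would form part of the feature vector fed to the MLP classifier.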