
Code for voicing silent speech from EMG. Official repository for the papers "Digital Voicing of Silent Speech" at EMNLP 2020 and "An Improved Model for Voicing Silent Speech" at ACL 2021. Also includes code for converting silent speech to text.

dgaddy/silent_speech

Voicing Silent Speech

This repository contains code for synthesizing speech audio from silently mouthed words captured with electromyography (EMG). It is the official repository for the papers "Digital Voicing of Silent Speech" at EMNLP 2020 and "An Improved Model for Voicing Silent Speech" at ACL 2021, and for the dissertation "Voicing Silent Speech". The current commit contains only the most recent model, but the versions from prior papers can be found in the commit history. On an ASR-based open vocabulary evaluation, the latest model achieves a word error rate (WER) of approximately 36%. Audio samples can be found here.
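For readers unfamiliar with the metric, the following is a minimal sketch of how a word error rate is conventionally computed: the word-level edit distance between a reference transcript and a hypothesis, divided by the number of reference words. The function name `word_error_rate` is hypothetical and is not part of this repository, whose evaluation runs an ASR model over the synthesized audio before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference prefix seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of a hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[-1] / len(ref)
```

With this definition, one substitution and one deletion against a six-word reference give a WER of 2/6, so a WER of 36% corresponds to roughly one word-level error for every three reference words.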

The repository also includes code for directly converting silent speech to text. See the section labeled Silent Speech Recognition.

The EMG and audio data can be downloaded from https://doi.org/10.5281/zenodo.4064408. The scripts expect the data to be located in an emg_data subdirectory by default, but the location can be overridden with flags (see the top of read_emg.py).

Force-aligned phonemes from the Montreal Forced Aligner have been included as a git submodule, which must be updated using the process described in "Environment Setup" below. Note that no exception is raised if the alignment directory is not found; logged phoneme prediction accuracies of exactly 100% are a sign that the directory has not been loaded correctly.
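Because a missing alignment directory fails silently, it can help to check for it explicitly before training. The sketch below is not part of the repository; `require_alignments` is a hypothetical helper, and the directory you pass to it is whatever path your checkout uses for the alignment data.

```python
from pathlib import Path


def require_alignments(directory):
    """Raise early if the alignment directory is missing or empty."""
    path = Path(directory)
    if not path.is_dir() or not any(path.iterdir()):
        raise FileNotFoundError(
            f"alignment directory missing or empty: {path}"
        )
    return path
```

Calling this once at startup turns the silent failure described above into an immediate error.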

Environment Setup

We strongly recommend running in Anaconda. To create a new environment with all required dependencies, run:

This will install with CUDA 11.8.

You will also need to pull git submodules for Hifi-GAN and the phoneme alignment data, using the following commands:

Use the following commands to download pre-trained DeepSpeech model files for evaluation. It is important that you use DeepSpeech version 0.7.0 model files for evaluation numbers to be consistent with the original papers. Note that more recent DeepSpeech packages such as version 0.9.3 can be used as long as they are compatible with version 0.7.x model files.

(Optional) Training will be faster if you re-run the audio cleaning, which will save re-sampled audio so it doesn't have to be re-sampled every training run.

Pre-trained Models

Pre-trained models for the vocoder and transduction model are available at https://doi.org/10.5281/zenodo.6747411.

To train an EMG to speech feature transduction model, use

where hifigan_finetuned/checkpoint is a trained HiFi-GAN generator model (optional). At the end of training, an ASR evaluation will be run on the validation set if a HiFi-GAN model is provided.

To evaluate a model on the test set, use

By default, the scripts now use a larger validation set than was used in the original EMNLP 2020 paper, since the small size of the original set gave WER evaluations a high variance. If you want to use the original validation set, you can add the flag --testset_file testset_origdev.json.

HiFi-GAN Training

The HiFi-GAN model is fine-tuned from a multi-speaker model to the voice of this dataset. Spectrograms predicted from the transduction model are used as input for fine-tuning instead of gold spectrograms. To generate the files needed for HiFi-GAN fine-tuning, run the following with a trained model checkpoint:

The resulting files can be used for fine-tuning using the instructions in the hifi-gan repository. The pre-trained model was fine-tuned for 75,000 steps, starting from the UNIVERSAL_V1 model provided by the HiFi-GAN repository. Although the HiFi-GAN is technically fine-tuned for the output of a specific transduction model, we found it to transfer quite well and shared a single HiFi-GAN for most experiments.

Silent Speech Recognition

This section is about converting silent speech directly to text rather than synthesizing speech audio. The speech-to-text model uses the same neural architecture but with a CTC decoder, and achieves a WER of approximately 28% (as described in the dissertation Voicing Silent Speech).
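To illustrate what a CTC decoder does with per-frame network outputs, here is a minimal greedy decoding sketch: take the best label for each frame, merge consecutive repeats, then drop the blank label. This is a simplification for illustration only. The function name `ctc_greedy_decode` and the toy alphabet are assumptions, and the recognition code in this repository relies on the ctcdecode library with a language model rather than on greedy decoding.

```python
from itertools import groupby


def ctc_greedy_decode(frame_scores, alphabet, blank=0):
    """Collapse per-frame best labels into an output string.

    frame_scores: one list of label scores per time frame.
    alphabet: maps a label index to its character; index `blank` is skipped.
    """
    # Pick the highest-scoring label index for every frame.
    best = [max(range(len(frame)), key=frame.__getitem__)
            for frame in frame_scores]
    # Merge runs of identical labels, then remove blanks.
    collapsed = [label for label, _ in groupby(best)]
    return "".join(alphabet[label] for label in collapsed if label != blank)
```

The blank label is what lets the output contain doubled letters: two runs of the same label separated by a blank survive the merge step as two characters.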

You will need to install the ctcdecode library (1.0.3) in addition to the libraries listed above to use the recognition code. (This package cannot be built successfully on Windows.)

You will also need to download a KenLM language model, such as this one from DeepSpeech:

Pre-trained model weights can be downloaded from https://doi.org/10.5281/zenodo.7183877.

To train a model, run

To run a test set evaluation on a saved model, use


AlterEgo is a non-invasive, wearable, peripheral neural interface that allows humans to converse in natural language with machines, artificial intelligence assistants, services, and other people without any voice—without opening their mouth, and without externally observable movements—simply by articulating words internally. The feedback to the user is given through audio, via bone conduction, without disrupting the user's usual auditory perception, and making the interface closed-loop. This enables a human-computer interaction that is subjectively experienced as completely internal to the human user—like speaking to one's self.

A primary focus of this project is to help support communication for people with speech disorders including conditions like ALS (amyotrophic lateral sclerosis) and MS (multiple sclerosis). Beyond that, the system has the potential to seamlessly integrate humans and computers—such that computing, the Internet, and AI would weave into our daily life as a "second self" and augment our cognition and abilities.

The wearable system captures peripheral neural signals when internal speech articulators are volitionally and neurologically activated, during a user's internal articulation of words. This enables a user to transmit and receive streams of information to and from a computing device or any other person without any observable action, in discretion, without unplugging the user from her environment, without invading the user's privacy.

AlterEgo: A Personalized Wearable Silent Speech Interface

A. Kapur, S. Kapur, and P. Maes, "AlterEgo: A Personalized Wearable Silent Speech Interface." 23rd International Conference on Intelligent User Interfaces (IUI 2018), pp 43-53, March 5, 2018.

Non-Invasive Silent Speech Recognition in Multiple Sclerosis with Dysphonia

Kapur, A., Sarawgi, U., Wadkins, E., Wu, M., Hollenstein, N., & Maes, P. (2020, April 30). Non-Invasive Silent Speech Recognition in Multiple Sclerosis with Dysphonia. Retrieved July 20, 2020, from http://proceedings.mlr.press/v116/kapur20a.html

MIT News | Massachusetts Institute of Technology

Computer system transcribes words users “speak silently”

MIT researchers have developed a computer interface that can transcribe words that the user verbalizes internally but does not actually speak aloud.

The system consists of a wearable device and an associated computing system. Electrodes in the device pick up neuromuscular signals in the jaw and face that are triggered by internal verbalizations — saying words “in your head” — but are undetectable to the human eye. The signals are fed to a machine-learning system that has been trained to correlate particular signals with particular words.

The device also includes a pair of bone-conduction headphones, which transmit vibrations through the bones of the face to the inner ear. Because they don’t obstruct the ear canal, the headphones enable the system to convey information to the user without interrupting conversation or otherwise interfering with the user’s auditory experience.

The device is thus part of a complete silent-computing system that lets the user undetectably pose and receive answers to difficult computational problems. In one of the researchers’ experiments, for instance, subjects used the system to silently report opponents’ moves in a chess game and just as silently receive computer-recommended responses.

“The motivation for this was to build an IA device — an intelligence-augmentation device,” says Arnav Kapur, a graduate student at the MIT Media Lab, who led the development of the new system. “Our idea was: Could we have a computing platform that’s more internal, that melds human and machine in some ways and that feels like an internal extension of our own cognition?”

“We basically can’t live without our cellphones, our digital devices,” says Pattie Maes, a professor of media arts and sciences and Kapur’s thesis advisor. “But at the moment, the use of those devices is very disruptive. If I want to look something up that’s relevant to a conversation I’m having, I have to find my phone and type in the passcode and open an app and type in some search keyword, and the whole thing requires that I completely shift attention from my environment and the people that I’m with to the phone itself. So, my students and I have for a very long time been experimenting with new form factors and new types of experience that enable people to still benefit from all the wonderful knowledge and services that these devices give us, but do it in a way that lets them remain in the present.”

The researchers describe their device in a paper they presented at the Association for Computing Machinery’s ACM Intelligent User Interface conference. Kapur is first author on the paper, Maes is the senior author, and they’re joined by Shreyas Kapur, an undergraduate major in electrical engineering and computer science.

Subtle signals

The idea that internal verbalizations have physical correlates has been around since the 19th century, and it was seriously investigated in the 1950s. One of the goals of the speed-reading movement of the 1960s was to eliminate internal verbalization, or “subvocalization,” as it’s known.

But subvocalization as a computer interface is largely unexplored. The researchers’ first step was to determine which locations on the face are the sources of the most reliable neuromuscular signals. So they conducted experiments in which the same subjects were asked to subvocalize the same series of words four times, with an array of 16 electrodes at different facial locations each time.

The researchers wrote code to analyze the resulting data and found that signals from seven particular electrode locations were consistently able to distinguish subvocalized words. In the conference paper, the researchers report a prototype of a wearable silent-speech interface, which wraps around the back of the neck like a telephone headset and has tentacle-like curved appendages that touch the face at seven locations on either side of the mouth and along the jaws.

But in current experiments, the researchers are getting comparable results using only four electrodes along one jaw, which should lead to a less obtrusive wearable device.

Once they had selected the electrode locations, the researchers began collecting data on a few computational tasks with limited vocabularies — about 20 words each. One was arithmetic, in which the user would subvocalize large addition or multiplication problems; another was the chess application, in which the user would report moves using the standard chess numbering system.

Then, for each application, they used a neural network to find correlations between particular neuromuscular signals and particular words. Like most neural networks, the one the researchers used is arranged into layers of simple processing nodes, each of which is connected to several nodes in the layers above and below. Data are fed into the bottom layer, whose nodes process them and pass them to the next layer, whose nodes process them and pass them to the next layer, and so on. The output of the final layer yields the result of some classification task.
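The layer-by-layer processing described above can be sketched in a few lines of plain Python. This is a generic feed-forward classifier, not the researchers' network: the layer sizes, the ReLU and softmax functions, and every name below are assumptions chosen for illustration.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer; weights[k] holds the input weights of node k."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Simple per-node nonlinearity."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn the final layer's scores into class probabilities."""
    shift = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, layers):
    """Pass features through each (weights, biases) layer in order."""
    activations = features
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))
```

In a word classifier of this shape, each output probability would correspond to one word in the limited vocabulary, and the highest probability gives the predicted word.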

The basic configuration of the researchers’ system includes a neural network trained to identify subvocalized words from neuromuscular signals, but it can be customized to a particular user through a process that retrains just the last two layers.
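Retraining only the last two layers means that the earlier layers keep their weights while the final ones are adjusted to the new user. A minimal sketch of that idea follows, assuming plain gradient descent and flat weight lists; the article does not describe the optimizer or data layout, and `personalize_step` is a hypothetical name.

```python
def personalize_step(layers, grads, lr=0.01, trainable_last=2):
    """Apply one gradient step to the last `trainable_last` layers only.

    layers and grads are parallel lists of flat weight lists, one per layer.
    Returns new layers; the earlier (frozen) layers are copied unchanged.
    """
    first_trainable = max(0, len(layers) - trainable_last)
    updated = []
    for index, (weights, grad) in enumerate(zip(layers, grads)):
        if index < first_trainable:
            updated.append(list(weights))  # frozen layer: keep weights as-is
        else:
            updated.append([w - lr * g for w, g in zip(weights, grad)])
    return updated
```

Freezing the early layers keeps the number of adjustable parameters small, which is one plausible reason a short per-user calibration session can be enough.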

Practical matters

Using the prototype wearable interface, the researchers conducted a usability study in which 10 subjects spent about 15 minutes each customizing the arithmetic application to their own neurophysiology, then spent another 90 minutes using it to execute computations. In that study, the system had an average transcription accuracy of about 92 percent.

But, Kapur says, the system’s performance should improve with more training data, which could be collected during its ordinary use. Although he hasn’t crunched the numbers, he estimates that the better-trained system he uses for demonstrations has an accuracy rate higher than that reported in the usability study.

In ongoing work, the researchers are collecting a wealth of data on more elaborate conversations, in the hope of building applications with much more expansive vocabularies. “We’re in the middle of collecting data, and the results look nice,” Kapur says. “I think we’ll achieve full conversation some day.”

“I think that they’re a little underselling what I think is a real potential for the work,” says Thad Starner, a professor in Georgia Tech’s College of Computing. “Like, say, controlling the airplanes on the tarmac at Hartsfield Airport here in Atlanta. You’ve got jet noise all around you, you’re wearing these big ear-protection things — wouldn’t it be great to communicate with voice in an environment where you normally wouldn’t be able to? You can imagine all these situations where you have a high-noise environment, like the flight deck of an aircraft carrier, or even places with a lot of machinery, like a power plant or a printing press. This is a system that would make sense, especially because oftentimes in these types of situations people are already wearing protective gear. For instance, if you’re a fighter pilot, or if you’re a firefighter, you’re already wearing these masks.”

“The other thing where this is extremely useful is special ops,” Starner adds. “There’s a lot of places where it’s not a noisy environment but a silent environment. A lot of time, special-ops folks have hand gestures, but you can’t always see those. Wouldn’t it be great to have silent-speech for communication between these folks? The last one is people who have disabilities where they can’t vocalize normally. For example, Roger Ebert did not have the ability to speak anymore because he lost his jaw to cancer. Could he do this sort of silent speech and then have a synthesizer that would speak the words?”

Press mentions

Smithsonian Magazine

Smithsonian reporter Emily Matchar spotlights AlterEgo, a device developed by MIT researchers to help people with speech pathologies communicate. “A lot of people with all sorts of speech pathologies are deprived of the ability to communicate with other people,” says graduate student Arnav Kapur. “This could restore the ability to speak for people who can’t.”

WCVB-TV’s Mike Wankum visits the Media Lab to learn more about a new wearable device that allows users to communicate with a computer without speaking by measuring tiny electrical impulses sent by the brain to the jaw and face. Graduate student Arnav Kapur explains that the device is aimed at exploring, “how do we marry AI and human intelligence in a way that’s symbiotic.”

Fast Company

Fast Company reporter Eillie Anzilotti highlights how MIT researchers have developed an AI-enabled headset device that can translate silent thoughts into speech. Anzilotti explains that one of the factors that is motivating graduate student Arnav Kapur to develop the device is “to return control and ease of verbal communication to people who struggle with it.”

Quartz reporter Anne Quito spotlights how graduate student Arnav Kapur has developed a wearable device that allows users to access the internet without speech or text and could help people who have lost the ability to speak vocalize their thoughts. Kapur explains that the device is aimed at augmenting ability.

Axios reporter Ina Fried spotlights how graduate student Arnav Kapur has developed a system that can detect speech signals. “The technology could allow those who have lost the ability to speak to regain a voice while also opening up possibilities of new interfaces for general purpose computing,” Fried explains.

After several years of experimentation, graduate student Arnav Kapur developed AlterEgo, a device to interpret subvocalization that can be used to control digital applications. Describing the implications as “exciting,” Katharine Schwab at Co.Design writes, “The technology would enable a new way of thinking about how we interact with computers, one that doesn’t require a screen but that still preserves the privacy of our thoughts.”

The Guardian

AlterEgo, a device developed by Media Lab graduate student Arnav Kapur, “can transcribe words that wearers verbalise internally but do not say out loud, using electrodes attached to the skin,” writes Samuel Gibbs of The Guardian. “Kapur and team are currently working on collecting data to improve recognition and widen the number of words AlterEgo can detect.”

Popular Science

Researchers at the Media Lab have developed a device, known as “AlterEgo,” which allows an individual to discreetly query the internet and control devices by using a headset “where a handful of electrodes pick up the miniscule electrical signals generated by the subtle internal muscle motions that occur when you silently talk to yourself,” writes Rob Verger for Popular Science.

New Scientist

A new headset developed by graduate student Arnav Kapur reads the small muscle movements in the face that occur when the wearer thinks about speaking, and then uses “artificial intelligence algorithms to decipher their meaning,” writes Chelsea Whyte for New Scientist. Known as AlterEgo, the device “is directly linked to a program that can query Google and then speak the answers.”

Previous item Next item

Related Links

- Paper: “AlterEgo: A personalized wearable silent speech interface”

- Arnav Kapur

- Pattie Maes

- Fluid Interfaces group

- School of Architecture and Planning

Related Topics

- Assistive technology

- Computer science and technology

- Artificial intelligence

Related Articles

Recycling air pollution to make art

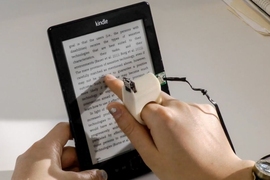

Finger-mounted reading device for the blind

‘Wise chisels’: Art, craftsmanship, and power tools

More mit news.

A launchpad for entrepreneurship in aerospace

Read full story →

Ensuring a durable transition

J-PAL North America announces new evaluation incubator collaborators from state and local governments

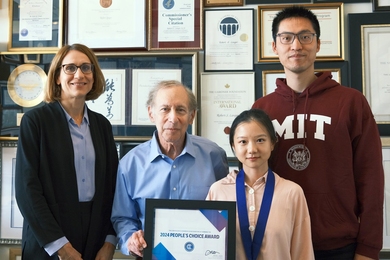

Linzixuan (Rhoda) Zhang wins 2024 Collegiate Inventors Competition

Dancing with currents and waves in the Maldives

School of Engineering faculty receive awards in summer 2024

- More news on MIT News homepage →

Massachusetts Institute of Technology 77 Massachusetts Avenue, Cambridge, MA, USA

- Map (opens in new window)

- Events (opens in new window)

- People (opens in new window)

- Careers (opens in new window)

- Accessibility

- Social Media Hub

- MIT on Facebook

- MIT on YouTube

- MIT on Instagram

Cornell Chronicle

AI-equipped eyeglasses can read silent speech

By Louis DiPietro, Cornell Ann S. Bowers College of Computing and Information Science

It may look like Ruidong Zhang is talking to himself, but in fact the doctoral student in the field of information science is silently mouthing the passcode to unlock his nearby smartphone and play the next song in his playlist.

It’s not telepathy: It’s the seemingly ordinary, off-the-shelf eyeglasses he’s wearing, called EchoSpeech – a silent-speech recognition interface that uses acoustic-sensing and artificial intelligence to continuously recognize up to 31 unvocalized commands, based on lip and mouth movements.

Ruidong Zhang, a doctoral student in the field of information science, wearing EchoSpeech glasses.

Developed by Cornell’s Smart Computer Interfaces for Future Interactions (SciFi) Lab, the low-power, wearable interface requires just a few minutes of user training data before it will recognize commands and can be run on a smartphone, researchers said.

Zhang is the lead author of “EchoSpeech: Continuous Silent Speech Recognition on Minimally-obtrusive Eyewear Powered by Acoustic Sensing,” which will be presented at the Association for Computing Machinery Conference on Human Factors in Computing Systems (CHI) this month in Hamburg, Germany.

“For people who cannot vocalize sound, this silent speech technology could be an excellent input for a voice synthesizer. It could give patients their voices back,” Zhang said of the technology’s potential use with further development.

In its present form, EchoSpeech could be used to communicate with others via smartphone in places where speech is inconvenient or inappropriate, like a noisy restaurant or quiet library. The silent speech interface can also be paired with a stylus and used with design software like CAD, all but eliminating the need for a keyboard and a mouse.

Outfitted with a pair of microphones and speakers smaller than pencil erasers, the EchoSpeech glasses become a wearable AI-powered sonar system, sending and receiving soundwaves across the face and sensing mouth movements. A deep learning algorithm, also developed by SciFi Lab researchers, then analyzes these echo profiles in real time, with about 95% accuracy.
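The article does not describe EchoSpeech's signal processing, so the following is only a generic illustration of the sonar principle it mentions: a known signal is emitted, and the delay of its echo is found by sliding the emitted signal along the recording and looking for the best match (cross-correlation). The function name `estimate_echo_delay` and the toy pulse are assumptions, not the SciFi Lab's method.

```python
def estimate_echo_delay(transmitted, received):
    """Return the lag, in samples, where `received` best matches `transmitted`."""
    best_lag, best_score = 0, float("-inf")
    max_lag = len(received) - len(transmitted)
    for lag in range(max_lag + 1):
        # Correlation score of the emitted pulse against this window.
        score = sum(t * received[lag + k] for k, t in enumerate(transmitted))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

A change in the reflecting surface, such as the lips or jaw moving, shifts or reshapes the echo; a sequence of such measurements over time is the kind of input a learning algorithm could then classify.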

“We’re moving sonar onto the body,” said Cheng Zhang, assistant professor of information science in the Cornell Ann S. Bowers College of Computing and Information Science and director of the SciFi Lab.

“We’re very excited about this system,” he said, “because it really pushes the field forward on performance and privacy. It’s small, low-power and privacy-sensitive, which are all important features for deploying new, wearable technologies in the real world.”

The SciFi Lab has developed several wearable devices that track body, hand and facial movements using machine learning and wearable, miniature video cameras. Recently, the lab has shifted away from cameras and toward acoustic sensing to track face and body movements, citing improved battery life; tighter security and privacy; and smaller, more compact hardware. EchoSpeech builds off the lab’s similar acoustic-sensing device called EarIO, a wearable earbud that tracks facial movements.

Most technology in silent-speech recognition is limited to a select set of predetermined commands and requires the user to face or wear a camera, which is neither practical nor feasible, Cheng Zhang said. There also are major privacy concerns involving wearable cameras – for both the user and those with whom the user interacts, he said.

Acoustic-sensing technology like EchoSpeech removes the need for wearable video cameras. And because audio data is much smaller than image or video data, it requires less bandwidth to process and can be relayed to a smartphone via Bluetooth in real time, said François Guimbretière, professor in information science in Cornell Bowers CIS and a co-author.

“And because the data is processed locally on your smartphone instead of uploaded to the cloud,” he said, “privacy-sensitive information never leaves your control.”

Battery life improves exponentially, too, Cheng Zhang said: Ten hours with acoustic sensing versus 30 minutes with a camera.

The team is exploring commercializing the technology behind EchoSpeech, thanks in part to Ignite: Cornell Research Lab to Market gap funding.

In forthcoming work, SciFi Lab researchers are exploring smart-glass applications to track facial, eye and upper body movements.

“We think glass will be an important personal computing platform to understand human activities in everyday settings,” Cheng Zhang said.

Other co-authors were information science doctoral student Ke Li, Yihong Hao ’24, Yufan Wang ’24 and Zhengnan Lai ’25. This research was funded in part by the National Science Foundation.

Louis DiPietro is a writer for the Cornell Ann S. Bowers College of Computing and Information Science.
